Abstract

Neurocritical care bioinformatics is a new field that focuses on the acquisition, storage and analysis of physiological and other data relevant to the bedside care of patients with acute neurological conditions such as traumatic brain injury or stroke. The main focus of neurocritical care for these conditions relates to prevention, detection and management of secondary brain injury, which relies heavily on monitoring of systemic and cerebral parameters (such as blood-pressure level and intracranial pressure). Advanced neuromonitoring tools also exist that enable measurement of brain tissue oxygen tension, cerebral oxygen utilization, and aerobic metabolism. The ability to analyze these advanced data for real-time clinical care, however, remains intuitive and primitive. Advanced statistical and mathematical tools are now being applied to the large volume of clinical physiological data routinely monitored in neurocritical care with the goal of identifying better markers of brain injury and providing clinicians with improved ability to target specific goals in the management of these patients. This Review provides an introduction to the concepts of multimodal monitoring for secondary brain injury in neurocritical care and outlines initial and future approaches using informatics tools for understanding and applying these data to clinical care.

Key Points

- Monitoring for secondary brain injury is a fundamental aspect of neurocritical care

- Advances in neuromonitoring technologies have been profound and now include the ability to directly monitor brain oxygenation, cerebral blood flow, and cerebral metabolism in, essentially, real time

- Despite these advances, data from bedside monitors in neurocritical care are evaluated by clinicians in much the same way as 40 years ago

- Informatics has fundamentally changed many fields in medicine including epidemiology, genetics and pharmacology

- New data-acquisition, storage and analytical tools are now being applied to neurocritical care data to harness the large volume of data now available to clinicians

- Neurocritical care bioinformatics is an emerging field that will require collaboration between clinicians, computer scientists, engineers, and informatics experts to bring user-friendly, real-time advances to the patient bedside

Introduction

Intensive care medicine has been described as “the art of managing extreme complexity”.1 In neurocritical care, this complexity is magnified by limitations in the clinical assessment of patients with brain injury and different primary and secondary brain injury pathways.2 Prevention, detection and management of secondary brain injury are the main purposes of neurocritical care.3,4 These goals are accomplished through neurological examination, neuroimaging studies (such as CT or MRI), and monitoring of a wide range of systemic and neurophysiological parameters (called multimodal monitoring).5,6,7 Not surprisingly, the neurocritical care unit is a data-intensive environment (Figure 1). Utilization of these data in real-time decision-making for patient care represents the art of neurocritical care practice. Several questions arise regarding multimodal monitoring. For instance, the parameters that should be measured and how often these measurements should be taken are unclear. Furthermore, is every piece of information valuable or can some be discarded and, if so, which and when? As we develop new ways of monitoring, how can multiple parameters be integrated into a coherent picture of the patient's condition?

The image shows a neurocritical care bed at San Francisco General Hospital, CA, USA. The neurocritical care unit is a data-intensive and clinically complex environment, as indicated by the presence of the patient, two nurses, a respiratory therapist, and multiple bedside devices and monitors including a mechanical ventilator, multiple pumps for intravenous medications, and separate computerized devices for measuring levels of intracranial pressure, brain tissue oxygen tension, jugular venous oxygen saturation, and cerebral blood flow. An overhead monitor displays these data continuously (background in green), and a computerized bedside charting system (foreground) is used to automatically and manually record this and other information into the medical record. Despite the volume of information, charts and monitors display and record raw data generally without advanced analysis. Permission obtained from the American Academy of Neurology © Hemphill, J. C. & De Georgia, M. American Academy of Neurology [online], (2008).

Neurocritical care bioinformatics is an emerging field that attempts to bring order to this chaos and provide insight into disease processes and treatment paradigms.8,9 In this Review, we describe the current state of multimodal monitoring, initial forays into the use of neurocritical care bioinformatics, and the potential for this discipline to shape the future of neurocritical care.

Secondary brain injury

When patients have an acute neurological catastrophe such as traumatic brain injury (TBI) or stroke, damage to the brain can occur at the time of the initial event. This damage is termed primary brain injury, and the underlying mechanisms include: intraparenchymal or extra-axial traumatic or spontaneous hemorrhage; diffuse axonal injury (in TBI); and focal or global ischemia from acute ischemic stroke or global cerebral ischemia during cardiac arrest. Although interventions (such as surgical hematoma evacuation) can be undertaken to limit or reverse primary brain injury, much of the primary damage may be irreversible. In most acute neurocritical care conditions, however, this primary brain injury initiates a cascade of biochemical events that are, at least at onset, reversible. This process is termed secondary brain injury and its management is of fundamental importance to the treatment of patients with TBI, ischemic and hemorrhagic stroke, and global cerebral ischemia.10

Secondary brain injury can generally be considered as two related concepts: cellular injury cascades and secondary brain insults (SBIs). An example of the cellular injury cascade is the ischemic cascade in which events such as excitotoxicity, intracellular calcium influx, and free radical membrane damage are ongoing. Hence, ultra-early ischemic stroke treatment via revascularization of an occluded intracranial artery with thrombolytic agents is intended to restore perfusion before permanent cell death, thereby reversing this secondary brain injury. In addition to initiating ischemic and apoptotic cell injury cascades, primary brain injuries make injured, but salvageable, brain tissue vulnerable to SBIs. These insults are usually well-tolerated but, when occurring in an injured brain, can lead to further cell death and worsened patient outcome. Hypotension, hypoxia and hypoglycemia are all examples of SBIs in which decreased substrate delivery to an injured brain further worsens injury.4 On the other hand, fever, seizures and hyperglycemia are examples of SBIs in which increased metabolic demand may outstrip compensatory mechanisms and result in further injury.11,12

Current management paradigms for TBI, stroke, status epilepticus and, essentially, all acute brain disorders encountered in neurocritical care, center around the goal of limiting secondary brain injury. For example, surgical evacuation of a subdural hematoma is intended to limit tissue damage from brain herniation or ischemic damage from low cerebral perfusion. In patients with subarachnoid-hemorrhage-related vasospasm, the use of pressors to increase the systemic blood-pressure level is intended to increase blood flow and, therefore, oxygen delivery to vulnerable brain tissue. However, effective management of secondary brain injury relies on the ability to detect its occurrence, monitor its progress, and avoid overtreatment with interventions that often have their own risks. Previous approaches have involved monitoring of systemic parameters, such as blood-pressure level and peripheral oxygen saturation, with the hope of adequately protecting the brain from secondary injury, whereas current and future approaches in neurocritical care emphasize the ability to directly monitor the brain and to develop better tools to integrate these sometimes complex measures.

Multimodal monitoring

Intracranial pressure

Intracranial pressure (ICP) is the most commonly monitored brain-specific physiological parameter in the neurocritical care unit (Box 1). Vast experience exists with ICP monitoring in TBI, aneurysmal subarachnoid hemorrhage, ischemic stroke, and intracerebral hemorrhage.13 Although no prospective randomized trials have confirmed a benefit from ICP monitoring (or, indeed, from the use of any patient monitor), ICP monitoring and management is, nevertheless, generally considered standard, and guidelines exist for both TBI and stroke.14,15,16

The gold standard device for monitoring ICP is a ventricular catheter attached to an external micro-strain gauge. The device can be re-zeroed at any time and can also be used to drain cerebrospinal fluid to treat elevated ICP. Ventricular catheters are usually set in a position to display ICP, with drainage performed intermittently to maintain ICP, usually <20 mmHg. These devices can be inserted at the time of surgery or in the intensive care unit (ICU). The zero point for the ICP transducer is the tragus of the ear. Potential complications associated with this device are bleeding and infection.17,18

An intraparenchymal fiberoptic device, inserted at the bedside via a cranial bolt, is an alternative way to monitor ICP. The device is connected to a separate bedside monitor to continuously display the ICP waveform. Several types are available and most have only modest measurement drift, independent of the duration of monitoring. The risk of infection and bleeding are lower than for ventricular catheters, but the inability to re-zero intraparenchymal fiberoptic ICP monitors after placement or drain the cerebrospinal fluid are disadvantages.19,20

Cerebral perfusion pressure

Current Brain Trauma Foundation (BTF) guidelines for management of severe TBI recommend maintaining ICP <20 mmHg. Guidelines for other disorders such as intracerebral hemorrhage have generally followed this threshold despite fewer disease-specific data.16,21 The cerebral perfusion pressure (CPP) is the difference between systemic mean arterial pressure and ICP (ideally using the same zero reference point for ICP and arterial pressure). The CPP is the driving pressure for cerebral blood flow across the microvascular capillary bed.17,18 Since 1995, treatment approaches have emphasized ensuring an adequate CPP level to avoid secondary cerebral ischemia and as a treatment for elevated ICP. With intact cerebral autoregulation, increasing the CPP can result in compensatory vasoconstriction, thereby reducing cerebral blood volume and ICP.22 This approach has been called 'CPP therapy'. Despite its physiological appeal, the sole randomized controlled trial comparing CPP therapy (CPP >70 mmHg) and ICP therapy (ICP <20 mmHg) found no difference in outcomes, probably because of increased pulmonary complications in the CPP therapy group.23 However, existing studies have moved beyond this 'one size fits all' CPP target and have emphasized that individual patients may have different CPP thresholds depending on the degree of autoregulation and intracranial compliance.22,23,24,25 For example, Howells et al. found that if autoregulation was impaired, treatment targeting a higher CPP (>70 mmHg) resulted in worse functional outcomes in patients with TBI than did treatment targeting a lower CPP (50–60 mmHg). If autoregulation was intact, high CPP levels resulted in improved outcomes.25 BTF guidelines now recommend avoidance of a CPP <50 mmHg and to consider cerebral autoregulation status when selecting a CPP target in a specific patient.21 Unfortunately, current standard methods of viewing, recording and analyzing ICP and CPP data do not allow bedside clinicians to easily assess cerebral autoregulation.
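The definition of CPP given above can be written out as a short calculation. The sketch below is illustrative only: the function names are hypothetical, and the default limits are simply the ICP (<20 mmHg) and CPP (<50 mmHg) values quoted in this section, not a clinical decision rule.

```python
def cerebral_perfusion_pressure(map_mmhg, icp_mmhg):
    """CPP = MAP - ICP, both in mmHg and referenced to the same zero point."""
    return map_mmhg - icp_mmhg


def flag_thresholds(map_mmhg, icp_mmhg, icp_limit=20.0, cpp_floor=50.0):
    """Compare one MAP/ICP pair with the thresholds quoted in the text.

    icp_limit and cpp_floor are illustrative defaults (assumptions of this
    sketch); individual patients may need different targets.
    """
    cpp = cerebral_perfusion_pressure(map_mmhg, icp_mmhg)
    return {
        "cpp": cpp,
        "icp_high": icp_mmhg >= icp_limit,
        "cpp_low": cpp < cpp_floor,
    }


example = flag_thresholds(map_mmhg=65.0, icp_mmhg=25.0)
```

A single pair of values says nothing about autoregulation; that requires looking at how ICP responds to changes in arterial pressure over time.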

Brain tissue oxygen tension

New methods of advanced neuromonitoring allow more-direct measurement of cerebral oxygenation and metabolism. Monitoring of brain tissue oxygen tension (PbtO2) involves placement of a micro Clark electrode (closed polarographic oxygen probe with reversible electromagnetic actions and semipermeable membrane) into the brain parenchyma using a method similar to that for placement of an intraparenchymal fiberoptic ICP monitor.26 PbtO2 values <15 mmHg are associated with worsened outcome in patients with TBI, although prospective trials of improving PbtO2 have not yet been performed.27,28,29 Brain temperature is also measured concurrently, although the specific effect of brain temperature (as opposed to body temperature) in fever-related secondary brain injury has not been well-studied.28,30

Cerebral blood flow

Continuous monitoring of cerebral blood flow (CBF) is intuitively appealing, although, up until the past few years, this approach was not practical at the bedside. A currently available method of continuous quantitative CBF monitoring uses the principle of thermal diffusion and involves inserting a probe with two small thermistors—a proximal one set at tissue temperature and a distal one that is heated by 2 °C above this tissue temperature—into the brain. The distal thermistor measures the tissue's ability to dissipate heat: the greater the blood flow, the greater the dissipation of heat. A microprocessor then converts this information into a measure of CBF in ml/100 g/min.31 CBF monitoring has been used in patients with head trauma32 or subarachnoid hemorrhage,33 and during neurosurgical procedures.34 Because many interventions in neurocritical care are based on the principle of augmenting arterial blood flow, information provided by CBF monitoring can be helpful in guiding clinical management.35

Jugular venous oxygen saturation

By placing a small fiberoptic catheter in the internal jugular vein and advancing the tip to the jugular bulb, jugular venous oxygen saturation (SjvO2) can be measured.36 SjvO2 represents a measure of global cerebral oxygen extraction. Values <50% represent 'ischemic desaturations' and are associated with worsened outcome in patients with TBI. Values >75% represent luxury perfusion, in which blood flow substantially exceeds that necessary for tissue metabolic demand, and are similarly associated with poor outcome in patients with TBI.36,37 As a global measure, SjvO2 monitoring is complementary to focal monitoring of PbtO2.

Cerebral microdialysis

Cerebral microdialysis involves placement of a small catheter into the brain parenchyma, either during surgery or through a burr hole and secured by a cranial bolt.38 Extracellular concentrations of ischemic metabolites, such as lactate and pyruvate, can then be measured. The lactate:pyruvate ratio is currently the best marker of the brain redox state and an early biomarker of secondary ischemic injury; lactate:pyruvate ratio >40 is indicative of cerebral metabolic crisis.39 Glycerol, a marker of cell membrane damage, and glutamate, an excitatory amino acid, provide additional evidence of developing brain injury.40 It is important to remember that ischemic hypoxia is just one of the many types of brain hypoxia that can be detected in the injured brain.41

A limitation to microdialysis is that fluid transports slowly through the catheter, and measured values represent cerebral events that occurred 20–60 min earlier, depending on the collection interval. Microdialysis offers the potential for measurement of multiple new parameters, but pattern recognition is difficult and clinical studies are limited. The use of new, larger-pore membranes provides the possibility of measuring the broader neuroinflammatory cascades elicited by TBI.42 Microdialysis might also be used for profiling of new neuroprotective drugs. Presence of any drug in the extracellular fluid does not guarantee a neuroprotective effect: if a drug cannot cross the blood–brain barrier, the brain will not be targeted. A better understanding of the concentrations of any drug found in the brain tissue is an important step in considering new therapeutic pharmacological candidates for neuroprotection. At present, microdialysis is generally considered only a research tool, despite being increasingly used in the clinic to guide management.43

Summary

In addition to the above parameters, many other tools may be used for monitoring in neurocritical care. Continuous EEG monitoring using either surface or intracortical electrodes is increasingly used for the detection of subclinical seizures or evolving ischemia.44,45 Near-infrared spectroscopy for brain oxygenation, brain compliance monitoring, and quantitative pupillometry are being considered as emerging tools.44,46,47,48 Despite the availability of many tools for monitoring relevant biological processes in neurocritical care, important questions remain regarding what they measure, how they relate to the familiar parameters such as ICP and CPP, and how they should be integrated into bedside clinical care. In fact, it was precisely these questions about how to integrate advanced neuromonitoring methods that clearly illustrated the need for newer bioinformatics approaches, including improved acquisition and recording of data, more in-depth analysis, and translation of data for patient care.49

Neurocritical care bioinformatics

Acquisition, integration and synchronization

Although the ability to monitor the body and the brain in critical care has advanced tremendously over the past few decades, methods of recording these data for bedside use, archiving them for future review, and analyzing them remain primitive and underutilized.50 In fact, paper charts are still the most commonly used data record in the ICU and have some advantages: familiarity with the format, the ability to visualize data in a predictable way (usually one 2 ft by 3 ft sheet for a 24 h period), and the inherent validation that occurs when transcribing data. Electronic medical records (EMRs) have the potential to reduce medical errors, increase ease of record keeping, and provide more-reliable documentation for regulatory oversight than traditional paper charts.51,52 Nevertheless, most commercial bedside EMRs merely recapitulate the format of paper charts, albeit in electronic form, without providing additional analytical power. Data logging is still done laboriously and intermittently, often hourly.

Although this data logging can provide some general trend information, it necessarily obscures the underlying data structure, especially information about waveform morphology. If data are sampled too infrequently or for too short a duration, information can be missed. Physiological signals can have information content at frequencies exceeding 0.2 kHz, so information can be lost when data are sampled at rates below 0.5–1 kHz (that is, at intervals longer than 1–2 ms).53 Moreover, even if the individual devices are capable of recording higher-time-resolution data, without some way to store and review these data, they are basically useless; the information scrolls across the screen and disappears forever. Thus, in most neurocritical care units, clinicians can view monitored physiological data continuously by watching the bedside monitor but, once away from the bedside, they only have access to intermittently-recorded values. This disconnect emphasizes that collecting and archiving data is the crucial first step to information management.54
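The loss of information from sampling too slowly can be shown with a small numerical sketch. It assumes a pure 200 Hz (0.2 kHz) sine wave as a stand-in for a fast physiological component; the function names and the 250 Hz sampling rate are hypothetical choices for illustration. Sampled at 250 Hz, the 200 Hz wave produces exactly the same samples (apart from sign) as a 50 Hz wave, so the two can no longer be told apart.

```python
import math


def sample_sine(freq_hz, rate_hz, n_samples):
    """Sample a unit-amplitude sine wave of freq_hz at rate_hz."""
    return [math.sin(2 * math.pi * freq_hz * n / rate_hz) for n in range(n_samples)]


def nyquist_ok(signal_hz, rate_hz):
    """True when the sampling rate exceeds twice the signal frequency."""
    return rate_hz > 2 * signal_hz


# A 200 Hz component sampled at only 250 Hz (illustrative values).
undersampled = sample_sine(200.0, 250.0, 50)
# A genuine 50 Hz component sampled at the same rate.
alias = sample_sine(50.0, 250.0, 50)

# The undersampled 200 Hz samples equal the negated 50 Hz samples.
max_diff = max(abs(u + a) for u, a in zip(undersampled, alias))
```

Sampling the same 200 Hz component at 1 kHz satisfies `nyquist_ok`, which is consistent with the 0.5–1 kHz range mentioned above.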

Integration of data is the next step in neurocritical care bioinformatics. In any ICU, finding a comprehensive set of all available physiological data (as well as data from patient records, laboratory studies, imaging findings and so on) for a patient in one place is virtually impossible. For the most part, individual monitors are self-contained, stand-alone units. This inability to integrate physiological signal data simultaneously into one searchable data set has been a major limiting factor in the ability to use intensive care monitoring data for more-advanced real-time analysis. Many reasons exist for this poor data integration, but the lack of interoperability (for example, the inability of different information technology systems and software applications to exchange data accurately and consistently) has been the main problem. Unlike the interoperable 'plug and play' environment of modern computers and consumer electronics, most acute-care medical devices are not designed to interoperate. In 2009, the concept of an 'integrated clinical environment' was adopted by the American Society for Testing and Materials and now serves as the basic template for future development of medical devices and systems.55

Two other fundamental aspects of high-resolution data acquisition relate to data integrity with regard to timing and artifact detection. Integration of physiological data is only meaningful when combined with high-resolution time synchronization. Without a 'master clock' ensuring that all the values and waveforms acquired at the same time 'line up' exactly in synchronization, interpreting the information and understanding the inter-relationships is difficult, if not impossible.
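One simple way to picture the 'master clock' idea is to resample every device stream onto a shared time grid. The sketch below is a minimal illustration under stated assumptions: each stream is a list of (timestamp in seconds, value) pairs sorted by time, the device clock offset is known, and a sample is only used if it is recent enough. The names `align_to_clock`, `clock_offset_s` and `max_age_s` are hypothetical.

```python
from bisect import bisect_right


def align_to_clock(grid_s, stream, max_age_s, clock_offset_s=0.0):
    """Map a device stream onto master-clock times.

    For each grid time, return the most recent sample that is no older than
    max_age_s; return None where no such sample exists. clock_offset_s is
    subtracted from the device timestamps (assumed known in this sketch).
    """
    times = [t - clock_offset_s for t, _ in stream]
    aligned = []
    for g in grid_s:
        i = bisect_right(times, g) - 1  # index of last sample at or before g
        if i >= 0 and g - times[i] <= max_age_s:
            aligned.append(stream[i][1])
        else:
            aligned.append(None)
    return aligned


grid = [0.0, 1.0, 2.0, 3.0]
icp_stream = [(0.0, 12), (0.9, 13), (2.1, 15)]  # made-up values
icp_on_grid = align_to_clock(grid, icp_stream, max_age_s=1.0)
```

Leaving gaps as `None` rather than carrying old values forward indefinitely keeps stale readings from being mistaken for current ones.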

Finally, when all data produced by a monitor are recorded, some values are likely to be artifacts. In fact, the frequent occurrence of artifactual data in the ICU is taken for granted: a transducer is moved from a patient's bedside, a stopcock is opened to drain cerebrospinal fluid (thereby rendering the recorded value inaccurate), or a monitor is turned off and on during patient transport. Ironically, when using a paper chart these values are 'cleaned' by bedside nurses who never enter them because of their obvious artifactual nature. However, when using a high-resolution data-acquisition system that might record values 60 times a second or more, automated data-cleaning algorithms are needed to avoid interpreting artifactual data as real. Thus, while data acquisition may seem straightforward to clinicians whose interests lie in interpreting patient data for prognosis and treatment, many fundamental barriers must still be overcome to ensure that these data are accurate, real and integrated before advanced analysis can even be considered.56
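A very simple automated cleaning rule might combine a plausibility range with a check against recent values. The sketch below is an assumption-laden illustration, not a validated algorithm: the function name, the range limits, the window length and the jump threshold are all hypothetical values chosen for the example.

```python
from statistics import median


def clean_icp(values, low=-10.0, high=100.0, window=5, max_jump=20.0):
    """Replace implausible ICP samples (mmHg) with None.

    A sample is rejected if it lies outside [low, high], or if it differs by
    more than max_jump from the median of the last `window` accepted samples
    (applied once at least three samples have been accepted).
    All thresholds are illustrative assumptions.
    """
    cleaned, recent = [], []
    for v in values:
        ok = low <= v <= high
        if ok and len(recent) >= 3:
            ok = abs(v - median(recent)) <= max_jump
        cleaned.append(v if ok else None)
        if ok:
            recent = (recent + [v])[-window:]
    return cleaned


raw = [12, 13, 12, 14, 250, 13, -40, 45, 14]  # made-up trace with artifacts
cleaned = clean_icp(raw)
```

A rule this simple has an obvious weakness: a genuine, abrupt and sustained rise would keep being rejected, so any practical cleaner would need additional logic and clinical validation.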

Once a comprehensive database of integrated, precisely time-stamped physiological signals is created (without artifact), complemented by relevant clinical observations, laboratory results, and imaging data, clinicians can then begin to formulate and test hypotheses about the underlying dynamic physiological processes in patients. Systems are being designed specifically for this purpose, and some commercially available software and hardware solutions are emerging.57

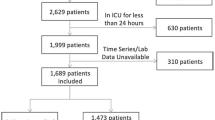

Most systems used for early studies have, however, been 'home-grown', one-off systems that are built within an institution to serve the needs of researchers with a specific interest in physiological informatics.9,54,58,59 These systems are generally in one of two forms: a kiosk-type system in which a computerized data-acquisition unit is brought to the patient's bedside (Figure 2) and connected to the output ports of various monitors, or a distributed system in which data from bedside monitors are sent continuously to a remote server.59 A kiosk system is less expensive than a distributed system, but only allows data acquisition from one patient at a time and then only when the system is connected and turned on. Distributed systems are costlier because they require set-up in multiple ICU beds and a remote server for storage, but they are better for large-scale neurocritical care bioinformatics work than are kiosk systems. Barriers related to data security and systems architecture, however, have made implementation, especially across multiple hospitals, challenging. Certain groups, such as the BrainIT (Brain monitoring with Information Technology) consortium, have overcome this barrier in the short term by allowing investigators from various sites to add data to a group database voluntarily.60,61

A mobile system is moved to the bedside and attached to various monitoring devices. Built-in software may provide advanced analysis for real-time decision support. Disadvantages of these systems are that usually only one patient can be monitored at a time, and data are not acquired unless the device is manually connected. Image courtesy of Richard Moberg, CNS Technology, Moberg Research, Philadelphia, PA, USA.

Translating data into clinical information

Ultimately, neurocritical care bioinformatics is intended to bring practical patient-based information to the bedside to be used in clinical decision-making. Some of the initial uses have tackled relatively modest questions compared with potential future uses such as predictive modeling of patient disease states. However, this preliminary work does demonstrate that analysis of patient physiological data, rather than just the usual display of raw data in a bedside chart, can bring unique insights that are clinically useful and can be implemented with current technology. For example, rather than just displaying the maximum daily temperature as the measure of fever, calculating the area under the curve (AUC) above a specific cut-off (such as >38.5 °C) provides a more-robust measure of 'dose' of fever, which can be used to track the effectiveness of interventions such as fever control or hypothermia therapy.62
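The fever 'dose' described above can be sketched as a trapezoidal area above a cut-off. This is a minimal illustration: the function name is hypothetical, the temperatures are made up, and the method used in the cited work may differ in detail.

```python
def fever_burden(times_h, temps_c, cutoff_c=38.5):
    """Area (degrees C x hours) of the temperature curve above cutoff_c.

    Uses linear interpolation between samples; where a segment crosses the
    cut-off, only the triangle above the cut-off is counted.
    """
    area = 0.0
    for i in range(len(times_h) - 1):
        dt = times_h[i + 1] - times_h[i]
        e0 = temps_c[i] - cutoff_c       # excess at segment start
        e1 = temps_c[i + 1] - cutoff_c   # excess at segment end
        if e0 <= 0 and e1 <= 0:
            continue                     # entirely at or below the cut-off
        if e0 >= 0 and e1 >= 0:
            area += 0.5 * (e0 + e1) * dt  # full trapezoid
        else:
            pos = max(e0, e1)
            frac = pos / (abs(e0) + abs(e1))  # share of dt spent above cut-off
            area += 0.5 * pos * frac * dt
    return area


# Two hypothetical patients with the same maximum temperature (39.5 C).
brief = fever_burden([0, 1, 2, 3], [38.5, 39.5, 38.5, 38.5])
sustained = fever_burden([0, 1, 2, 3], [38.5, 39.5, 39.5, 38.5])
```

Both traces share the same daily maximum, yet the sustained fever carries twice the burden, which is the distinction the maximum alone cannot show.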

Although cerebral autoregulation may be an important factor in determining optimal cerebral perfusion after acute brain injury, this process is difficult to determine from raw data at the bedside. The pressure reactivity index (PRx) is a moving correlation coefficient between mean arterial pressure and ICP that provides information about whether cerebral pressure autoregulation is intact, and has been used to determine the optimal CPP for patient management after TBI and intracranial hemorrhage.63,64
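A moving correlation of this kind can be sketched in a few lines. The example assumes that mean arterial pressure and ICP have already been reduced to time-averaged samples and uses a window of 30 samples; the window length, the function names and the synthetic data are assumptions of this sketch rather than a specification of PRx as implemented in any particular system.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient; NaN if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def moving_correlation(map_series, icp_series, window=30):
    """Correlation of MAP and ICP over each consecutive window of samples."""
    return [
        pearson(map_series[i - window:i], icp_series[i - window:i])
        for i in range(window, len(map_series) + 1)
    ]


# Synthetic slow waves in arterial pressure (made-up values).
map_series = [80 + 5 * math.sin(i / 5) for i in range(60)]
# ICP passively following pressure, and ICP moving in the opposite direction.
icp_passive = [15 + 0.4 * (m - 80) for m in map_series]
icp_reactive = [15 - 0.2 * (m - 80) for m in map_series]

prx_passive = moving_correlation(map_series, icp_passive)
prx_reactive = moving_correlation(map_series, icp_reactive)
```

Values near +1 indicate that ICP passively tracks arterial pressure, as expected when pressure reactivity is impaired, whereas zero or negative values are consistent with preserved reactivity.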

AUC 'dose' and PRx are both examples of indices calculated using informatics that add information to patient assessment. Neither uses sophisticated analytical tools to tackle complex multivariable modeling. However, to even use these simple informatics applications, digital data acquisition and real-time data analysis are required.

Advanced bioinformatics

The promise of neurocritical care bioinformatics lies in the potential to use advanced analytical techniques on high-resolution multimodal physiological data to improve patient outcome. The hope is that these more-advanced analytical tools will lead to better understanding of the complex relationships between various physiological parameters, improve the ability to predict future events (not only outcome, but also short-term events such as elevated ICP or low PbtO2), and thereby provide targets for individualized treatment in real time.65 Advanced analysis of physiological critical care data can be divided into two general approaches: data-driven methods and model-based methods (Box 2).

Data-driven methods

Data-driven methods can be thought of as using existing data to learn to predict an outcome of interest on the basis of previously unseen data. Analysis is trained on existing data sets in which outcomes are known (supervised learning) or analyzed in an exploratory manner (unsupervised learning) to find unexpected relationships between parameters through 'data mining'. Examples of supervised learning methods include regression analysis, decision trees, and neural networks. An example of unsupervised learning is cluster analysis.

Regression analysis

Regression is a familiar tool that attempts to fit a linear model to parameters on the basis of a provided outcome, with the nature of this outcome determining the specific type of regression analysis to be performed. Continuous outcomes use linear regression, while binary outcomes use logistic regression and categorical ordinal outcomes can be analyzed using ordinal regression. Multivariable regression analysis is routinely used in epidemiological studies of disease prediction and can also be applied to physiological data. Hemphill et al.27 used lagged regression analysis on time-series physiological data to identify an association between changes in the fraction of inspired oxygen (FiO2) or mean arterial pressure and subsequent changes in PbtO2 in patients with intracerebral hemorrhage. Although the concept of multivariable analysis on physiological parameters is appealing, problems are associated with this approach. Regression analysis assumes that all data are of value, presents challenges for the inclusion of time-series data, and generally assumes a linear relationship between parameters (or their transformations) and outcome. Consequently, other analytical methods may ultimately be of more use in neurocritical care bioinformatics.
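The idea of a lagged regression can be illustrated with ordinary least squares on shifted series. This is a toy sketch, not the analysis used in the cited study: the function names and data are hypothetical, and the lag is chosen by a crude comparison of mean squared error that ignores autocorrelation in the time series.

```python
def ols_fit(x, y):
    """Least-squares slope and intercept for y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def lagged_fit(x, y, lag):
    """Regress y[t] on x[t - lag] (lag in samples, lag >= 0)."""
    if lag == 0:
        return ols_fit(x, y)
    return ols_fit(x[:-lag], y[lag:])


def best_lag(x, y, max_lag):
    """Lag in 0..max_lag with the smallest mean squared residual."""
    def mse(lag):
        xs, ys = (x, y) if lag == 0 else (x[:-lag], y[lag:])
        slope, intercept = ols_fit(xs, ys)
        return sum((b - (intercept + slope * a)) ** 2 for a, b in zip(xs, ys)) / len(xs)
    return min(range(max_lag + 1), key=mse)


# Made-up predictor, and a response that follows it two samples later.
x = [0, 1, 4, 2, 5, 3, 6, 2, 1, 4]
y = [30, 30] + [20 + 2 * v for v in x[:-2]]

slope, intercept = lagged_fit(x, y, lag=2)
chosen_lag = best_lag(x, y, max_lag=3)
```

The limitations listed in the paragraph above still apply: the fit is linear and treats every sample as equally informative.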

Decision tree analysis

Decision trees are useful to analyze multivariate data, particularly those with discrete inputs (sex, injury type, drug dosage), and have been implemented to refine estimates of prognosis.66 For example, a set of patients might be grouped together at the 'top' of a tree. At each branch, the set can be divided into two (for example, whether a patient is female or male) or more (for example, did they receive 5 mg, 10 mg or 15 mg of drug?). The nature of the rules can be optimized according to various algorithms. The final subsets are evaluated on the basis of an end point (for example, females who received 5 mg of drug showed 70% improvement, females who received 10 mg showed 80% improvement, and so on). Andrews and co-workers67 used this technique to identify subgroups of patients with head injury who had a poor prognosis. Figure 3 demonstrates how decision trees can be visually informative for clinicians for the prediction of TBI outcome. In particular, decision trees show how an individual patient sits within a well-developed predictive model.

The structure of the decision tree is determined from analyzed parameters rather than the usual clinical factors and cut-offs, which explains why admission pupils (that is, the size and reactivity of a patient's pupils on admission to the clinic) comes before age, Glasgow Coma Scale score, or grade of injury. Patients 'enter' the tree via the top node (admission pupils) and then are subsequently parsed into tree 'branches' on the basis of successive parameters until they reach a final terminus and an associated outcome prediction. Numbers in the outcome boxes reflect the total number of cases for that outcome (n) and the number of misclassified cases (m); decimals appear due to pruning of the tree (n/m). Permission obtained from the American Association of Neurosurgeons © Andrews, P. J. et al. J. Neurosurg. 97, 326–336 (2002).
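How a tree decides which parameter comes first can be sketched with a single split chosen by Gini impurity, one of several possible criteria. Everything in this example is hypothetical: the six patient records are invented, the function names are illustrative, and the criterion is not necessarily the one used to build the tree in Figure 3.

```python
def gini(labels):
    """Gini impurity of a list of binary (0/1) outcome labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(rows, outcome_key, feature_keys):
    """Find the (feature, value) split with the lowest weighted impurity.

    Each candidate split separates rows where feature == value from the rest.
    Returns (weighted_impurity, feature, value), or None if no split exists.
    """
    best = None
    n = len(rows)
    for key in feature_keys:
        for value in sorted({r[key] for r in rows}):
            left = [r[outcome_key] for r in rows if r[key] == value]
            right = [r[outcome_key] for r in rows if r[key] != value]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, key, value)
    return best


# Invented records: 1 = poor outcome, 0 = good outcome.
patients = [
    {"pupils": "reactive", "sex": "F", "poor_outcome": 0},
    {"pupils": "reactive", "sex": "M", "poor_outcome": 0},
    {"pupils": "reactive", "sex": "F", "poor_outcome": 0},
    {"pupils": "fixed", "sex": "M", "poor_outcome": 1},
    {"pupils": "fixed", "sex": "F", "poor_outcome": 1},
    {"pupils": "fixed", "sex": "M", "poor_outcome": 1},
]
top_node = best_split(patients, "poor_outcome", ["sex", "pupils"])
```

In this invented data set the pupil finding separates outcomes completely, so it is selected for the top node ahead of sex; a full tree repeats the same search within each branch.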

Neural networks

Artificial neural networks are powerful tools for multifactorial classification and multivariate nonlinear analysis. Modeled after neurophysiological learning, neural networks have the ability, through iterative training, to model complex data relationships and to discern patterns. With neural networks, Vath and co-workers could accurately predict outcomes after TBI for different combinations of clinical and neuromonitoring parameters.68 Hidden patterns can be uncovered and displayed using Kohonen self-organizing maps. Using this technique with microdialysis data, Nelson and co-workers69 showed that highly individualistic and complex patterns or 'states' exist. Similarly, using a dimension reduction technique called hierarchical clustering (which was developed for genomics to simplify data sets), Cohen and co-workers70 identified specific clusters of physiological data in trauma patients from which distinct patient states—described as at risk of infection, multiorgan failure, or death—could be defined. Prognostic patterns too complex to visualize could then be recognized and displayed using dendrograms and heat maps. Figure 4 shows an example of cluster analysis in which a self-organizing map—a tool widely used in genetics and genomics—is used to identify unexpected associations between physiological parameters across patients with TBI.

Heat maps in which genes are displayed across the top row and related genes cluster together have been commonly used in genetics. In this neurocritical care heat map, genes are replaced by physiological variables that cluster on the basis of association within and across patients such that they become hierarchically clustered into three groups of patients. As expected, MAP and ABP cluster together. In this specific case, ICP and inspiratory oxygen (or fraction of inspired oxygen, FiO2) were unexpectedly clustered, leading to the identification of previously unrecognized ICP elevations during bedside suctioning in this set of patients with TBI who were mechanically ventilated.9 Abbreviations: ABP, arterial blood pressure; CPP, cerebral perfusion pressure; ETCO2, end tidal carbon dioxide; ICP, intracranial pressure; MAP, mean arterial blood pressure; O2, oxygen; PbtO2, brain tissue oxygen tension; PEEP, positive end-expiratory pressure; SpO2, systemic oxygen saturation; TBI, traumatic brain injury. With kind permission from Springer Science+Business Media © Sorani, M.D. et al. Neurocrit. Care 7, 45–52 (2007).
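The clustering of physiological variables by association can be sketched in a few lines. The example below is only an illustration: it assumes agglomerative average-linkage clustering on a correlation distance (1 minus Pearson's r), which may differ from the method used in the cited studies, and the four short signals are invented numbers chosen so that ABP tracks MAP and ICP tracks FiO2. The function names `pearson` and `cluster_variables` are hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def cluster_variables(signals, n_clusters):
    """Merge the two closest clusters (average linkage) until n_clusters remain."""
    names = list(signals)
    # Correlation distance: 0 for perfectly correlated, 2 for anti-correlated.
    dist = {a: {b: 1.0 - pearson(signals[a], signals[b]) for b in names}
            for a in names}
    clusters = [[name] for name in names]
    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = sum(dist[a][b] for a in clusters[i] for b in clusters[j])
                d /= len(clusters[i]) * len(clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return [sorted(c) for c in clusters]

# Invented illustrative data (not patient data): two suctioning-like episodes
# at samples 2 and 5 raise both FiO2 and ICP.
signals = {
    "ABP":  [80, 92, 84, 88, 95, 82, 90, 86],
    "MAP":  [81, 91, 85, 87, 96, 81, 91, 85],
    "ICP":  [10, 12, 25, 11, 10, 28, 12, 11],
    "FiO2": [0.4, 0.4, 1.0, 0.4, 0.4, 1.0, 0.4, 0.4],
}

print(cluster_variables(signals, 2))
```

In a real analysis the merge order would be kept to draw the dendrogram, and the variables would first be normalized and time-aligned across patients.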

Complex systems analysis

Techniques for the analysis of nonlinear systems (complex systems analysis) have emerged from the mathematical and engineering sciences.71 Time series analysis, for example, measures variation over time and has been most often applied to monitoring of heart rate by evaluating intervals between consecutive QRS complexes. Decreased variability is thought to reflect system isolation and a reduced ability to respond to perturbations. Decreased heart rate variability is associated with poor outcome in patients with myocardial infarction72 or heart failure.73 Similarly, reduced ICP variability may be a better predictor of outcome than is the measure usually displayed at the bedside; namely, mean ICP.74 Frequency domain analysis displays the contributions of each sine wave as a function of its frequency (using Fourier transformation). The result is termed spectral analysis. Changes in spectral heart rate variability have been demonstrated in hypovolemia,75 hypertension,76 coronary artery disease,77 renal failure,78 and depth of anesthesia, among others.79
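As a rough sketch of the time-domain and frequency-domain measures described above, the following example computes the standard deviation of a series of R-R intervals and its power spectrum using a plain discrete Fourier transform. Several things here are assumptions for illustration only: the R-R series is synthetic, it is treated as already resampled onto an even 4 Hz grid, and the names `power_spectrum`, `FS` and `rr` are hypothetical.

```python
import cmath
import math
import statistics

def power_spectrum(samples, fs):
    """Return (frequency in Hz, power) pairs from a direct DFT of an evenly sampled series."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]  # remove the mean so the 0 Hz term does not dominate
    spectrum = []
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        spectrum.append((k * fs / n, abs(coeff) ** 2 / n))
    return spectrum

FS = 4.0  # assumed resampling rate in Hz

# Synthetic R-R intervals (seconds): 0.8 s baseline with a 0.25 Hz oscillation,
# loosely mimicking respiratory modulation of heart rate.
rr = [0.8 + 0.05 * math.sin(2 * math.pi * 0.25 * t / FS) for t in range(64)]

sd_rr = statistics.pstdev(rr)  # time-domain variability
peak_freq, peak_power = max(power_spectrum(rr, FS), key=lambda p: p[1])

print(f"SD of R-R intervals: {sd_rr:.4f} s")
print(f"Dominant frequency: {peak_freq:.3f} Hz")
```

Production code would normally use a fast Fourier transform and a windowed estimator; the direct transform is used here only to keep the sketch dependency-free.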

Approximate entropy (ApEn) provides a measure of the degree of randomness within a series of data. Heart rate ApEn decreases with age80 and is predictive of atrial fibrillation.81 Hornero and co-workers82 showed that ICP ApEn decreases with ICP elevations >25 mmHg. Papaioannou and co-workers83 also demonstrated that among critically ill patients, nonsurvivors had lower heart rate ApEn than survivors. As ApEn calculations can be sensitive to the length of the data series (especially with 'short and noisy' data sets), a modification, termed sample entropy (SampEn), was introduced.84 Using this approach, Lake and co-workers85 showed that heart rate entropy falls before clinical signs of neonatal sepsis become apparent.
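A minimal sketch of a sample entropy calculation is given below, following the usual definition SampEn = -ln(A/B), where B counts pairs of matching templates of length m and A counts pairs of matching templates of length m + 1, with self-matches excluded. The defaults (m = 2, tolerance of 0.2 times the standard deviation) are common conventions taken here as assumptions, the test signals are synthetic, and the function names are hypothetical.

```python
import math
import random
import statistics

def sample_entropy(series, m=2, r=0.2):
    """SampEn(m, r): -ln(A / B) with Chebyshev distance and no self-matches.

    r is an absolute tolerance; callers typically pass 0.2 * SD of the series.
    """
    n = len(series)

    def count_matches(length):
        # Use the same n - m starting points for both template lengths.
        templates = [series[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                if max(abs(a - b) for a, b in zip(templates[i], templates[j])) <= r:
                    count += 1
        return count

    b = count_matches(m)
    a = count_matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")  # undefined for this series length and tolerance
    return -math.log(a / b)

def default_tolerance(series):
    """Conventional tolerance: 0.2 times the standard deviation of the series."""
    return 0.2 * statistics.pstdev(series)

# Synthetic examples: a perfectly regular alternating signal and Gaussian noise.
regular = [0.0, 1.0] * 50
rng = random.Random(0)
noisy = [rng.gauss(0.0, 1.0) for _ in range(200)]

print("regular:", sample_entropy(regular, 2, default_tolerance(regular)))
print("noisy:  ", sample_entropy(noisy, 2, default_tolerance(noisy)))
```

The regular signal should yield a lower value than the noise, which is the sense in which a fall in entropy indicates a loss of complexity.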

Detrended fluctuation analysis is a technique for describing fractal scaling behavior of variability in physiological signals (similar patterns of variation across multiple timescales). Altered fractal scaling of the ICP signal is associated with poor outcome.86 Hopefully, the study of normal and pathological dynamics using an array of these complex systems analysis methods will provide unique insights into normal physiological relationships and the pathobiology of critical illness, which could lead to new mathematical models of disease prediction that are more accurate and realistic than existing models.87
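The steps of detrended fluctuation analysis can be sketched as follows: integrate the mean-centered signal, divide it into windows of a given size, remove a linear trend from each window, and measure how the root-mean-square residual grows with window size. The slope of that growth on log-log axes is the scaling exponent. This is a simplified first-order version under stated assumptions (non-overlapping windows, linear detrending, an arbitrary set of window sizes) applied to synthetic signals; the function names are hypothetical.

```python
import math
import random

def linear_fit(xs, ys):
    """Least-squares slope and intercept of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def dfa_exponent(series, scales):
    """Scaling exponent from first-order detrended fluctuation analysis."""
    mean = sum(series) / len(series)
    profile, total = [], 0.0
    for x in series:  # cumulative sum of deviations from the mean
        total += x - mean
        profile.append(total)

    log_n, log_f = [], []
    for n in scales:
        squared = []
        for start in range(0, len(profile) - n + 1, n):
            window = profile[start:start + n]
            t = list(range(n))
            slope, intercept = linear_fit(t, window)
            squared.extend((y - (slope * i + intercept)) ** 2
                           for i, y in zip(t, window))
        log_n.append(math.log(n))
        log_f.append(math.log(math.sqrt(sum(squared) / len(squared))))

    alpha, _ = linear_fit(log_n, log_f)
    return alpha

rng = random.Random(1)
white = [rng.gauss(0.0, 1.0) for _ in range(1024)]  # uncorrelated noise
walk, level = [], 0.0
for step in white:                                   # random walk built from the same noise
    level += step
    walk.append(level)

scales = [4, 8, 16, 32, 64]
print("white noise alpha:", round(dfa_exponent(white, scales), 2))
print("random walk alpha:", round(dfa_exponent(walk, scales), 2))
```

Uncorrelated noise is expected to give an exponent near 0.5 and a random walk a value near 1.5, so the two synthetic signals bracket the range in which physiological signals are usually interpreted.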

Model-based methods

Conceptualization of patients as existing in physiological and pathophysiological states has led to the idea that therapies may need to be redirected toward facilitating transitions toward favorable physiological states, as opposed to 'fixing' particular physiological variables.88,89 These states and the transitions between them are invisible to clinicians using current paper or spreadsheet-based ICU patient records, but may be assessed using model-based analytical methods borrowed from other scientific disciplines.

Dynamical system models

Dynamical system models describe, on the basis of classic mechanics such as pressure–volume–flow relationships, how systems evolve over time. For example, Ursino and co-workers90 modeled the interaction between cerebral vascular reserve, cerebral hemodynamics during arterial pressure changes, and relationships between CPP and autoregulation. Although dynamical system modeling is useful, the drawback is that most, if not all, biological systems behave in a nonlinear rather than a linear manner. In nonlinear systems, small changes can cause disproportionately large responses because the response is not simply the sum of the individual responses to each stimulus. Biological systems require data analysis that incorporates all interconnections and coupling between organ systems, and this approach may be conceptually better suited to the complex environment of neurocritical care.91,92,93 A degree of intrinsic complex and chaotic behavior also exists. While this behavior can be captured to some extent with dynamical system models, the deterministic nature of these systems raises concerns about accounting for all relevant biological interactions.
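To make the idea of a pressure–volume–flow model concrete, the sketch below integrates a generic two-element Windkessel compartment, in which compliance times the rate of pressure change equals inflow minus outflow through a resistance. This is a textbook lumped-parameter model chosen as an assumption for illustration; it is linear and much simpler than the cerebral hemodynamic models cited above, and the parameter values and units are arbitrary. The function name `simulate_windkessel` is hypothetical.

```python
def simulate_windkessel(inflow, resistance, compliance, p0, dt, duration):
    """Forward-Euler integration of C * dP/dt = Q_in - P / R.

    Returns the pressure trace, starting at p0, sampled every dt.
    """
    steps = int(round(duration / dt))
    pressure = [p0]
    for _ in range(steps):
        p = pressure[-1]
        dp_dt = (inflow - p / resistance) / compliance
        pressure.append(p + dt * dp_dt)
    return pressure

# Arbitrary illustrative parameters (not physiological estimates).
trace = simulate_windkessel(inflow=90.0, resistance=1.0, compliance=1.5,
                            p0=60.0, dt=0.01, duration=30.0)

print("initial pressure:", trace[0])
print("final pressure:  ", round(trace[-1], 3))
```

With constant inflow the pressure relaxes toward the product of inflow and resistance, with a time constant set by resistance times compliance. Making resistance or compliance depend on pressure would turn this into a nonlinear model of the kind discussed above.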

Dynamic Bayesian networks

Given the complexity of critical care data, a systematic real-time classification process for understanding a patient's condition is needed. One approach uses Bayesian inference, in which uncertainty is described by probabilities. To identify a diagnostic state, each possible state is assigned a probability that reflects the relative belief of it being the patient's actual state. These beliefs are then updated with empirical data, and the relative likelihoods of the observations are weighed via Bayes' rule. Posterior probabilities of a patient's state membership are systematically generated and embody our best guess as to the patient's current diagnostic state. Transitions between states can be predicted using dynamic Bayesian networks.94 Classification models, the collection of diagnostic states, can also take on structure that indicates relationships between states, such as partial orderings.95 Further techniques have been developed to tackle the ill-posed inverse problem, which arises when two diagnostic states cannot be distinguished from the information provided by the observable data.96
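The belief-updating cycle described above can be sketched as a two-step filter: a prediction step that applies state-transition probabilities, followed by an update step that reweights each state by the likelihood of the new observation (Bayes' rule). This corresponds to forward filtering in a hidden Markov model, one of the simplest dynamic Bayesian networks. The two states, the observation labels and every probability below are invented for illustration and are not estimates from any study; the function names are hypothetical.

```python
def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights.values())
    return {state: w / total for state, w in weights.items()}

def predict(belief, transition):
    """Propagate the belief one time step through the transition model."""
    return {nxt: sum(belief[cur] * transition[cur][nxt] for cur in belief)
            for nxt in belief}

def update(belief, likelihood):
    """Bayes' rule: posterior is proportional to prior times likelihood."""
    return normalize({s: belief[s] * likelihood[s] for s in belief})

# Hypothetical model: probability of moving between states per time step ...
transition = {
    "stable":  {"stable": 0.9, "at_risk": 0.1},
    "at_risk": {"stable": 0.2, "at_risk": 0.8},
}
# ... and probability of each observation given the underlying state.
likelihoods = {
    "map_normal": {"stable": 0.9, "at_risk": 0.3},
    "map_low":    {"stable": 0.1, "at_risk": 0.7},
}

belief = {"stable": 0.95, "at_risk": 0.05}  # prior belief
history = []
for observation in ["map_normal", "map_low", "map_low"]:
    belief = update(predict(belief, transition), likelihoods[observation])
    history.append(belief)
    print(observation, {s: round(p, 3) for s, p in belief.items()})
```

Each pass through the loop yields the posterior probability of every state given all observations so far, which is the quantity a bedside classifier would report.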

A clinically relevant use of Bayesian neural networks to predict a change in patient state is the Avert-IT project,97 which is being undertaken by the BrainIT European neurocritical care informatics consortium. Avert-IT seeks to use physiological, demographic and clinical data from patients with TBI across multiple centers to create a prediction index for the subsequent occurrence of hypotension. Rather than using a single parameter, this concept might be considered as analogous to clinical intuition, in which a patient is identified as at risk for transition from one state (stable) to another state (hypotensive and at risk of secondary brain injury).

Conclusions

Multimodal monitoring of physiological parameters related to pressure, flow and metabolism is now routinely used in neurocritical care for detection and management of secondary brain injury. Despite major advances over the past decades in the ability to monitor the brain, clinicians archive and analyze these data in much the same way as in the 1960s. The availability of high-resolution data-acquisition tools and the success of advanced informatics in other areas of medicine, such as epidemiology, genetics and pharmacology, have led to interest in the development of neurocritical care bioinformatics as a way to provide new insights into the complex physiological relationships in patients with acute brain injury. This emerging field will require a coordinated effort involving clinicians, engineers, computer scientists, and experts in informatics and complex systems analysis, as well as industry, to develop tools that can be used to improve data visualization and provide real-time, user-friendly advanced data analysis that can be applied clinically at the bedside of patients in the neurocritical care unit.

Review criteria

Articles were considered for inclusion in this article on the basis of a search of the PubMed database using the terms “neurocritical care informatics” and “multimodal monitoring” through to April 2011. Only full-text articles in English were considered. Given the early stage of development of this area, articles were also considered on the basis of the authors' personal knowledge of the field.

References

Gawande, A. The checklist. The New Yorker (10 Dec 2007).

Ropper, A. H. Neurological intensive care. Ann. Neurol. 32, 564–569 (1992).

Andrews, P. J. Critical care management of acute ischemic stroke. Curr. Opin. Crit. Care 10, 110–115 (2004).

Chesnut, R. M. et al. The role of secondary brain injury in determining outcome from severe head injury. J. Trauma 34, 216–222 (1993).

Diedler, J. & Czosnyka, M. Merits and pitfalls of multimodality brain monitoring. Neurocrit. Care 12, 313–316 (2010).

Stuart, R. M. et al. Intracranial multimodal monitoring for acute brain injury: a single institution review of current practices. Neurocrit. Care 12, 188–198 (2010).

Wartenberg, K. E., Schmidt, J. M. & Mayer, S. A. Multimodality monitoring in neurocritical care. Crit. Care Clin. 23, 507–538 (2007).

Chambers, I. R. et al. BrainIT: a trans-national head injury monitoring research network. Acta Neurochir. Suppl. 96, 7–10 (2006).

Sorani, M. D., Hemphill, J. C. 3rd, Morabito, D., Rosenthal, G. & Manley, G. T. New approaches to physiological informatics in neurocritical care. Neurocrit. Care 7, 45–52 (2007).

Fahy, B. G. & Sivaraman, V. Current concepts in neurocritical care. Anesthesiol. Clin. North America 20, 441–462 (2002).

Badjatia, N. Hyperthermia and fever control in brain injury. Crit. Care Med. 37, S250–S257 (2009).

Van den Berghe, G., Schoonheydt, K., Becx, P., Bruyninckx, F. & Wouters, P. J. Insulin therapy protects the central and peripheral nervous system of intensive care patients. Neurology 64, 1348–1353 (2005).

Forsyth, R. J., Wolny, S. & Rodrigues, B. Routine intracranial pressure monitoring in acute coma. Cochrane Database of Systematic Reviews, Issue 2. Art. No.: CD002043. doi:10.1002/14651858.CD002043.pub2 (2010).

Bratton, S. L. et al. Guidelines for the management of severe traumatic brain injury. VIII. Intracranial pressure thresholds. J. Neurotrauma 24 (Suppl. 1), S55–S58 (2007).

Bratton, S. L. et al. Guidelines for the management of severe traumatic brain injury. VII. Intracranial pressure monitoring technology. J. Neurotrauma 24 (Suppl. 1), S45–S54 (2007).

Morgenstern, L. B. et al. Guidelines for the management of spontaneous intracerebral hemorrhage: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke 41, 2108–2129 (2010).

Andrews, P. J. & Citerio, G. Intracranial pressure. Part one: historical overview and basic concepts. Intensive Care Med. 30, 1730–1733 (2004).

Citerio, G. & Andrews, P. J. Intracranial pressure. Part two: clinical applications and technology. Intensive Care Med. 30, 1882–1885 (2004).

Martinez-Manas, R. M., Santamarta, D., de Campos, J. M. & Ferrer, E. Camino intracranial pressure monitor: prospective study of accuracy and complications. J. Neurol. Neurosurg. Psychiatry 69, 82–86 (2000).

Munch, E., Weigel, R., Schmiedek, P. & Schurer, L. The Camino intracranial pressure device in clinical practice: reliability, handling characteristics and complications. Acta Neurochir (Wien) 140, 1113–1119 (1998).

Bratton, S. L. et al. Guidelines for the management of severe traumatic brain injury. IX. Cerebral perfusion thresholds. J. Neurotrauma 24 (Suppl. 1), S59–S64 (2007).

Rosner, M. J., Rosner, S. D. & Johnson, A. H. Cerebral perfusion pressure: management protocol and clinical results. J. Neurosurg. 83, 949–962 (1995).

Robertson, C. S. et al. Prevention of secondary ischemic insults after severe head injury. Crit. Care Med. 27, 2086–2095 (1999).

Andrews, P. J. Cerebral perfusion pressure and brain ischaemia: can one size fit all? Crit. Care 9, 638–639 (2005).

Howells, T. et al. Pressure reactivity as a guide in the treatment of cerebral perfusion pressure in patients with brain trauma. J. Neurosurg. 102, 311–317 (2005).

Rose, J. C., Neill, T. A. & Hemphill, J. C. 3rd. Continuous monitoring of the microcirculation in neurocritical care: an update on brain tissue oxygenation. Curr. Opin. Crit. Care 12, 97–102 (2006).

Hemphill, J. C. 3rd, Morabito, D., Farrant, M. & Manley, G. T. Brain tissue oxygen monitoring in intracerebral hemorrhage. Neurocrit. Care 3, 260–270 (2005).

Rumana, C. S., Gopinath, S. P., Uzura, M., Valadka, A. B. & Robertson, C. S. Brain temperature exceeds systemic temperature in head-injured patients. Crit. Care Med. 26, 562–567 (1998).

van den Brink, W. A. et al. Brain oxygen tension in severe head injury. Neurosurgery 46, 868–876 (2000).

Nakagawa, K. et al. The effect of decompressive hemicraniectomy on brain temperature after severe brain injury. Neurocrit. Care doi:10.1007/s12028-010-9446-y.

Carter, L. P., Weinand, M. E. & Oommen, K. J. Cerebral blood flow (CBF) monitoring in intensive care by thermal diffusion. Acta Neurochir. Suppl. (Wien) 59, 43–46 (1993).

Sioutos, P. J. et al. Continuous regional cerebral cortical blood flow monitoring in head-injured patients. Neurosurgery 36, 943–949 (1995).

Vajkoczy, P., Horn, P., Thome, C., Munch, E. & Schmiedek, P. Regional cerebral blood flow monitoring in the diagnosis of delayed ischemia following aneurysmal subarachnoid hemorrhage. J. Neurosurg. 98, 1227–1234 (2003).

Thome, C. et al. Continuous monitoring of regional cerebral blood flow during temporary arterial occlusion in aneurysm surgery. J. Neurosurg. 95, 402–411 (2001).

Vajkoczy, P. et al. Effect of intra-arterial papaverine on regional cerebral blood flow in hemodynamically relevant cerebral vasospasm. Stroke 32, 498–505 (2001).

Robertson, C. S. et al. SjvO2 monitoring in head-injured patients. J. Neurotrauma 12, 891–896 (1995).

Macmillan, C. S., Andrews, P. J. & Easton, V. J. Increased jugular bulb saturation is associated with poor outcome in traumatic brain injury. J. Neurol. Neurosurg. Psychiatry 70, 101–104 (2001).

Goodman, J. C. & Robertson, C. S. Microdialysis: is it ready for prime time? Curr. Opin. Crit. Care 15, 110–117 (2009).

Marcoux, J. et al. Persistent metabolic crisis as measured by elevated cerebral microdialysis lactate-pyruvate ratio predicts chronic frontal lobe brain atrophy after traumatic brain injury. Crit. Care Med. 36, 2871–2877 (2008).

Bellander, B. M. et al. Consensus meeting on microdialysis in neurointensive care. Intensive Care Med. 30, 2166–2169 (2004).

Siggaard-Andersen, O., Ulrich, A. & Gothgen, I. H. Classes of tissue hypoxia. Acta Anaesthesiol. Scand. Suppl. 107, 137–142 (1995).

Hutchinson, P. J. et al. Inflammation in human brain injury: intracerebral concentrations of IL-1α, IL-1β, and their endogenous inhibitor IL-1ra. J. Neurotrauma 24, 1545–1557 (2007).

Andrews, P. J. et al. NICEM consensus on neurological monitoring in acute neurological disease. Intensive Care Med. 34, 1362–1370 (2008).

Stuart, R. M. et al. Intracortical EEG for the detection of vasospasm in patients with poor-grade subarachnoid hemorrhage. Neurocrit. Care 13, 355–358 (2010).

Claassen, J. et al. Prognostic significance of continuous EEG monitoring in patients with poor-grade subarachnoid hemorrhage. Neurocrit. Care 4, 103–112 (2006).

Claassen, J. et al. Quantitative continuous EEG for detecting delayed cerebral ischemia in patients with poor-grade subarachnoid hemorrhage. Clin. Neurophysiol. 115, 2699–2710 (2004).

Fountas, K. N. et al. Clinical implications of quantitative infrared pupillometry in neurosurgical patients. Neurocrit. Care 5, 55–60 (2006).

Kim, M. N. et al. Noninvasive measurement of cerebral blood flow and blood oxygenation using near-infrared and diffuse correlation spectroscopies in critically brain-injured adults. Neurocrit. Care 12, 173–180 (2010).

De Georgia, M. A. & Deogaonkar, A. Multimodal monitoring in the neurological intensive care unit. Neurologist 11, 45–54 (2005).

Buchman, T. G. Computers in the intensive care unit: promises yet to be fulfilled. J. Intensive Care Med. 10, 234–240 (1995).

Kumar, S. & Aldrich, K. Overcoming barriers to electronic medical record (EMR) implementation in the US healthcare system: A comparative study. Health Informatics J. 16, 306–318 (2010).

Ali, T. Electronic medical record and quality of patient care in the VA. Med. Health R. I. 93, 8–10 (2010).

Burykin, A. et al. Toward optimal display of physiologic status in critical care: I. Recreating bedside displays from archived physiologic data. J. Crit. Care 26, 105.e1–105.e9 (2010).

Goldstein, B. et al. Physiologic data acquisition system and database for the study of disease dynamics in the intensive care unit. Crit. Care Med. 31, 433–441 (2003).

ASTM subcommittee F29.21. ASTM Standard F2761–09 Medical devices and medical systems: essential safety requirements for equipment comprising the patient-centric integrated clinical environment (ICE). Part 1: general requirements and conceptual model. ASTM International [online] (2009).

Otero, A., Felix, P., Barro, S. & Palacios, F. Addressing the flaws of current critical alarms: a fuzzy constraint satisfaction approach. Artif. Intell. Med. 47, 219–238 (2009).

Smielewski, P. et al. ICM+: software for on-line analysis of bedside monitoring data after severe head trauma. Acta Neurochir. Suppl. 95, 43–49 (2005).

Gomez, H. et al. Development of a multimodal monitoring platform for medical research. Conf. Proc. IEEE Eng. Med. Biol. Soc. 1, 2358–2361 (2010).

Saeed, M. et al. Multiparameter Intelligent Monitoring in Intensive Care II (MIMIC-II): a public-access intensive care unit database. Crit. Care Med. 39, 952–960 (2011).

Chambers, I. et al. BrainIT collaborative network: analyses from a high time-resolution dataset of head injured patients. Acta Neurochir. Suppl. 102, 223–237 (2008).

Piper, I. et al. The brain monitoring with Information Technology (BrainIT) collaborative network: EC feasibility study results and future direction. Acta Neurochir (Wien) 152, 1859–1871 (2010).

Diringer, M. N. Treatment of fever in the neurologic intensive care unit with a catheter-based heat exchange system. Crit. Care Med. 32, 559–564 (2004).

Diedler, J. et al. Impaired cerebral vasomotor activity in spontaneous intracerebral hemorrhage. Stroke 40, 815–819 (2009).

Steiner, L. A. et al. Continuous monitoring of cerebrovascular pressure reactivity allows determination of optimal cerebral perfusion pressure in patients with traumatic brain injury. Crit. Care Med. 30, 733–738 (2002).

Buchman, T. G. The digital patient: predicting physiologic dynamics with mathematical models. Crit. Care Med. 37, 1167–1168 (2009).

McQuatt, A., Sleeman, D., Andrews, P. J., Corruble, V. & Jones, P. A. Discussing anomalous situations using decision trees: a head injury case study. Methods Inf. Med. 40, 373–379 (2001).

Andrews, P. J. et al. Predicting recovery in patients suffering from traumatic brain injury by using admission variables and physiological data: a comparison between decision tree analysis and logistic regression. J. Neurosurg. 97, 326–336 (2002).

Vath, A., Meixensberger, J., Dings, J., Meinhardt, M. & Roosen, K. Prognostic significance of advanced neuromonitoring after traumatic brain injury using neural networks. Zentralbl. Neurochir. 61, 2–6 (2000).

Nelson, D. W. et al. Cerebral microdialysis of patients with severe traumatic brain injury exhibits highly individualistic patterns as visualized by cluster analysis with self-organizing maps. Crit. Care Med. 32, 2428–2436 (2004).

Cohen, M. J. et al. Identification of complex metabolic states in critically injured patients using bioinformatic cluster analysis. Crit. Care 14, R10 (2010).

Goldberger, A. L. in Applied Chaos (eds Kim, J. H. & Stringer, J.) 321–331 (Wiley-Interscience, New York, 1992).

Kleiger, R. E., Miller, J. P., Bigger, J. T. Jr & Moss, A. J. Decreased heart rate variability and its association with increased mortality after acute myocardial infarction. Am. J. Cardiol. 59, 256–262 (1987).

Szabo, B. M. et al. Prognostic value of heart rate variability in chronic congestive heart failure secondary to idiopathic or ischemic dilated cardiomyopathy. Am. J. Cardiol. 79, 978–980 (1997).

Kirkness, C. J., Burr, R. L. & Mitchell, P. H. Intracranial pressure variability and long-term outcome following traumatic brain injury. Acta Neurochir. Suppl. 102, 105–108 (2008).

Triedman, J. K., Cohen, R. J. & Saul, J. P. Mild hypovolemic stress alters autonomic modulation of heart rate. Hypertension 21, 236–247 (1993).

Mussalo, H. et al. Heart rate variability and its determinants in patients with severe or mild essential hypertension. Clin. Physiol. 21, 594–604 (2001).

van Boven, A. J. et al. Depressed heart rate variability is associated with events in patients with stable coronary artery disease and preserved left ventricular function. REGRESS Study Group. Am. Heart J. 135, 571–576 (1998).

Axelrod, S., Lishner, M., Oz, O., Bernheim, J. & Ravid, M. Spectral analysis of fluctuations in heart rate: an objective evaluation of autonomic nervous control in chronic renal failure. Nephron 45, 202–206 (1987).

Toweill, D. L. et al. Linear and nonlinear analysis of heart rate variability during propofol anesthesia for short-duration procedures in children. Pediatr. Crit. Care Med. 4, 308–314 (2003).

Ryan, S. M., Goldberger, A. L., Pincus, S. M., Mietus, J. & Lipsitz, L. A. Gender- and age-related differences in heart rate dynamics: are women more complex than men? J. Am. Coll. Cardiol. 24, 1700–1707 (1994).

Vikman, S. et al. Altered complexity and correlation properties of R–R interval dynamics before the spontaneous onset of paroxysmal atrial fibrillation. Circulation 100, 2079–2084 (1999).

Hornero, R., Aboy, M., Abasolo, D., McNames, J. & Goldstein, B. Interpretation of approximate entropy: analysis of intracranial pressure approximate entropy during acute intracranial hypertension. IEEE Trans. Biomed. Eng. 52, 1671–1680 (2005).

Papaioannou, V. E., Maglaveras, N., Houvarda, I., Antoniadou, E. & Vretzakis, G. Investigation of altered heart rate variability, nonlinear properties of heart rate signals, and organ dysfunction longitudinally over time in intensive care unit patients. J. Crit. Care 21, 95–103 (2006).

Richman, J. S. & Moorman, J. R. Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. Heart Circ. Physiol. 278, H2039–H2049 (2000).

Lake, D. E., Richman, J. S., Griffin, M. P. & Moorman, J. R. Sample entropy analysis of neonatal heart rate variability. Am. J. Physiol. Regul. Integr. Comp. Physiol. 283, R789–R797 (2002).

Burr, R. L., Kirkness, C. J. & Mitchell, P. H. Detrended fluctuation analysis of intracranial pressure predicts outcome following traumatic brain injury. IEEE Trans. Biomed. Eng. 55, 2509–2518 (2008).

Buchman, T. G. Nonlinear dynamics, complex systems, and the pathobiology of critical illness. Curr. Opin. Crit. Care 10, 378–382 (2004).

Buchman, T. G. Novel representation of physiologic states during critical illness and recovery. Crit. Care 14, 127 (2010).

Buchman, T. G. Physiologic stability and physiologic state. J. Trauma 41, 599–605 (1996).

Ursino, M., Lodi, C. A., Rossi, S. & Stocchetti, N. Estimation of the main factors affecting ICP dynamics by mathematical analysis of PVI tests. Acta Neurochir. Suppl. 71, 306–309 (1998).

Godin, P. J. & Buchman, T. G. Uncoupling of biological oscillators: a complementary hypothesis concerning the pathogenesis of multiple organ dysfunction syndrome. Crit. Care Med. 24, 1107–1116 (1996).

Coveney, P. V. & Fowler, P. W. Modelling biological complexity: a physical scientist's perspective. J. R. Soc. Interface 2, 267–280 (2005).

Jacono, F. F., DeGeorgia, M. A., Wilson, C. G., Dick, T. E. & Loparo, K. A. Data acquisition and complex systems analysis in critical care: developing the intensive care unit of the future. J. Healthcare Eng. 1, 337–356 (2010).

Peelen, L. et al. Using hierarchical dynamic Bayesian networks to investigate dynamics of organ failure in patients in the Intensive Care Unit. J. Biomed. Inform. 43, 273–286 (2010).

Tatsuoka, C. Data analytic methods for latent partially ordered classification models. Appl. Statist. 51, 337–350 (2002).

Zenker, S., Rubin, J. & Clermont, G. From inverse problems in mathematical physiology to quantitative differential diagnoses. PLoS Comput. Biol. 3, e204 (2007).

AVERT-IT project. Avert-IT [online] (2011).

Acknowledgements

J. C. Hemphill is funded in part by grant U10 NS058931 from the US NIH. P. Andrews has received funding from the European Society of Intensive Care Medicine for the Eurotherm3235 Trial.

L. Barclay, freelance writer and reviewer, is the author of and is solely responsible for the content of the learning objectives, questions and answers of the Medscape, LLC-accredited continuing medical education activity associated with this article.

Author information

Contributions

All authors contributed equally to researching, discussing, writing, reviewing and editing this manuscript.

Ethics declarations

Competing interests

J. C. Hemphill has acted as a consultant for, and holds shares of stock for, Ornim. M. De Georgia has acted as a consultant for Orsan Medical Technologies. P. Andrews declares no competing interests.

About this article

Cite this article

Hemphill, J., Andrews, P. & De Georgia, M. Multimodal monitoring and neurocritical care bioinformatics. Nat Rev Neurol 7, 451–460 (2011). https://doi.org/10.1038/nrneurol.2011.101

DOI: https://doi.org/10.1038/nrneurol.2011.101
