Abstract

Myelosuppression that occurs during chemotherapy has been reported to be a predictor of better survival in patients with breast or lung carcinomas. We evaluated the prognostic implications of chemotherapy-induced neutropenia in advanced gastric carcinoma. Data from a prospective survey of oral fluoropyrimidine S-1 for advanced gastric cancer patients in Japan were reviewed. We identified 1055 untreated patients with adequate baseline bone marrow function. During treatment with S-1, a total of 293 (28%) patients experienced grade 1 or higher neutropenia. The adjusted hazard ratio of death for the presence of neutropenia, as compared with the absence of such toxicity, from a multivariate Cox model was 0.72 (95% confidence interval, 0.54–0.95; P=0.0189) for grade 1 neutropenia, 0.63 (0.50–0.78; P<0.0001) for grade 2 neutropenia and 0.71 (0.51–0.98; P=0.0388) for grade 3–4 neutropenia. These findings suggest that the occurrence of neutropenia during chemotherapy is an independent predictor of increased survival in patients with advanced gastric cancer, whereas the absence of such toxicity indicates that the dosages of drugs are not pharmacologically adequate. Monitoring of neutropenia in patients who receive chemotherapy may contribute to improved drug efficacy and favourable survival.

Main

The dose intensity is recognised as a key element in a patient’s response to cytotoxic drugs. In general, it is considered that the higher the dose intensity, the greater the chance that the optimal dose is approached (Frei and Canellos, 1980). Neutropenia is one of the most important dose-limiting toxicities of cytotoxic drugs, often necessitating a reduction from the initial dosage (Crawford et al, 2004). Therefore, many oncologists may consider that the absence of haematological toxicity will raise the hope of an adequate tumour response without myelosuppression. Since the late 1990s, however, several studies have reported that neutropenia or leucopenia occurring during chemotherapy is a sign of the patient’s response to adjuvant chemotherapy in women with breast cancer; patients who experience these haematological toxic effects survive significantly longer (Saarto et al, 1997; Poikonen et al, 1999; Mayers et al, 2001; Cameron et al, 2003). A recent study by Di Maio et al (2005) confirmed the positive correlation between chemotherapy-induced neutropenia and increased survival in a pooled analysis of three randomised trials that included a total of 1265 patients with advanced non-small-cell lung cancer (NSCLC). Consideration of these findings led us to review data from a prospective survey of Japanese patients with advanced gastric cancer, and to investigate the association between neutropenia occurring during chemotherapy and patient survival. Our goal was to provide initial evidence, through a rigorous statistical analysis of a large series of patients with advanced gastric cancer, on the utility of the neutrophil count as a surrogate indicator of drug efficacy.

Materials and methods

Patients

The subjects of this study were identified from among the patients participating in a nationwide survey of oral fluoropyrimidine derivative S-1 (TS-1®; Taiho Pharmaceutical Co. Ltd, Tokyo, Japan) in Japan (Shirasaka et al, 1996). This survey was prospectively conducted by the manufacturer to obtain safety data of the drug, and all patients in Japan scheduled for S-1 administration were centrally registered from March 1999 through March 2000. The protocol of this survey was approved by all participating centres, and informed consent was obtained from all the patients prior to participation in this study.

A total of 3758 patients with advanced gastric cancer were enrolled at more than 700 centres. We identified 1055 subjects who met the following criteria: age less than 80 years, Eastern Cooperative Oncology Group (ECOG) performance status (PS) of 0 or 1, sufficient bone marrow function (neutrophils ⩾2.0 × 10⁹ l⁻¹, leucocytes ⩾4.0 and ⩽12.0 × 10⁹ l⁻¹, platelets ⩾100 × 10⁹ l⁻¹ and haemoglobin ⩾9.5 g dl⁻¹) and no history of chemotherapy within 6 months before the commencement of S-1 treatment. The patients were followed up until March 2002 to obtain survival information.

Treatment delivery

S-1 was to be taken twice a day orally after meals. The initial dose was assigned based on the body surface area (BSA) as follows: BSA <1.25 m², 40 mg (80 mg day⁻¹); BSA ⩾1.25 and <1.50 m², 50 mg (100 mg day⁻¹); BSA ⩾1.50 m², 60 mg (120 mg day⁻¹). A single therapy cycle consisted of S-1 monotherapy for 28 consecutive days, followed by 14 days of no treatment. This schedule was repeated every 6 weeks unless the disease progressed or unacceptable adverse effects occurred. The dose modification scheme followed that in the previous trials of S-1 (Sakata et al, 1998; Koizumi et al, 2000); when haematological adverse reactions at grade 3–4 or non-haematological reactions at grade 2–4 appeared, the dose was reduced from 120 to 100 mg day⁻¹ and from 100 to 80 mg day⁻¹, respectively, or administration was temporarily discontinued.
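
The BSA bands described above can be written as a small lookup. The following Python sketch is only an illustration of the stated bands, not clinical software; the function name `s1_initial_dose_mg` is hypothetical and does not come from the survey protocol.

```python
def s1_initial_dose_mg(bsa_m2: float) -> tuple[int, int]:
    """Return (mg per administration, mg per day) for a BSA given in m^2.

    Bands follow the text: <1.25 -> 40 mg, 1.25 to <1.50 -> 50 mg,
    >=1.50 -> 60 mg, each taken twice a day.
    """
    if bsa_m2 <= 0:
        raise ValueError("BSA must be positive")
    if bsa_m2 < 1.25:
        per_dose = 40
    elif bsa_m2 < 1.50:
        per_dose = 50
    else:
        per_dose = 60
    # S-1 is taken twice a day, so the daily dose is double the single dose.
    return per_dose, 2 * per_dose
```

For instance, a BSA of 1.40 m² falls in the middle band, giving 50 mg per administration and 100 mg per day.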

The dose intensity was calculated as the total dose administered divided by the duration of time over which it was given. The relative dose intensity (RDI) was then calculated as the ratio of the actual dose intensity to the ideal value if planned doses were all given on schedule (Hryniuk and Levine, 1986).
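
As a worked illustration of these two definitions, the sketch below computes dose intensity and RDI for a 28-days-on, 14-days-off schedule. It assumes that the ideal value is the planned daily dose delivered for 28 of every 42 days over the planned number of cycles; how the authors counted the actual duration is not stated, so the argument `actual_days` and both function names are hypothetical.

```python
def dose_intensity(total_dose_mg: float, duration_days: float) -> float:
    """Total dose administered divided by the time over which it was given."""
    if duration_days <= 0:
        raise ValueError("duration must be positive")
    return total_dose_mg / duration_days


def relative_dose_intensity(actual_total_mg: float, actual_days: float,
                            planned_daily_mg: float, n_cycles: int,
                            on_days: int = 28, cycle_days: int = 42) -> float:
    """Ratio of the actual dose intensity to the ideal (planned) one."""
    # Ideal delivery: planned daily dose on every treatment day of every cycle.
    planned_total = planned_daily_mg * on_days * n_cycles
    planned_days = cycle_days * n_cycles
    return (dose_intensity(actual_total_mg, actual_days)
            / dose_intensity(planned_total, planned_days))
```

Under these assumptions, a patient planned for 120 mg day⁻¹ over two cycles who actually receives half the planned total over the same 84 days has an RDI of 0.5, and delays that stretch the duration lower the RDI further.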

Study endpoint and assessment of haematological toxicity

The study endpoint was overall survival. This was defined as the interval between the date of the beginning of S-1 treatment and the date of death or last follow-up. Haematological toxicity, including neutropenia, leucopenia, thrombocytopenia and decreased haemoglobin level, was graded according to the National Cancer Institute Common Terminology Criteria for Adverse Events (NCI-CTCAE), version 3. The central data centre conducted onsite monitoring weekly during the first cycle and biweekly during and after the second cycle in order to confirm results of laboratory tests. The data centre collected and managed all the data regarding blood cell counts and grades of haematological toxicity.
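
For readers unfamiliar with the grading, the sketch below maps an absolute neutrophil count to a grade using the commonly cited CTCAE version 3 cut-offs (grade 2 below 1.5, grade 3 below 1.0 and grade 4 below 0.5, all × 10⁹ l⁻¹). Grade 1 depends on the laboratory's lower limit of normal; the default `lln=2.0` is an assumption chosen here to match the eligibility floor and is not taken from the survey. The function names are hypothetical.

```python
from typing import Iterable


def neutropenia_grade(anc: float, lln: float = 2.0) -> int:
    """Grade a neutrophil count (in 10^9 per litre); lln is an assumed limit."""
    if anc < 0:
        raise ValueError("neutrophil count cannot be negative")
    if anc < 0.5:
        return 4
    if anc < 1.0:
        return 3
    if anc < 1.5:
        return 2
    if anc < lln:
        return 1
    return 0


def worst_grade(counts: Iterable[float], lln: float = 2.0) -> int:
    """Worst (highest) grade over a series of counts; 0 if there are none."""
    return max((neutropenia_grade(c, lln) for c in counts), default=0)
```

With these cut-offs, a series of counts 2.4, 1.4 and 1.9 has a worst grade of 2.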

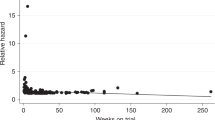

Statistical methods

In order to evaluate the prognostic implications of chemotherapy-induced neutropenia, we first identified the worst grade of neutropenia during treatment with S-1 for each patient. Owing to the size of the study group, we classified neutropenia into four categories: absent (grade 0), mild (grade 1), moderate (grade 2) and severe (grade 3–4). The survival curves of the four categories were estimated by the Kaplan–Meier method and compared by the log-rank test. This approach, considering the occurrence of myelosuppression during chemotherapy to be a baseline feature, has been used in many studies (Saarto et al, 1997; Poikonen et al, 1999; Cameron et al, 2003; Nakata et al, 2006) and is appropriate in an adjuvant setting, as the number of patients who die during the chemotherapy treatment period is usually negligible. In advanced cancer, however, considering myelosuppression to be a baseline feature can lead to a large bias, because patients with poorer outcomes receive fewer cycles of chemotherapy and consequently have a lower chance of developing neutropenia. In other words, patients who have better outcomes can receive many cycles of treatment, thus resulting in a higher incidence of chemotherapy-induced neutropenia. This can produce a false-positive association between chemotherapy-induced neutropenia and increased survival. To avoid this problem, a landmark analysis is sometimes employed, in which the study is limited to patients who survive for at least a certain specified time period after the initiation of treatment, with neutropenia during that period considered as a baseline feature (Di Maio et al, 2005). However, such a landmark analysis would discard information regarding deaths occurring ‘before the landmark’, which could bias patient selection (Green et al, 2002). Further, our protocol required that treatment with S-1 would continue until the occurrence of disease progression or unacceptable toxicity. Consequently, a certain number of patients continued the treatment for a prolonged period and experienced the late onset of neutropenia. A landmark analysis would easily be biased by neutropenia occurring ‘after the landmark’, and would thus be unsuitable for our study.

Instead, we treated chemotherapy-induced neutropenia as a time-dependent variable; for each patient, the worst grade of neutropenia occurring between the beginning of S-1 treatment and time T>0 was defined as the value of the variable at T. The variable value for each patient could change over time according to the worst grade of neutropenia experienced by that time. To quantify the impact of time-dependent neutropenia on survival, a Cox regression model was used to estimate the hazard ratio (HR) of death (Hosmer and Lemeshow, 1999). We also considered a multivariate Cox model that included other clinical features to obtain an adjusted HR and to examine the independent prognostic role of chemotherapy-induced neutropenia. A method by Simon and Makuch (1984) was used for a graphical representation of the survival curves according to the worst grade of neutropenia, which was considered to be a time-dependent variable; this method accounted for patients transferring from one group to another. All reported P values of statistical tests are two-tailed and P<0.05 was taken to be statistically significant. All analyses were performed using SAS (version 9.13) or S-PLUS (version 6.2).
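
To make the time-dependent definition concrete, the sketch below splits one patient's follow-up into intervals over which the worst grade so far is constant, the start/stop layout that Cox software accepting time-dependent covariates typically expects. This is an illustration only, not the authors' SAS or S-PLUS code; the function name and the tuple layout `(start, stop, worst_grade, event)` are assumptions.

```python
from typing import List, Tuple


def counting_process_rows(observations: List[Tuple[int, int]],
                          follow_up_days: int,
                          died: bool) -> List[Tuple[int, int, int, int]]:
    """Split (0, follow_up_days] into intervals of constant worst grade.

    observations: (day since start of S-1, neutropenia grade) pairs.
    Returns rows (start, stop, worst_grade, event); only the last row can
    carry the death indicator.
    """
    rows = []
    start, current = 0, 0  # every patient begins follow-up at grade 0
    for day, grade in sorted(observations):
        if day >= follow_up_days:
            break  # nothing observed at or after the end of follow-up counts
        if grade > current:  # the running worst grade can only increase
            if day > start:
                rows.append((start, day, current, 0))
            start, current = day, grade
    rows.append((start, follow_up_days, current, 1 if died else 0))
    return rows
```

For a patient graded 1 on day 14, 2 on day 56 and 1 on day 70 who died on day 300, this yields (0, 14, 0, 0), (14, 56, 1, 0) and (56, 300, 2, 1): the patient contributes person-time to the grade 0, grade 1 and grade 2 groups in turn, which is how the approach avoids crediting early survival time to a neutropenia grade that had not yet occurred.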

Results

Incidence of neutropenia

Table 1 shows the worst grade of neutropenia according to the treatment cycle of chemotherapy in the study subjects (n=1055). Cumulatively, a total of 637 cases of neutropenia (grades 1–4) were reported; among these, 206 cases were grade 1, 328 cases were grade 2, 91 cases were grade 3 and 12 cases were grade 4 (Table 1). The study protocol did not allow the prophylactic use of granulocyte-colony-stimulating factor (G-CSF), and no such use was reported. The results were therefore not biased regarding the use of G-CSF.

Survival data according to the worst grade of neutropenia

The demographics and clinical/haematological characteristics of the study population are summarised in the leftmost column of Table 2. On the whole, patient demographics such as age, gender or BSA were very similar to those observed in the previous trials of S-1 (Sakata et al, 1998; Koizumi et al, 2000). The median values of the baseline leucocyte count and of the baseline neutrophil count were 6.3 × 10⁹ l⁻¹ (range, 4.0–12.0) and 4.0 × 10⁹ l⁻¹ (range, 2.0–10.9), respectively. The median RDI was 0.86 (range, 0.11–1.24), indicating that compliance for S-1 treatment was good overall.

A total of 293 patients (28% of the 1055 patients) experienced neutropenia; among them, 73 patients (7%) had grade 1 as the worst grade, 156 patients (15%) had grade 2 and 64 patients (6%) had grade 3 or higher. Of these patients with neutropenia, the worst grade initially occurred during the first cycle in 177 patients, during the second cycle in 57 patients, during the third cycle in 21 patients, during the fourth cycle in 19 patients, during the fifth cycle in 8 patients and during the sixth or subsequent cycles in 11 patients. The characteristics of the patients, according to the worst grade of neutropenia experienced, are shown in Table 2. The dose intensity was lower in the patients with severe neutropenia; the median RDI in the patients with grade 3–4 neutropenia was 0.79 (range, 0.32–1.07), whereas it was 0.86 (range, 0.11–1.24) in the patients with no neutropenia (Table 2).

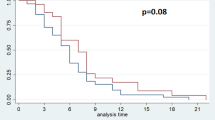

We compared the survival curves for subgroups of patients identified by the worst grade of neutropenia. As stated above, this simple approach may be subject to potential bias, but nevertheless it can be informative for a preliminary analysis. Figure 1 shows the survival curves (A) for all the patients and (B) for subgroups of patients stratified according to the worst grade of neutropenia. A total of 795 deaths (75%) were confirmed, including 767 deaths that were reported as tumour-related. The median follow-up of the 260 surviving patients was 472 days (range, 9–1136 days). The median survival of all the patients was 302 days (95% confidence interval (CI), 270–318 days) with 1- and 2-year survival rates of 40% (95% CI, 37–43%) and 18% (95% CI, 16–21%), respectively (Figure 1A). According to the severity of neutropenia, the median survival was 254 days (95% CI, 239–281 days) for patients with no neutropenia (grade 0), 355 days (95% CI, 309–415 days) for patients with mild neutropenia (grade 1), 459 days (95% CI, 377–559 days) for patients with moderate neutropenia (grade 2) and 480 days (95% CI, 380–552 days) for patients with severe neutropenia (grade 3–4) (Figure 1B). The log-rank test showed that the differences among the four curves were statistically significant (P<0.0001).
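
The survival estimates above rest on the Kaplan–Meier product-limit estimator. A minimal pure-Python sketch of that estimator and of the median read from it is given below for illustration; it uses toy inputs rather than the survey data, and the function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def kaplan_meier(times: Sequence[float],
                 events: Sequence[int]) -> List[Tuple[float, float]]:
    """Return (time, survival probability) at each distinct death time.

    events[i] is 1 for a death and 0 for a censored observation.
    """
    if len(times) != len(events):
        raise ValueError("times and events must have the same length")
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = 0
        n_here = 0
        # Gather everyone whose follow-up ends exactly at time t.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            n_here += 1
            i += 1
        if deaths > 0:
            surv *= 1 - deaths / n_at_risk
            curve.append((t, surv))
        n_at_risk -= n_here  # deaths and censored cases leave the risk set
    return curve


def median_survival(curve: List[Tuple[float, float]]) -> Optional[float]:
    """Smallest time at which estimated survival is 0.5 or less, if reached."""
    for t, s in curve:
        if s <= 0.5:
            return t
    return None
```

With follow-up times 5, 8, 8, 12 and 15 days and death indicators 1, 1, 0, 1 and 0, the estimate steps to 0.8, 0.6 and 0.3 at days 5, 8 and 12, so the median is 12 days.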

Association of survival with the time-dependent neutropenia variable

Table 3 shows the result of a Cox regression analysis for the association between overall survival and the worst grade of neutropenia, which was treated as a time-dependent variable. The HR for mild (grade 1) neutropenia in comparison with no neutropenia (grade 0) was 0.74 (95% CI, 0.56–0.98; P=0.0330), which translated into a 26% lower risk of death. Similarly, the HR for moderate (grade 2) neutropenia in comparison with no neutropenia was 0.55 (95% CI, 0.44–0.68; P<0.0001), which represented a 45% lower risk of death, and the HR for severe (grade 3–4) neutropenia in comparison with no neutropenia was 0.62 (95% CI, 0.44–0.85; P=0.0037), which was a 38% lower risk of death. Figure 2 shows the graphical representation of survival curves according to the worst grade of neutropenia in a time-dependent manner, allowing patients to be transferred from one group to another (Simon and Makuch, 1984; Richardson et al, 2003).
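
The percentage reductions quoted above follow directly from the hazard ratios as (1 − HR) × 100. The sketch below reproduces that arithmetic and adds a rough consistency check that recovers an approximate Wald P value from a reported 95% CI; the check assumes the interval is symmetric on the log scale, and because the published figures are rounded it will only approximate the reported P values. Both function names are hypothetical.

```python
import math


def percent_lower_risk(hr: float) -> float:
    """Relative reduction in the hazard of death implied by a hazard ratio."""
    return (1 - hr) * 100


def approx_p_from_ci(hr: float, lower: float, upper: float,
                     z_crit: float = 1.96) -> float:
    """Approximate two-sided Wald P value recovered from a 95% CI."""
    # Standard error of log(HR), assuming a CI symmetric on the log scale.
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(hr) / se
    # Two-sided normal tail probability: 2 * (1 - Phi(|z|)).
    return math.erfc(abs(z) / math.sqrt(2))
```

For example, `percent_lower_risk(0.55)` gives approximately 45, matching the figure quoted for moderate neutropenia.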

A multivariate Cox regression model including other clinical features (age, gender, BSA, ECOG PS, disease status, liver metastasis and leucocyte count) also demonstrated that any grade of neutropenia was independently associated with a better survival (Table 4). The adjusted HRs for mild, moderate and severe neutropenia in comparison with no neutropenia were 0.72 (95% CI, 0.54–0.95; P=0.0189), 0.63 (95% CI, 0.50–0.78; P<0.0001) and 0.71 (95% CI, 0.51–0.98; P=0.0388), respectively. Therefore, patients who experienced neutropenia had a more favourable prognosis, and the presence of moderate neutropenia suggested a higher efficacy of the drug than did the presence of either mild neutropenia or no neutropenia.

It is notable that patients with a leucocyte count ⩾9.0 × 10⁹ l⁻¹ had a significantly poorer survival when compared with those with a leucocyte count <9.0 × 10⁹ l⁻¹ (HR=1.46; 95% CI, 1.19–1.80; P=0.0004; Table 4). Several recent studies have reported that large numbers of leucocytes and their cytokine production correlate well with tumour development and severity (Coussens and Werb, 2002; Balkwill, 2004). It is also often clinically observed in advanced cancer that the neutrophil count rises along with the leucocyte count. This implies that there is a potential link between a high neutrophil count and a poor prognosis, which could lead to a false association between the absence of neutropenia during chemotherapy and unfavourable survival outcomes. However, adjustment for the leucocyte count in the multivariate analysis did not remove the significant association between neutropenia and survival, implying that neutropenia is independently predictive of the prognosis (Table 4).

There was a trend for BSA to be predictive of overall survival (HR=0.87; 95% CI, 0.74–1.02; P=0.0878), although it did not reach significance at the 5% level (Table 4). This in turn suggested that a higher dose might be related to a better outcome in our dose-banding approach for S-1. However, BSA, which is defined by height and weight, showed a very high correlation with weight (Pearson correlation coefficient=0.962, P<0.0001), and it was thus likely that the above trend simply reflected the fact that patients maintaining weight tended to survive longer than those losing it. Hence, weight, which can be reasonably associated with the patient’s general condition, could be a confounding factor in this case.

Finally, we performed a similar analysis for other haematological manifestations of toxicity, including leucopenia, haemoglobin decrease and thrombocytopenia. However, none of these variables remained a significant factor in the multivariate model (data not shown).

Discussion

The objective of this study was to investigate whether chemotherapy-induced neutropenia was related to increased survival and could therefore be used as a surrogate indicator of patient prognosis. Since the late 1990s, several studies have linked myelosuppression induced by adjuvant chemotherapy to a better outcome in patients with breast cancer (Saarto et al, 1997; Poikonen et al, 1999; Mayers et al, 2001; Cameron et al, 2003). Recently, Di Maio et al (2005) reported the first evidence regarding the relationship between chemotherapy-induced neutropenia and longer survival time in patients with an advanced stage of cancer. They analysed the pooled data from three randomised trials of 1265 patients with advanced NSCLC treated with one of five different regimens, and concluded that both mild (grade 1–2) and severe (grade 3–4) neutropenia similarly predicted longer patient survival than did the absence of such toxicity. Nakata et al (2006) examined the prognostic role of neutropenia in a phase I study of 23 patients with advanced gastric cancer receiving S-1 plus cisplatin. These findings prompted us to perform a rigorous quantification of the prognostic value of chemotherapy-induced neutropenia with respect to survival outcomes in patients with advanced gastric cancer. In comparison with previous investigations, an advantage of our study is that a large number of patients received the same treatment with S-1 monotherapy according to the schedule used in previous clinical trials. Further, the survey entailed central data collection and management and frequent site-visit monitoring in order to ensure data quality.

In our study, the association between patients experiencing neutropenia and survival prolongation was considerable. The patients with a grade 1 or greater decrease in their neutrophil count had significantly better survival outcomes than did patients without such toxicity, indicating that chemotherapy-induced neutropenia may provide an index of delivering an optimal dose of chemotherapy that is required for generating an active antitumour effect. Moreover, the median RDI of S-1 in patients with neutropenia was less than that in patients with no neutropenia (Table 2). These results lead us to recognise the possibility that optimal dosing is not necessarily governed by the use of BSA-dosing guidelines; in fact, a poor correlation between BSA and the pharmacokinetics of most cytotoxic agents has been critically pointed out (Gurney, 1996, 2002; Ratain, 1998; Newell, 2002). Several prospective randomised studies have investigated the dose–response relationship in breast carcinoma (Fumoleau et al, 1993; Wood et al, 1994; Fisher et al, 1997) in order to assess the preference for higher doses than those guided by the BSA criteria. If, however, the BSA-based dosing system is not appropriate, then the optimal dose of chemotherapy for individual patients is relatively unrelated to whether the dose is high or low in terms of the BSA-based one.

When oncologists conduct phase II or III clinical trials, they predefine a schedule for dose reduction in the event of myelosuppression. In contrast, the dose is not increased in the absence of such toxicity. Similar guidelines are usually followed in daily clinical practice. This apparent asymmetry results from the presumption that the doses directed by BSA are valid for most, if not all, patients and that patients without toxicity such as myelosuppression will continue to derive substantial benefits from treatment. However, our study and that of others suggest that the absence of neutropenia may actually be a sign of an inadequate dose of chemotherapy (Kvinnsland, 1999). If this is the case, then the fact that more than 70% of patients in our study did not experience neutropenia in their treatment implies that the traditional BSA-based dose is far from optimal in ensuring that the majority of patients receive an effective dose.

Our analysis demonstrated that the patients with neutropenia had a significantly better survival than did the patients without neutropenia, and that severe (grade 3–4) neutropenia did not indicate a better survival than mild (grade 1) or moderate (grade 2) neutropenia. This result is precisely consistent with that obtained for the chemotherapy of NSCLC (Di Maio et al, 2005). In addition, our rigorous statistical approach elucidated that patients with moderate neutropenia reaped greater survival benefits from the treatment than did the patients with mild neutropenia. These findings provide us with a possible approach for fine-tuning of the initial dose of S-1, which is selected according to BSA; unless other severe toxicities are observed, a dose level that decreases the neutrophil count to 1.0–1.5 × 10⁹ l⁻¹ (grade 2) is required for active antitumour effects. This approach would be most beneficial early on in treatment. Indeed, there were 884 (84% of the study population) patients who remained at grade 0–1 neutropenia in the first and second cycles of treatment. Of these, 428 (41%) patients experienced neither moderate/severe haematological toxicity (grade 2–4 leucopenia, haemoglobin decrease or thrombocytopenia) nor any grade of key non-haematological toxicity (grade 1–4 nausea, vomiting, diarrhoea, stomatitis, dermatosis) in the first and second cycles. An early dose increase aimed at moderate neutropenia could have been conducted safely for these patients and thus a significant number of patients were expected to fall under this category and receive more survival benefit from S-1.

On the other hand, a dose increase based on lack of neutropenia in the first and second cycles would clearly be a problem for patients exhibiting severe toxicity later on in treatment. In this sense, an early dose modification does not allow for the development of late toxicity. However, the number of patients who remained at grade 0–1 neutropenia in the first and second cycles but thereafter developed neutropenia or one of the above eight toxicities to a grade 3–4 level was only 54. Thus, an early dose increase would not be a problem for most of the patients targeted in our approach.

In our study, the prognostic impact of neutropenia as a marker for delivering the optimal dose was estimated to be 0.6–0.7 in terms of HR, similar to the survival benefit reported in the NSCLC study (Di Maio et al, 2005). This improvement in outcome would be equivalent to, if not greater than, that generally expected for chemotherapeutic regimens in experimental arms of phase III studies. Thus, the use of chemotherapy-induced neutropenia may prompt the more rational use of available drugs and benefit a large proportion of patients who are currently receiving unintentional under-dosing of cytotoxic chemotherapy. Although it remains unclear why the plateau of dose–response appears in proximity to the dose that produces modest neutropenia, accumulating evidence indicates that the possibility of using toxicity as a guide for tailored dosages deserves more attention for both curable and non-curable treatments.

In conclusion, we confirmed the prognostic implications of chemotherapy-induced neutropenia through the analysis of a large series of advanced gastric cancer patients treated with S-1. Our study suggests that chemotherapy-induced neutropenia can be used to individualise a pharmacologically active dose. Prospective randomised trials to explore safe intrapatient dose escalation with the intent of achieving neutropenia are thus warranted.

Change history

16 November 2011

This paper was modified 12 months after initial publication to switch to Creative Commons licence terms, as noted at publication

References

Balkwill F (2004) Cancer and the chemokine network. Nat Rev Cancer 4: 540–550

Cameron DA, Massie C, Kerr G, Leonard RC (2003) Moderate neutropenia with adjuvant CMF confers improved survival in early breast cancer. Br J Cancer 89: 1837–1842

Coussens LM, Werb Z (2002) Inflammation and cancer. Nature 420: 860–867

Crawford J, Dale DC, Lyman GH (2004) Chemotherapy-induced neutropenia: risks, consequences, and new directions for its management. Cancer 100: 228–237

Di Maio M, Gridelli C, Gallo C, Shepherd F, Piantedosi FV, Cigolari S, Manzione L, Illiano A, Barbera S, Robbiati SF, Frontini L, Piazza E, Ianniello GP, Veltri E, Castiglione F, Rosetti F, Gebbia V, Seymour L, Chiodini P, Perrone F (2005) Chemotherapy-induced neutropenia and treatment efficacy in advanced non-small-cell lung cancer: a pooled analysis of three randomised trials. Lancet Oncol 6: 669–677

Fisher B, Anderson S, Wickerham DL, DeCillis A, Dimitrov N, Mamounas E, Wolmark N, Pugh R, Atkins JN, Meyers FJ, Abramson N, Wolter J, Bornstein RS, Levy L, Romond EH, Caggiano V, Grimaldi M, Jochimsen P, Deckers P (1997) Increased intensification and total dose of cyclophosphamide in a doxorubicin-cyclophosphamide regimen for the treatment of primary breast cancer: findings from National Surgical Adjuvant Breast and Bowel Project B-22. J Clin Oncol 15: 1858–1869

Frei E, Canellos GP (1980) Dose: a critical factor in cancer chemotherapy. Am J Med 69: 585–594

Fumoleau P, Devaux Y, Vo Van ML, Kerbrat P, Fargeot P, Schraub S, Mihura J, Namer M, Mercier M (1993) Premenopausal patients with node-positive resectable breast cancer. Preliminary results of a randomised trial comparing 3 adjuvant regimens: FEC 50 × 6 cycles vs FEC 50 × 3 cycles vs FEC 75 × 3 cycles. The French Adjuvant Study Group. Drugs 45 (Suppl 2): 38–45

Green S, Benedetti J, Crowley J (2002) Clinical Trials in Oncology. Boca Raton: Chapman & Hall/CRC

Gurney H (1996) Dose calculation of anticancer drugs: a review of the current practice and introduction of an alternative. J Clin Oncol 14: 2590–2611

Gurney H (2002) How to calculate the dose of chemotherapy. Br J Cancer 86: 1297–1302

Hosmer D, Lemeshow S (1999) Applied Survival Analysis: Regression Modeling of Time to Event Data. New York: John Wiley & Sons

Hryniuk W, Levine MN (1986) Analysis of dose intensity for adjuvant chemotherapy trials in stage II breast cancer. J Clin Oncol 4: 1162–1170

Koizumi W, Kurihara M, Nakano S, Hasegawa K (2000) Phase II study of S-1, a novel oral derivative of 5-fluorouracil, in advanced gastric cancer. For the S-1 Cooperative Gastric Cancer Study Group. Oncology 58: 191–197

Kvinnsland S (1999) The leucocyte nadir, a predictor of chemotherapy efficacy? Br J Cancer 80: 1681

Mayers C, Panzarella T, Tannock IF (2001) Analysis of the prognostic effects of inclusion in a clinical trial and of myelosuppression on survival after adjuvant chemotherapy for breast carcinoma. Cancer 91: 2246–2257

Nakata B, Tsuji A, Mitachi Y, Yamamitsu S, Hirata K, Takeuchi T, Shirasaka T, Hirakawa K (2006) Moderate neutropenia with S-1 plus low-dose cisplatin may predict a more favourable prognosis in advanced gastric cancer. Clin Oncol 18: 678–683

Newell DR (2002) Getting the right dose in cancer chemotherapy – time to stop using surface area? Br J Cancer 86: 1207–1208

Poikonen P, Saarto T, Lundin J, Joensuu H, Blomqvist C (1999) Leucocyte nadir as a marker for chemotherapy efficacy in node-positive breast cancer treated with adjuvant CMF. Br J Cancer 80: 1763–1766

Ratain MJ (1998) Body-surface area as a basis for dosing of anticancer agents: science, myth, or habit? J Clin Oncol 16: 2297–2298

Richardson PG, Barlogie B, Berenson J, Singhal S, Jagannath S, Irwin D, Rajkumar SV, Srkalovic G, Alsina M, Alexanian R, Siegel D, Orlowski RZ, Kuter D, Limentani SA, Lee S, Hideshima T, Esseltine DL, Kauffman M, Adams J, Schenkein DP, Anderson KC (2003) A phase 2 study of bortezomib in relapsed, refractory myeloma. N Engl J Med 348: 2609–2617

Saarto T, Blomqvist C, Rissanen P, Auvinen A, Elomaa I (1997) Haematological toxicity: a marker of adjuvant chemotherapy efficacy in stage II and III breast cancer. Br J Cancer 75: 301–305

Sakata Y, Ohtsu A, Horikoshi N, Sugimachi K, Mitachi Y, Taguchi T (1998) Late phase II study of novel oral fluoropyrimidine anticancer drug S-1 (1 M tegafur-0.4 M gimestat-1 M otastat potassium) in advanced gastric cancer patients. Eur J Cancer 34: 1715–1720

Shirasaka T, Shimamato Y, Ohshimo H, Yamaguchi M, Kato T, Yonekura K, Fukushima M (1996) Development of a novel form of an oral 5-fluorouracil derivative (S-1) directed to the potentiation of the tumor selective cytotoxicity of 5-fluorouracil by two biochemical modulators. Anticancer Drugs 7: 548–557

Simon R, Makuch RW (1984) A non-parametric graphical representation of the relationship between survival and the occurrence of an event: application to responder vs non-responder bias. Stat Med 3: 35–44

Wood WC, Budman DR, Korzun AH, Cooper MR, Younger J, Hart RD, Moore A, Ellerton JA, Norton L, Ferree CR, Ballow AC, Frei E, Henderson IC (1994) Dose and dose intensity of adjuvant chemotherapy for stage II, node-positive breast carcinoma. N Engl J Med 330: 1253–1259

Rights and permissions

From twelve months after its original publication, this work is licensed under the Creative Commons Attribution-NonCommercial-Share Alike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/

Cite this article

Yamanaka, T., Matsumoto, S., Teramukai, S. et al. Predictive value of chemotherapy-induced neutropenia for the efficacy of oral fluoropyrimidine S-1 in advanced gastric carcinoma. Br J Cancer 97, 37–42 (2007). https://doi.org/10.1038/sj.bjc.6603831

DOI: https://doi.org/10.1038/sj.bjc.6603831
