More Than Meets the Eye: The Merging of Perceptual and Conceptual Knowledge in the Anterior Temporal Face Area

- 1Frontotemporal Dementia Unit, Department of Neurology, Massachusetts General Hospital and Harvard Medical School, Charlestown, MA, USA

- 2Department of Psychology, University of Texas Austin, Austin, TX, USA

- 3Department of Psychology, Temple University, Philadelphia, PA, USA

An emerging body of research has supported the existence of a small face sensitive region in the ventral anterior temporal lobe (ATL), referred to here as the “anterior temporal face area”. The contribution of this region in the greater face-processing network remains poorly understood. The goal of the present study was to test the relative sensitivity of this region to perceptual as well as conceptual information about people and objects. We contrasted the sensitivity of this region to that of two highly-studied face-sensitive regions, the fusiform face area (FFA) and the occipital face area (OFA), as well as a control region in early visual cortex (EVC). Our findings revealed that multivoxel activity patterns in the anterior temporal face area contain information about facial identity, as well as conceptual attributes such as one’s occupation. The sensitivity of this region to the conceptual attributes of people was greater than that of posterior face processing regions. In addition, the anterior temporal face area overlaps with voxels that contain information about the conceptual attributes of concrete objects, supporting a generalized role of the ventral ATLs in the identification and conceptual processing of multiple stimulus classes.

Introduction

Over a decade of neuroimaging work has characterized the neural basis of face perception and identified several nodes that preferentially respond to faces relative to other objects (Haxby et al., 2000; Kanwisher and Yovel, 2006). Most of this work has focused on the fusiform face area (FFA) and the occipital face area (OFA; Kanwisher et al., 1997; Kanwisher and Yovel, 2006; Pitcher et al., 2007). However, an emerging literature has implicated an anterior temporal face area, on the ventral surface of the right anterior temporal lobes (vATLs) in or near perirhinal cortex, in facial processing (Tsao et al., 2008; Pinsk et al., 2009; Rajimehr et al., 2009; Avidan et al., 2013).

The function of the anterior temporal face area remains unclear. One body of research implicates this region in perception. Using single-cell recordings it has been shown that the identity of previously unfamiliar faces is represented in the population code of monkey vATL face areas (Freiwald and Tsao, 2010). In humans, discrete portions of the vATLs have the ability to discriminate between individual unfamiliar faces, as reported by two fMRI studies using multivoxel pattern analysis (MVPA; Kriegeskorte et al., 2007; Nestor et al., 2011). More recently it was shown that identity representations in the anterior temporal face area are sensitive to perceptual manipulations that affect identity, but not to perceptual manipulations that leave identity intact such as changes in rotation or lighting (Nasr and Tootell, 2012; Anzellotti et al., 2014). Furthermore, it has recently been shown that image invariant identity representations persist in the right vATL face region of a patient with focal damage to the OFA and FFA (Yang et al., 2016).

However, other work has pointed to a mnemonic function. The vATLs have been consistently implicated in semantic dementia, and individuals with this disorder frequently experience difficulty remembering biographical information about specific people (Evans et al., 1995; Snowden et al., 2004). Similar deficits have been observed in patients with focal unilateral lesions (reviewed by Olson et al., 2007). Damasio et al. (1990) coined the term “amnesic associative prosopagnosia” for patients with unilateral anterior temporal lobe (ATL) resections, as these individuals typically can discriminate individual faces based on their perceptual features, but fail to recognize the faces of familiar individuals. More recently, numerous studies have reported that portions of the ATLs are preferentially sensitive to familiar relative to novel faces, presumably due to the wealth of semantic information that is retrieved upon viewing a familiar face (reviewed by Von Der Heide et al., 2013). These effects appear to be lateralized, with the left ATL playing a role in retrieving names (Tsukiura et al., 2002, 2010), and the right ATL playing a role in the retrieval of person-specific semantic information, such as a person’s occupation, from facial cues (Tsukiura et al., 2002, 2006, 2008). In the episodic memory literature, it has been shown that activity patterns in perirhinal cortex, overlapping with face-selective voxels, differentiate between unfamiliar and recently learned familiar faces that have no semantic content (Martin et al., 2013, 2015).

We have recently proposed that the anterior temporal face area may perform person identification by integrating abstracted perceptual information with person-specific semantic knowledge (Collins and Olson, 2014). Here we started to test this hypothesis by using MVPA to assess the sensitivity of the anterior temporal face area to different aspects of newly learned person information: identity, occupation, and the setting in which they are typically encountered. We compared the sensitivity profile of the anterior temporal face area to that of the more posterior face processing regions (the OFA and FFA) and to a control region in early visual cortex (EVC). In addition, we assessed whether the anterior temporal face area overlapped with voxels in the vATLs that represent semantic information about non-social items: common objects.

Materials and Methods

Data Acquisition

Neuroimaging sessions were conducted at Temple University Hospital on a 3.0 T Siemens Verio scanner (Erlangen, Germany) using a 12-channel Siemens head coil. The functional runs were preceded by a high-resolution anatomical scan that lasted 9 min. The T1-weighted images were acquired using a three-dimensional magnetization prepared rapid acquisition gradient echo pulse sequence. Imaging parameters were as follows: 144 contiguous slices of 0.9766 mm thickness; repetition time (TR) = 1900 ms; echo time (TE) = 2.94 ms; FOV = 188 × 250 mm; inversion time = 900 ms; voxel size = 1 × 0.9766 × 0.9766 mm; matrix size = 188 × 256; flip angle = 9°.

Visual stimuli were shown using a rear-mounted projection system. Stimulus delivery was controlled by E-Prime software (Psychology Software Tools Inc., Pittsburgh, PA, USA) on a Windows desktop located in the scanner control room. Responses were recorded using a four-button fiber optic response pad system.

Functional T2*-weighted images sensitive to blood oxygenation level-dependent contrasts were acquired using a gradient-echo echo-planar pulse sequence and automatic shimming. Imaging parameters were as follows: TR = 3 s; TE = 20 ms; FOV = 240 × 240 mm; voxel size = 3 × 3 × 2.5 mm; matrix size = 80 × 80; flip angle = 90°; GRAPPA = 2. Thirty-eight interleaved slices with 2.5 mm thickness were acquired aligned to the AC-PC line, with full brain coverage. Data preprocessing and univariate analysis of fMRI data were performed using FEAT (fMRI Expert Analysis Tool) version 6.0, part of the software library of the Oxford Centre for Functional MRI of the Brain (FMRIB). MVPA analysis was carried out using the Princeton MVPA Toolbox version 0.7.1 running on MATLAB R2012b, and with custom MATLAB software.

Early imaging studies of face perception likely missed anterior activations because they used a restricted FOV that excluded the inferior temporal lobe from image acquisition, or because they suffered from the well-known problem of imaging the ATLs: susceptibility artifacts and signal distortion due to the proximity of these regions to the nasal sinuses and ear canals (Devlin et al., 2000; Visser et al., 2010). We took this problem into account in designing our study and made several adjustments to optimize our signal-to-noise ratio. We used a small slice thickness (2.5 mm), which has been shown to reduce signal drop-out caused by variations in the static magnetic field within a voxel (Farzaneh et al., 1990; Olman et al., 2009; Carlin et al., 2012). We also used a short TE (20 ms), which has also been shown to reduce signal drop-out (Farzaneh et al., 1990; Olman et al., 2009).

To assess whether there was adequate sensitivity for signal detection in the ATLs, the temporal signal to noise ratio (tSNR) for each participant was calculated using the first run of the functional localizer, by dividing the mean of the time series by the residual error SD after pre-processing. Visual inspection of individual tSNR maps confirmed signal coverage in the ATLs of all subjects that was well within a proper sensitivity range (>40; Murphy et al., 2007). Some signal loss in the medial orbitofrontal cortex (e.g., gyrus rectus) was observed and varied between participants.
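The tSNR computation described above can be sketched as follows. This is a minimal illustration in Python using only the standard library, not the pipeline used in the study. It assumes that the residual noise can be approximated by deviations from the voxel's mean signal, whereas the study used the residual error after pre-processing. The voxel names and time series are hypothetical toy values; only the threshold of 40 comes from the text.

```python
from statistics import mean, stdev


def temporal_snr(time_series):
    """tSNR of one voxel: mean signal divided by the SD of the noise.

    Assumption: noise is approximated by deviations from the mean signal;
    the study used the residual error SD after pre-processing.
    """
    mu = mean(time_series)
    sd = stdev(time_series)  # sample SD (n - 1 denominator)
    if sd == 0:
        return float("inf")
    return mu / sd


def tsnr_map(voxel_series):
    """Map each voxel label to its tSNR."""
    return {name: temporal_snr(ts) for name, ts in voxel_series.items()}


# Hypothetical toy data: one stable voxel and one with large fluctuations.
toy = {
    "stable_voxel": [1000, 1010, 990, 1005, 995, 1000],
    "noisy_voxel": [400, 520, 310, 470, 350, 450],
}
tsnr = tsnr_map(toy)

# Threshold of 40 taken from the text (Murphy et al., 2007).
adequate = {name: value > 40 for name, value in tsnr.items()}
```

In this toy case only the stable voxel exceeds the threshold, which is the kind of check that visual inspection of the individual tSNR maps would perform across the ATLs.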

Participants

Eighteen participants (8 females) were recruited from Temple University. All participants were between the ages of 18 and 30, without history of brain injury or psychiatric illness, had normal or corrected-to-normal vision, and were right-handed. Written informed consent was obtained from each subject before the first training session, and participants were compensated monetarily for their time. One participant was excluded from all analyses due to excessive movement, and another was excluded due to a failure to complete the face-training paradigm, resulting in a final sample of 16 participants.

Behavioral Training Sessions

Eight gray-scale images of real male faces, all lacking facial hair and glasses and facing forward, were used in the training paradigm (stimuli courtesy of Michael J. Tarr). In addition, eight object images were selected from the Internet. Object stimuli consisted of gray-scale images of eight different items that would typically be found in a kitchen or hospital (blood pressure pumps, thermometers, corkscrews, mixers), which could be sorted into four different object categories. All stimuli were 360 by 360 pixels and displayed on a white background.

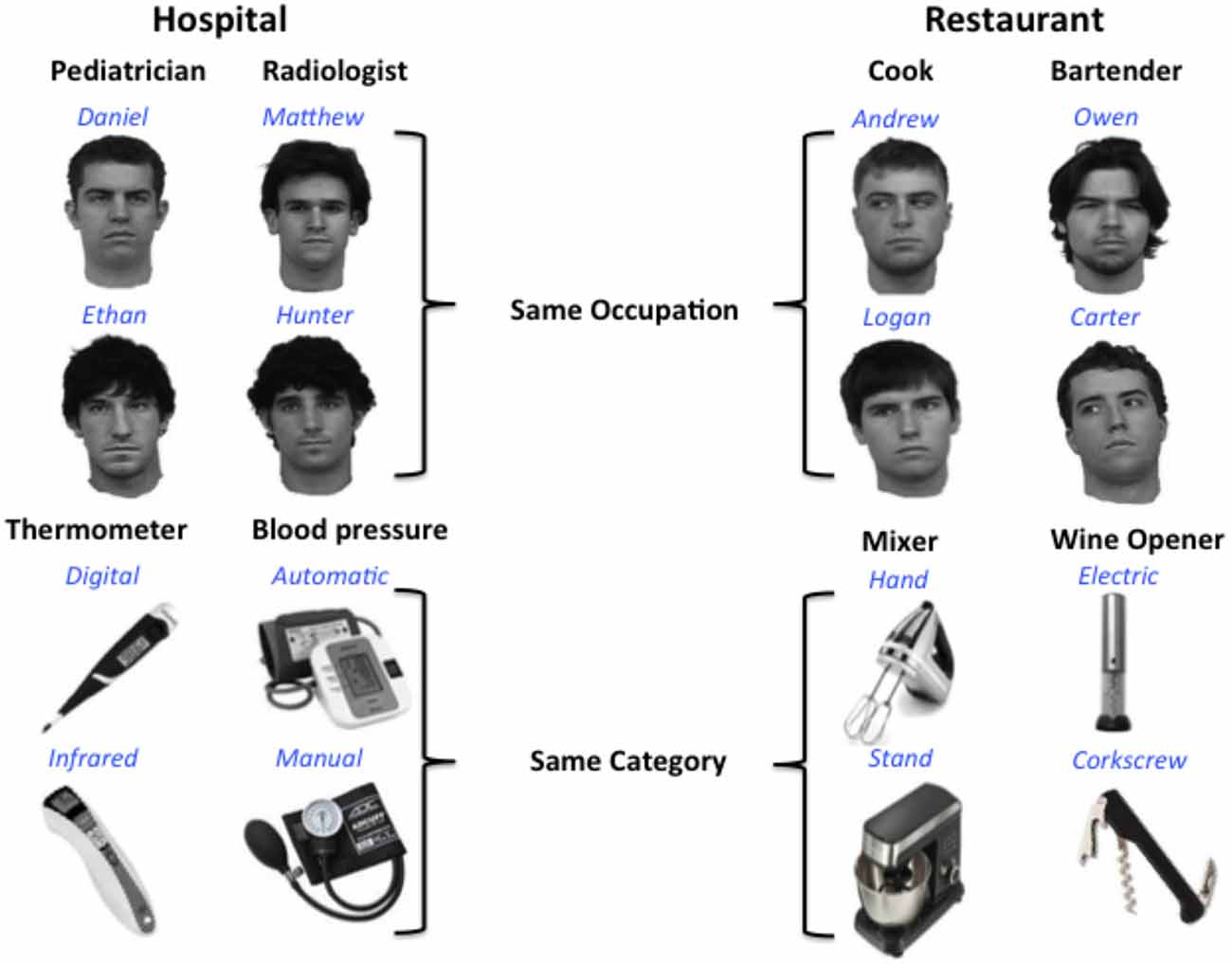

Participants learned to associate a name and category label (occupation or object type) with each of the face and object stimuli (see Figure 1). Participants also learned to associate performance rankings (2 or 5 stars) with each face and object stimulus for a different project that will not be discussed here. To make object and face information as conceptually similar as possible, two object categories and two occupation categories were selected from two familiar public locations: restaurants and hospitals. Object categories with at least two different, distinct exemplars were selected, and these two exemplars represent the individual object names within each object category. Similarly, different male names were chosen to represent individual faces within each occupation category. Common, distinct male names were selected from the US Social Security list of the top 100 baby names for the 2000s. Training was conducted over 2 days in a laboratory setting, with the first session lasting approximately 45 min, and the second session lasting 30 min.

Figure 1. Design for the multivoxel pattern analysis (MVPA). Participants learned to associate distinct faces and objects with identifying names (blue) and category labels or occupations (black). Each occupation or object category is typically found in one of two locations: a hospital or restaurant. Participants were not explicitly informed of the location associations.

During the first training session, participants first completed “show” trials in which they passively viewed slides containing a face or object image, along with that face or object’s associated name and category labels. Each slide was presented four times (64 trials total) for 5 s in a random order, and participants were instructed to learn the information for each face and object. Next, participants completed “category response” trials, in which they viewed an image of a face or object, and were instructed to select the category that matched the displayed stimulus from a list of the four possible category labels presented below. The image and response choices remained on the screen until the participant responded, after which the correct category label was displayed for 2 s. Each face or object stimulus was presented twice (32 trials total) in a random order. Next, participants completed 32 “status-response” trials, during which each trained image was presented one at a time and participants selected the performance rating assigned to each item (either two or five stars). Following their response, the correct answer was displayed for 2 s. Participants completed blocks of trials in the following order, two times: show trials (64 total); category-response trials (32 total); show trials (64 total); and status-response trials (32 total).

The second training session used the same trial blocks in the same order as the first session. Afterwards, participants completed a free recall test, where a number was presented on the computer screen with one of the 16 trained images. Participants were instructed to write down on a separate sheet of paper all of the information they had learned for each image, including name, category, and status information.

Participants completed an additional recall test on the third day of the study, immediately before their fMRI session, to ensure they had retained all information learned during training. The recall test consisted of a piece of paper with the 16 trained images, and participants were instructed to write next to each face or object the associated name, category, and status information learned during training.

Regions of Interest Task

A functional localizer scan preceded the experimental runs to localize areas sensitive to faces (the OFA, FFA, anterior temporal face area) and objects (the LOC, results reported elsewhere). The functional localizer utilized a block design, in which seven types of images were presented: famous faces, non-famous faces, famous places, non-famous places, common objects, and scrambled images taken from publicly available sources on the Internet. These stimuli were presented in gray-scale and varied in color, expression, pose, and background. The inclusion of famous faces in our functional localizer was motivated by our previous work (Von Der Heide et al., 2013) showing that famous faces activate the same regions in the ATLs as non-famous faces, but that the activations tend to be larger and more robust. The images of famous faces and landmarks included here have been pilot tested to ensure that they are highly familiar within our study cohort (see Ross and Olson, 2012). An additional null stimulus was used, consisting of a gray screen and central fixation cross.

Images were randomly selected from lists of 89 images per category, and presented one at a time for 400 ms (350 ms ISI) in eight category blocks consisting of 14 trials. Participants performed a one-back task, responding whenever a randomly selected image was presented twice in a row (twice per block). The full cycle of category blocks was presented 5 times, with the order of categories varying in a fixed randomized order. Each cycle ended with a 12,000 ms presentation of a central fixation cross. The full localizer run lasted 9 min and 12 s and was completed twice.

Regions of Interest Definition

The first five volumes of each run were discarded prior to any analyses. The following pre-processing steps were applied to all functional localizer data: non-brain removal using FSL’s brain extraction tool, motion correction using FSL’s MCFLIRT linear realignment tool, spatial smoothing using a 5 mm FWHM Gaussian kernel, high-pass temporal filtering with a 100 s cutoff, and un-distorting of the EPI data to correct for magnetic field distortions by means of individual field maps. EPI data were registered to each participant’s T1-weighted anatomical scan using boundary-based registration (BBR), and normalized to a standard Montreal Neurological Institute (MNI-152) template.

After preprocessing each participant’s functional localizer runs, the data were submitted to a fixed effects general linear model using FSL’s fMRI expert analysis tool (FEAT), with one predictor that was convolved with a double-gamma model of the hemodynamic response function (HRF) for each block type (face, places, fixation). Regions of interest (ROIs) were identified by isolating the peaks showing greater activity for faces than for places (uncorrected, p < 0.05) in regions that have previously been implicated in face processing (for a review, see Collins and Olson, 2014). Spheres of 9 mm radius were generated, centered on the voxel with the highest activation within each peak. This ROI size (average = 106 voxels after intersection with the brain mask) was selected because it provided the best coverage of face-selective activations in the ATLs across individuals, and because it was consistent with previous MVPA studies that have demonstrated sensitivity to facial identity in the ATLs (Kriegeskorte et al., 2007; Goesaert and Op de Beeck, 2013; Anzellotti et al., 2014). Our face-selective ROIs included bilateral FFA, located in the mid fusiform gyrus, the OFA, located in the inferior occipital gyrus, and the anterior temporal face area, located on the ventral surface of the ATLs. In some participants, multiple face-selective regions were identified in the ventral ATLs. For these participants the vATL functional ROI was centered on the most inferior peak, on the inferior temporal or fusiform gyrus, along the anterior collateral sulcus, consistent with previous work (Tsao et al., 2008; Rajimehr et al., 2009; Nestor et al., 2011; O’Neil et al., 2014). To control for effects driven by the low-level perceptual features of our stimuli, we additionally generated a 9-mm spherical ROI in EVC around the voxel showing the greatest activation for all visual stimuli (faces + places) vs. fixation within V1/BA17 as defined by the Juelich Histological Atlas in FSL. None of the face-selective ROIs overlapped in any participant at this sphere size; however, the EVC and OFA ROIs overlapped partially in two participants. All ROIs were aligned to the individual subject’s (non-normalized) functional space using FMRIB’s Linear Image Registration Tool. Given the wealth of data suggesting that the face-processing network is strongly lateralized to the right hemisphere (Sergent et al., 1992; Kanwisher et al., 1997; Rossion et al., 2003; Snowden et al., 2004; Gainotti, 2007, 2013, 2015; Gainotti and Marra, 2011; Duchaine and Yovel, 2015), we chose to restrict our analysis to face-processing ROIs (vATL, FFA, and OFA) in the right hemisphere, and bilateral EVC.
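To make the geometry of the spherical ROIs concrete, the following Python sketch (standard library only) collects the voxels whose centres fall within 9 mm of a peak voxel on a grid with the functional voxel size reported above (3 × 3 × 2.5 mm). It is an illustration under stated assumptions rather than the procedure used in the study: the function names, the peak coordinates, and the use of the acquisition grid bounds as a stand-in for the brain mask are all hypothetical.

```python
def sphere_offsets(radius_mm, voxel_size_mm):
    """Voxel offsets (di, dj, dk) whose centres lie within radius_mm of the peak."""
    sx, sy, sz = voxel_size_mm
    # Largest whole-voxel reach along each axis.
    rx, ry, rz = (int(radius_mm // s) for s in voxel_size_mm)
    offsets = []
    for di in range(-rx, rx + 1):
        for dj in range(-ry, ry + 1):
            for dk in range(-rz, rz + 1):
                dist_sq = (di * sx) ** 2 + (dj * sy) ** 2 + (dk * sz) ** 2
                if dist_sq <= radius_mm ** 2:
                    offsets.append((di, dj, dk))
    return offsets


def spherical_roi(peak, grid_shape, radius_mm=9.0, voxel_size_mm=(3.0, 3.0, 2.5)):
    """Set of voxel indices in a sphere around `peak`, clipped to the grid.

    Assumption: clipping to the grid stands in for intersection with a
    brain mask, which is not modelled here.
    """
    roi = set()
    for di, dj, dk in sphere_offsets(radius_mm, voxel_size_mm):
        i, j, k = peak[0] + di, peak[1] + dj, peak[2] + dk
        if 0 <= i < grid_shape[0] and 0 <= j < grid_shape[1] and 0 <= k < grid_shape[2]:
            roi.add((i, j, k))
    return roi


# Grid taken from the reported acquisition: 80 x 80 matrix, 38 slices.
grid = (80, 80, 38)

# Hypothetical peak locations: one central, one near the bottom of the volume.
central_roi = spherical_roi((40, 40, 19), grid)
edge_roi = spherical_roi((40, 40, 1), grid)
```

On this grid an unclipped sphere contains 139 voxel centres, and a sphere near the edge of the volume contains fewer. This is in line with the reported average of 106 voxels after intersection with the brain mask, since ventral ATL peaks lie close to the boundary of the brain.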

MVPA Experimental Task and Design

Four novel exemplars of the same 8 male faces used during training were used in the experimental runs. These faces were angled 30° and 45° to the right or left. Four novel exemplars of each of the eight object types used during training were also used in the fMRI task. As in prior work on object and face representations in the ATLs (see Peelen and Caramazza, 2012; Anzellotti et al., 2014), novel exemplars were used so that the classifier would decode object or facial identity rather than individual images.

The main experiment used a block design with a target detection task. The target detection task was orthogonal to the interests of the study in order to ensure that any activations observed were not due to task-related effects. Four exemplar images for each face and object identity (Fred, Carl, Hand-Mixer, Digital Thermometer, etc.) from the training sessions were used for the main experiment. Stimuli were presented in 16 identity blocks (8 face, 8 object) per run, consisting of 16 trials each. Blocks were presented in a fixed random order, alternating between faces and objects. Within each block, each exemplar was presented 4 times in a fixed random order for 700 ms (425 ms ISI), and each block was followed by 3000 ms of fixation. Participants completed 16 blocks per run, lasting 5 min and 45 s each, and six total runs. Participants completed a target detection task in which they responded with a button press each time a green dot was displayed on an image. Target images appeared 3 times per block, with their exact locations following one of four predetermined patterns that varied across trial blocks in a fixed random order (to give the illusion that the order was completely random).

MVPA Statistical Analysis

The first five volumes of each experimental run were discarded prior to any analyses. The following pre-processing steps were applied to all experimental data prior to our MVPA analysis: non-brain removal using FSL’s brain extraction tool, motion correction using FSL’s MCFLIRT linear realignment tool, high-pass temporal filtering with a 50 s cutoff, and un-distorting of the EPI data to correct for magnetic field distortions by means of individual field maps. EPI data were registered to each participant’s T1-weighted anatomical scan using BBR.

We used MVPA to assess the sensitivity of the functionally defined bilateral OFA, FFA, and anterior temporal face areas to two types of information associated with faces and objects: facial/object identity, and the category that the face or object belonged to. We additionally assessed the sensitivity of each of our functionally defined ROIs to the typical location of each object-type or person category (i.e., either a kitchen or hospital), regardless of stimulus type.

Across all analyses, data were z-scored within each run to control for baseline shifts in the magnetic resonance signal, and all regressors were convolved with a standard HRF. For the first analysis we defined 8 regressors, one for each facial identity. We then assessed whether our classifier could identify unique multivoxel patterns for each facial identity. For the second analysis we defined four regressors, one for each occupation label, and assessed whether our classifier could identify unique multivoxel patterns for different faces that shared the same occupation. Next we performed analogous analyses assessing the ability of our classifiers to identify unique multivoxel patterns for distinct object identities, and for objects that shared a category label. Finally, we defined two regressors, one for each spatial location (Hospital vs. Kitchen) and assessed whether our classifier could identify unique multivoxel patterns for different faces and objects that are associated with the same location. We used a Gaussian Naïve Bayes (GNB) classifier and a leave-one-run-out cross validation scheme in which the classifier was trained on five runs of data and tested on the remaining un-trained run. This procedure was repeated 6 times, each time using a different test run, and the average classification accuracy was calculated for each ROI and compared to chance performance (face/object identity = 0.125, face/object category = 0.25, location = 0.5) using a one-tailed t-test. Following previous studies that have employed similar methods (Epstein and Morgan, 2012; Peelen and Caramazza, 2012; Goesaert and Op de Beeck, 2013; Anzellotti et al., 2014), all analyses were restricted to a priori-defined regions of interest and statistical significance is reported using an uncorrected threshold of p < 0.05. However, in Figure 3 we also indicate which ROIs have statistical effects that survive a conservative correction for multiple comparisons (p < 0.0125).
For analyses in which multiple ROIs showed classification accuracy significantly above chance, differences in the classification accuracy of each ROI were compared using two-tailed t-tests to avoid making assumptions about the directionality of the effects.
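The cross-validation scheme described above can be illustrated with a short Python sketch using only the standard library. It is not the Princeton MVPA Toolbox code used in the study. It assumes one hypothetical pattern per identity per run, simulated as a fixed prototype plus Gaussian noise, and omits HRF convolution; all function names, the variance floor, and the toy data parameters are assumptions made for illustration.

```python
import math
import random
from collections import defaultdict
from statistics import mean, pstdev


def zscore_within_run(patterns, runs):
    """Z-score each voxel (column) separately within each run."""
    out = [None] * len(patterns)
    for run in set(runs):
        idx = [i for i, r in enumerate(runs) if r == run]
        n_vox = len(patterns[idx[0]])
        mus = [mean(patterns[i][v] for i in idx) for v in range(n_vox)]
        sds = [pstdev([patterns[i][v] for i in idx]) or 1.0 for v in range(n_vox)]
        for i in idx:
            out[i] = [(patterns[i][v] - mus[v]) / sds[v] for v in range(n_vox)]
    return out


def fit_gnb(patterns, labels, var_floor=1e-2):
    """Per-class voxel means, variances, and log priors (Gaussian Naive Bayes)."""
    by_class = defaultdict(list)
    for x, y in zip(patterns, labels):
        by_class[y].append(x)
    model = {}
    for y, rows in by_class.items():
        cols = list(zip(*rows))
        mus = [mean(c) for c in cols]
        variances = [max(pstdev(c) ** 2, var_floor) for c in cols]  # assumed floor
        model[y] = (mus, variances, math.log(len(rows) / len(patterns)))
    return model


def predict_gnb(model, x):
    """Return the class with the highest log posterior for pattern x."""
    def log_post(params):
        mus, variances, log_prior = params
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * s2) - (xi - m) ** 2 / (2 * s2)
            for xi, m, s2 in zip(x, mus, variances)
        )
    return max(model, key=lambda y: log_post(model[y]))


def leave_one_run_out_accuracy(patterns, labels, runs):
    """Mean accuracy over folds, each holding out one run for testing."""
    z = zscore_within_run(patterns, runs)
    fold_acc = []
    for test_run in sorted(set(runs)):
        train = [i for i, r in enumerate(runs) if r != test_run]
        test = [i for i, r in enumerate(runs) if r == test_run]
        model = fit_gnb([z[i] for i in train], [labels[i] for i in train])
        hits = sum(predict_gnb(model, z[i]) == labels[i] for i in test)
        fold_acc.append(hits / len(test))
    return mean(fold_acc)


def make_toy_data(n_runs=6, n_classes=8, n_voxels=30, noise_sd=0.3, seed=0):
    """Hypothetical data: one noisy copy of each class prototype per run."""
    rng = random.Random(seed)
    prototypes = [[rng.gauss(0, 1) for _ in range(n_voxels)] for _ in range(n_classes)]
    patterns, labels, runs = [], [], []
    for run in range(n_runs):
        for y, proto in enumerate(prototypes):
            patterns.append([p + rng.gauss(0, noise_sd) for p in proto])
            labels.append(y)
            runs.append(run)
    return patterns, labels, runs


patterns, labels, runs = make_toy_data()
accuracy = leave_one_run_out_accuracy(patterns, labels, runs)
chance = 1 / 8  # eight identities, as in the identity analyses
```

Because the toy signal is strong, accuracy here sits far above the chance level of 0.125; real multivoxel data would be expected to yield accuracies much closer to chance, which is why the per-participant accuracies are tested against chance at the group level.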

Results

Behavioral Results

Participants were highly accurate at recollecting information about trained people and objects immediately following training (M = 0.98, SD = 0.05) and immediately prior to scanning (M = 0.97, SD = 0.04). Immediately after training, recall performance for object category was significantly greater than for object name (M = 99% vs. 93%, t(17) = 2.54, p = 0.021), with no other differences in recall accuracy being observed across any of the attributes tested. Likewise, participants were highly accurate at detecting targets across both functional localizer runs (M = 0.97, SD = 0.03) and all six experimental runs (M = 0.98, SD = 0.04), indicating that attention to stimuli was maintained across the experiment.

Functional Localizer

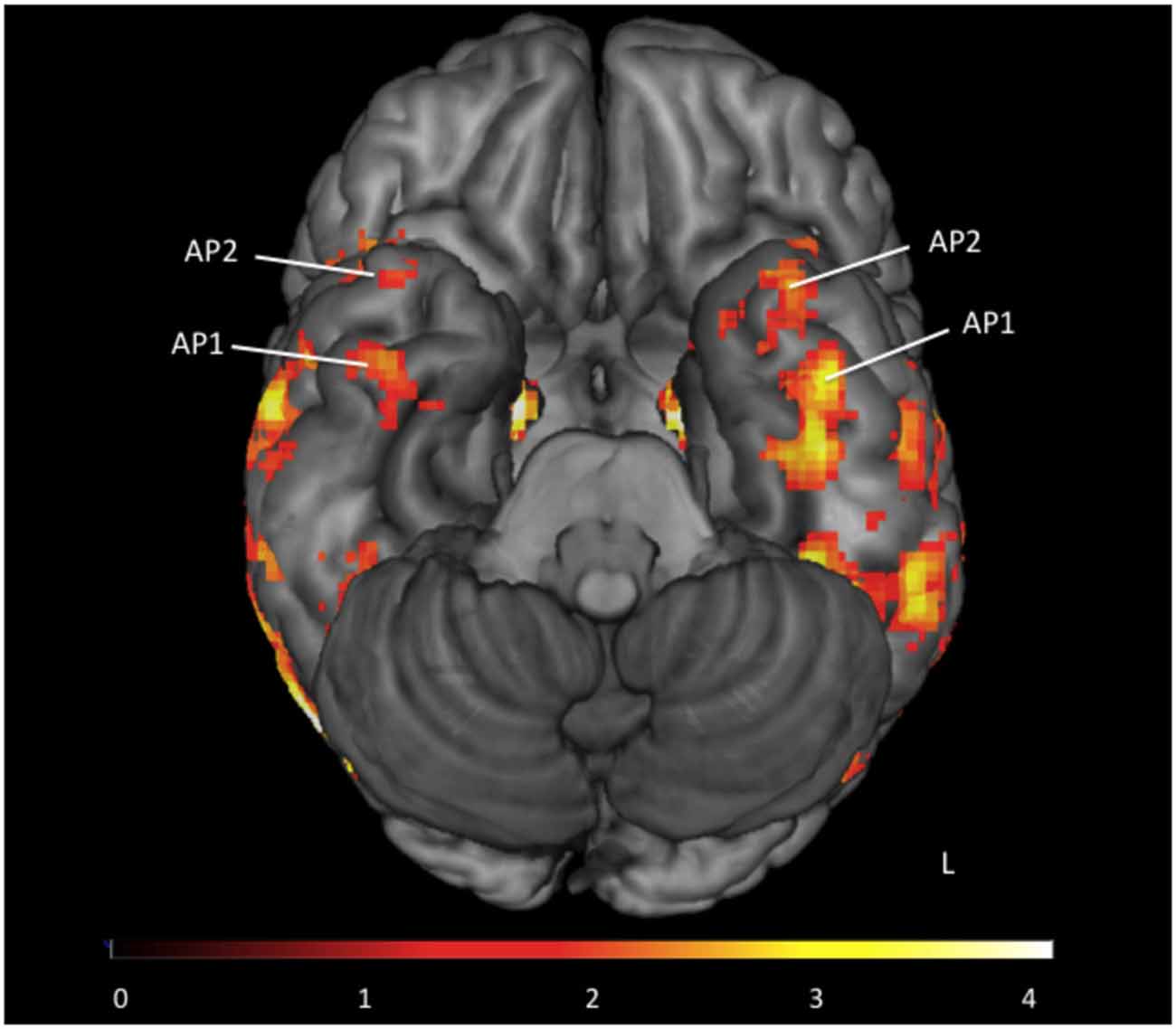

All participants had 1 or 2 face-sensitive regions anterior to the FFA (see Figure 2). The first anterior temporal face area (AP1) was located on the inferior temporal or fusiform gyrus, along the anterior collateral sulcus. The second anterior temporal face area (AP2) was located more anteriorly, on the inferior or middle temporal gyrus near the temporal pole. These regions showed no laterality bias, and were found in locations consistent with the location of anterior temporal face areas identified in earlier fMRI studies (Tsao et al., 2008; Rajimehr et al., 2009). Their location is also consistent with the facial identity area identified by Nestor et al. (2011). In the right hemisphere 13 subjects had both AP1 and AP2, and 3 subjects had AP1 only. In the left hemisphere 14 subjects had both AP1 and AP2, and 2 subjects had AP1 only. For consistency with previous literature (Rajimehr et al., 2009; Nasr and Tootell, 2012; Avidan et al., 2013; Axelrod and Yovel, 2013; Yang et al., 2016) our vATL ROI was located in right AP1 for all participants.

Figure 2. Group map of anterior temporal face areas. Average activations for the contrast faces > places (thresholded z > 1.65) superimposed on a ventral view of the inferior surface of the brain. Anterior temporal face areas 1 and 2 are labeled as first anterior temporal face area (AP1) and second anterior temporal face area (AP2), respectively. Color scale presented as z-scores.

Differential Discriminability in the Face-Processing Network

To test for conceptual face representations in the face-processing network, we assessed the ability of each region to accurately classify facial identity and occupation (Figure 3). Facial identity was accurately decoded within the right anterior temporal face area [t(15) = 2.21, p = 0.021]; however, this effect was not found in the right FFA, OFA or EVC (ps > 0.1). Facial occupation was also accurately decoded by multivoxel patterns in the right anterior temporal face area [t(15) = 2.52, p = 0.012] as well as the right FFA [t(15) = 2.12, p = 0.026] and EVC [t(15) = 2.27, p = 0.019]. The right OFA did not display sensitivity to facial occupation that was significantly above chance (p > 0.05). Classification accuracy for facial occupation was significantly higher in the right anterior temporal face area than in the FFA [t(15) = 2.43, p = 0.028] and was marginally higher than in EVC [t(15) = 1.94, p = 0.072].

Figure 3. The classification accuracy of each face-network region of interest (ROI) for facial identity, facial occupation, object identity, object category, and location as assessed by the MVPA analysis. The dashed line represents chance performance (face/object identity = 0.125, face/object category = 0.25, location = 0.5). Single asterisks (*) indicate significant above-chance classification accuracy at an uncorrected threshold as indicated by a one-tailed t-test (p < 0.05). Double asterisks (**) indicate above-chance classification accuracy that survives a Bonferroni correction for multiple comparisons (p < 0.0125). All face-selective ROIs were located in the right hemisphere.

Next we assessed the ability of each ROI to accurately classify different aspects of object information within its multivoxel activity pattern. Both the right anterior temporal face area [t(15) = 2.20, p = 0.022] and EVC [t(15) = 2.40, p = 0.015] were able to accurately classify objects according to their identity. Classification accuracy for object identity did not differ significantly between the anterior temporal face area and EVC (p > 0.05). Classification accuracy for object category was also significantly above chance in the anterior temporal face area [t(15) = 2.25, p = 0.020], but not in the FFA, OFA, or EVC (ps > 0.05). Finally, the ability of each face-processing region to accurately classify faces and objects according to where they are typically found (e.g., a doctor and stethoscope are typically found in a hospital) was assessed. Only the anterior temporal face area demonstrated sensitivity to conceptual knowledge about location that was significantly above chance, t(15) = 2.89, p = 0.006. Exploratory follow-up analyses revealed no sensitivity to any of the contrasts of interest in any left-hemisphere ROI (vATL, FFA, or OFA, all ps > 0.05).
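The relationship between the reported t statistics, the one-tailed p-values, and the corrected threshold can be checked with a short Python sketch (standard library only). The t and p values below are those reported above for the right anterior temporal face area. The upper-tail probability is obtained by numerically integrating the Student's t density with Simpson's rule, which is an illustrative choice and not the software used in the study. The divisor of 4 in the Bonferroni threshold is an assumption (for example, the four ROIs); the text gives only the resulting value of 0.0125.

```python
import math


def t_pdf(t, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + t * t / df) ** (-(df + 1) / 2)


def one_tailed_p(t, df, steps=2000):
    """Upper-tail p-value P(T >= t), using Simpson's rule on [0, |t|].

    `steps` must be even for Simpson's rule.
    """
    h = abs(t) / steps
    total = t_pdf(0, df) + t_pdf(abs(t), df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return 0.5 - area if t >= 0 else 0.5 + area


# (t, reported one-tailed p) for the right anterior temporal face area, df = 15.
reported = {
    "face identity": (2.21, 0.021),
    "face occupation": (2.52, 0.012),
    "object identity": (2.20, 0.022),
    "object category": (2.25, 0.020),
    "location": (2.89, 0.006),
}

recomputed = {name: one_tailed_p(t, 15) for name, (t, _) in reported.items()}

# Assumed Bonferroni divisor of 4; the text states only the threshold 0.0125.
alpha_bonferroni = 0.05 / 4
survivors = [name for name, (_, p) in reported.items() if p < alpha_bonferroni]
```

Using the reported p-values, only the facial occupation and location effects fall below the corrected threshold, while the remaining effects are significant at the uncorrected level only.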

Discussion

The results reported here show that a face sensitive region in the ventral ATLs, dubbed the “anterior temporal face area”, represents information that goes beyond perceptual features of faces to include semantic information about a person’s identity and occupation. These findings are consistent with previous studies implicating the functionally-localized anterior temporal face area in the representation of facial identity (Anzellotti et al., 2014; Yang et al., 2016), and further demonstrate that this region may possess the unique ability to represent abstract conceptual information about an individual.

Interestingly, the FFA was sensitive to facial category, but not facial identity in our study. The existing literature is mixed with regard to this issue: several prior MVPA studies reported sensitivity to facial identity in the FFA (Nestor et al., 2011; Axelrod and Yovel, 2013, 2015; Goesaert and Op de Beeck, 2013; Anzellotti et al., 2014), while another MVPA study failed to find identity representations in the FFA (Kriegeskorte et al., 2007). To our knowledge only one study has demonstrated sensitivity in the FFA to semantic attributes associated with a person (van den Hurk et al., 2011).

The vATLs: Face Memory vs. Face Perception

We have previously suggested that a region of the vATL plays a role in face perception as well as person memory (Collins and Olson, 2014). Here we extend these findings by showing that the functionally-defined anterior temporal face area is sensitive to the identity of a facial stimulus, as defined by its perceptual features across changes in viewpoint, as well as learned semantic attributes about individuals. Although the differential sensitivity of the vATL face region to different types of person information remains to be explored, based on the findings reported here, as well as a wealth of research demonstrating sensitivity of the ATLs generally to semantic knowledge (Collins and Olson, 2014), we speculate that the anterior temporal face area may be critically involved in the integration of perception and memory for the end goal of person identification. This proposal is consistent with previous cognitive models of face recognition that supported the existence of an amodal person identity node (PIN) that, when activated, enabled the retrieval of person-specific semantic information (Bruce and Young, 1986). The anterior temporal face area is ideally suited to serve this function due to several special properties of this region.

First, the anterior temporal face area is sensitive to perceptual attributes of faces but in a highly restricted manner. For instance, we tested two humans with unilateral ATL resections across a range of face discrimination tasks using carefully controlled morphed face stimuli. We found that they performed normally on many difficult tasks requiring discrimination of facial gender or facial age, but performed abnormally low when required to discriminate facial identity defined by altering a gestalt representation (Olson et al., 2015). Our results, and those of Olson et al. (2015), mimic findings in macaques (Freiwald and Tsao, 2010) and humans (Freiwald and Tsao, 2010; Nasr and Tootell, 2012; Anzellotti et al., 2014) showing that cells in the vATLs are only sensitive to perceptual manipulations that alter facial identity, but are insensitive to many low-level perceptual manipulations that leave facial identity intact such as inversion, contrast reversal, and viewpoint. This region may even be insensitive to higher-level perceptual changes that leave identity intact such as changes in facial expression (Nestor et al., 2011).

Second, there is evidence from the episodic memory literature that portions of the ATLs are sensitive to a range of mnemonic manipulations: responsiveness is enhanced by knowledge-based familiarity in the form of semantic knowledge (Nieuwenhuis et al., 2012; Ross and Olson, 2012), but decreased by perceptual familiarity in the form of stimulus repetition (Sugiura et al., 2001, 2011). Cells in this region also have the ability to represent associative pairings (Brambati et al., 2010; Eifuku et al., 2010; Nieuwenhuis et al., 2012), and activity patterns in this region represent information about prior encounters with a facial image (Martin et al., 2013, 2015). One recent study showed that face-place associations were initially represented in the human hippocampus but after a 25 h delay were found to reside in the ATL (Nieuwenhuis et al., 2012). It has often been suggested that the hippocampus is responsible for the initial consolidation of associations but that a short time later, these representations are shipped out to various parts of the cortex, a notion supported by these findings. The tight structural interconnectivity of the vATLs, amygdala, and anterior hippocampus via short-range fiber pathways may facilitate this process (Insausti et al., 1987; Morán et al., 1987; Suzuki and Amaral, 1994; Blaizot et al., 2010).

Object Sensitivity in the Anterior Temporal Face Area

Notably, our anterior temporal ROI contained voxels that also represented the identity and category of concrete objects, and implicit knowledge about where people or items are typically found (regardless of their stimulus domain). These findings are consistent with a rich neuropsychology literature showing reliable semantic memory deficits for concrete objects following cell loss in ventral aspects of the ATLs (Mion et al., 2010). Furthermore, these findings are also consistent with a recent MVPA study showing that activity patterns within the ventral ATLs represent the conceptual attributes of everyday objects, such as how they are used or where they are typically found (Peelen and Caramazza, 2012).

The sensitivity of the anterior temporal face area in both experiments to object knowledge may be driven by the inclusion of object-sensitive voxels in our ROIs. We chose to use the same ROI size for all face-selective regions as previous work has shown that the number of voxels within an ROI may influence MVPA outcomes (e.g., Eger et al., 2008; Anzellotti et al., 2014). Here, we chose a 9-mm sphere because it provided the best coverage of face-selective activations across individuals, and because it was consistent with previous MVPA studies that have demonstrated sensitivity to facial identity in the ATLs (Kriegeskorte et al., 2007; Goesaert and Op de Beeck, 2013; Anzellotti et al., 2014). Our 9-mm ROIs encompassed an average of 106 voxels in the right ATL after intersection with the brain mask, and extended beyond face-selective cortex in some individuals. Previous work has shown that the vATLs are sensitive to both faces and objects (Barense et al., 2010, 2011; Mundy et al., 2012; McLelland et al., 2014), and further analysis of our own functional localizer data confirmed that spatially adjacent and overlapping voxels respond preferentially to faces and objects (see Supplementary Figure 1). An important avenue for future research will be to investigate, with high-resolution imaging techniques, the degree to which face- and object-selective voxels independently and jointly contribute to the representation of conceptual attributes about faces and objects. The sensitivity of the vATLs to conceptual object properties does not render this area unimportant for face processing; even the FFA shows some sensitivity to non-face objects (Gauthier et al., 1999, 2000; Grill-Spector et al., 2006; McGugin et al., 2012; Yang et al., 2016).
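To illustrate how a fixed-size spherical ROI translates into a voxel count, and why intersecting it with a brain mask reduces that count, the following is a minimal sketch in Python. It is not the analysis code used in this study. The 3 mm isotropic voxel size, the reading of "9 mm" as a radius, the volume dimensions, the peak coordinate, and the toy brain mask are all assumptions made for illustration, and `sphere_mask` is a hypothetical helper.

```python
import numpy as np


def sphere_mask(shape, center, radius_mm, voxel_mm):
    """Return a boolean mask of voxels whose centers lie within
    radius_mm of the center voxel (hypothetical helper)."""
    grids = np.indices(shape)  # voxel index along each of the 3 axes
    sq_dist = np.zeros(shape, dtype=float)
    for axis in range(3):
        # Convert index offsets to millimetres before squaring.
        sq_dist += ((grids[axis] - center[axis]) * voxel_mm[axis]) ** 2
    return sq_dist <= radius_mm ** 2


# All values below are illustrative assumptions, not study parameters.
volume_shape = (20, 20, 20)   # toy volume
peak_voxel = (10, 10, 10)     # stand-in for a face-selective peak
roi = sphere_mask(volume_shape, peak_voxel,
                  radius_mm=9.0, voxel_mm=(3.0, 3.0, 3.0))

# Toy brain mask: pretend the lowest slices fall outside the brain,
# as can happen near the ventral surface of the temporal lobe.
brain_mask = np.ones(volume_shape, dtype=bool)
brain_mask[:, :, :9] = False

roi_in_brain = roi & brain_mask
n_full = int(roi.sum())
n_in_brain = int(roi_in_brain.sum())
print(n_full, n_in_brain)
```

Under these assumptions the full sphere contains 123 voxels, and clipping it against the mask leaves fewer. That is in line with an average count somewhat below the full-sphere value once the brain mask is applied, although the actual acquisition parameters may differ from those assumed here.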

Limitations

In designing our experiment we chose to use naturalistic stimuli that might reasonably be encountered in the real world, and thus applied only minimal stimulus editing by using gray-scale images. Thus, one possibility is that the sensitivity of the anterior temporal face area to the conceptual properties of faces is being driven by perceptual attributes of the stimuli, a contention that is supported by the sensitivity of the early visual ROI to facial category. We think that this is unlikely for several reasons, outlined here. First, all facial stimuli were arbitrarily paired to each occupation label, and we were careful not to group highly similar looking individuals into the same occupation category. Second, if our effects were being driven primarily by visual attributes of the facial stimuli with different occupation labels, we would expect to see higher classification accuracy for facial occupation in EVC than in the vATLs, which was not the case. Sensitivity to facial occupation was higher in the vATLs than in EVC, and did not survive correction for multiple comparisons in EVC. It is possible that the sensitivity of the OFA to facial category is being driven by top-down feedback from higher-order areas (Bar et al., 2006); however, future work is needed to investigate this possibility.

To ensure that the participants in our study adequately learned all stimulus associations, we used a small stimulus set and a short delay between training and the fMRI session. Thus, participants may have recalled episodic details of the study context during the fMRI session in addition to the semantic attributes associated with each stimulus. Although the retrieval of episodic details may have contributed to the results reported here, we believe that the robust sensitivity of the anterior temporal face area to object and face identity and category, as well as to location, which was not explicitly reinforced during the training paradigm, precludes an explanation based solely on episodic memory retrieval. However, including a longer delay period between study and test, or using familiar faces that have a known association with an occupation or a location, would provide a stronger test of our hypothesis. Furthermore, additional work extending our paradigm to larger, more ecologically valid stimulus sets will lend further validity to our results.

Conclusion

To conclude, the present study shows that conceptual knowledge about an individual’s identity and social category is represented in multivoxel activity patterns in the anterior temporal face area. Voxels in the anterior temporal face area also demonstrated sensitivity to conceptual knowledge associated with concrete objects, suggesting that this region may play a role in representing conceptual knowledge about concrete objects more generally. Future research should investigate whether the same population of neurons is tuned to both faces and objects or, as we suspect, different populations. Our results are consistent with a recent model of face processing in which an organized system of face areas extends bilaterally from the inferior occipital gyri to the vATLs, with facial representations becoming increasingly complex and abstracted from low-level perceptual features as they move forward along this network (Collins and Olson, 2014). Our results further suggest that the anterior temporal face region may serve as an interface between face perception and face memory, linking perceptual representations of individual identity with person-specific semantic knowledge.

Ethics Statement

The research was approved by the Temple University Institutional Review Board.

Author Contributions

JAC was responsible for the original experimental design and fMRI data analysis. JEK collected the fMRI data and programmed the experiments. JAC interpreted the results and wrote the article with input from JEK and IRO.

Funding

This work was supported by a Temple University Dissertation Award to JAC and a National Institutes of Health grant to IRO (RO1 MH091113). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Mental Health or the National Institutes of Health.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Jason Chein and Jamie Reilly for valuable advice. In addition, we would like to thank Geoff Aguirre and Marc Coutanche for assistance with technical aspects of this study.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/article/10.3389/fnhum.2016.00189/abstract

References

Anzellotti, S., Fairhall, S. L., and Caramazza, A. (2014). Decoding representations of face identity that are tolerant to rotation. Cereb. Cortex 24, 1988–1995. doi: 10.1093/cercor/bht046

Avidan, G., Tanzer, M., Hadj-Bouziane, F., Liu, N., Ungerleider, L. G., and Behrmann, M. (2013). Selective dissociation between core and extended regions of the face processing network in congenital prosopagnosia. Cereb. Cortex 24, 1565–1578. doi: 10.1093/cercor/bht007

Axelrod, V., and Yovel, G. (2013). The challenge of localizing the anterior temporal face area: a possible solution. Neuroimage 81, 371–380. doi: 10.1016/j.neuroimage.2013.05.015

Axelrod, V., and Yovel, G. (2015). Successful decoding of famous faces in the fusiform face area. PLoS One 10:e0117126. doi: 10.1371/journal.pone.0117126

Barense, M. D., Henson, R. N. A., and Graham, K. S. (2011). Perception and conception: temporal lobe activity during complex discriminations of familiar and novel faces and objects. J. Cogn. Neurosci. 23, 3052–3067. doi: 10.1162/jocn_a_00010

Barense, M. D., Henson, R. N. A., Lee, A. C. H., and Graham, K. S. (2010). Medial temporal lobe activity during complex discrimination of faces, objects, and scenes: effects of viewpoint. Hippocampus 20, 389–401. doi: 10.1002/hipo.20641

Bar, M., Kassam, K. S., Ghuman, A. S., Boshyan, J., Schmid, A. M., Dale, A. M., et al. (2006). Top-down facilitation of visual recognition. Proc. Natl. Acad. Sci. U S A 103, 449–454. doi: 10.1073/pnas.0507062103

Blaizot, X., Mansilla, F., Insausti, A. M., Constans, J. M., Salinas-Alamán, A., Pró-Sistiaga, P., et al. (2010). The human parahippocampal region: I. Temporal pole cytoarchitectonic and MRI correlation. Cereb. Cortex 20, 2198–2212. doi: 10.1093/cercor/bhp289

Brambati, S. M., Benoit, S., Monetta, L., Belleville, S., and Joubert, S. (2010). The role of the left anterior temporal lobe in the semantic processing of famous faces. Neuroimage 53, 674–681. doi: 10.1016/j.neuroimage.2010.06.045

Bruce, V., and Young, A. (1986). Understanding face recognition. Br. J. Psychol. 77, 305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x

Carlin, J. D., Rowe, J. B., Kriegeskorte, N., Thompson, R., and Calder, A. J. (2012). Direction-sensitive codes for observed head turns in human superior temporal sulcus. Cereb. Cortex 22, 735–744. doi: 10.1093/cercor/bhr061

Collins, J. A., and Olson, I. R. (2014). Beyond the FFA: the role of the ventral anterior temporal lobes in face processing. Neuropsychologia 61, 65–79. doi: 10.1016/j.neuropsychologia.2014.06.005

Damasio, A. R., Tranel, D., and Damasio, H. (1990). Face agnosia and the neural substrates of memory. Annu. Rev. Neurosci. 13, 89–109. doi: 10.1146/annurev.ne.13.030190.000513

Devlin, J. T., Russell, R. P., Davis, M. H., Price, C. J., Wilson, J., Moss, H. E., et al. (2000). Susceptibility-induced loss of signal: comparing PET and fMRI on a semantic task. Neuroimage 11, 589–600. doi: 10.1006/nimg.2000.0595

Duchaine, B., and Yovel, G. (2015). A revised neural framework for face processing. Annu. Rev. Vis. Sci. 1, 393–416. doi: 10.1146/annurev-vision-082114-035518

Eifuku, S., Nakata, R., Sugimori, M., Ono, T., and Tamura, R. (2010). Neural correlates of associative face memory in the anterior inferior temporal cortex of monkeys. J. Neurosci. 30, 15085–15096. doi: 10.1523/JNEUROSCI.0471-10.2010

Eger, E., Ashburner, J., Haynes, J. D., Dolan, R. J., and Rees, G. (2008). fMRI activity patterns in human LOC carry information about object exemplars within category. J. Cogn. Neurosci. 20, 356–370. doi: 10.1162/jocn.2008.20019

Epstein, R. A., and Morgan, L. K. (2012). Neural responses to visual scenes reveals inconsistencies between fMRI adaptation and multivoxel pattern analysis. Neuropsychologia 50, 530–543. doi: 10.1016/j.neuropsychologia.2011.09.042

Evans, J. J., Heggs, A. J., Antoun, N., and Hodges, J. R. (1995). Progressive prosopagnosia associated with selective right temporal lobe atrophy. A new syndrome? Brain 118, 1–13. doi: 10.1093/brain/118.1.1

Farzaneh, F., Riederer, S. J., and Pelc, N. J. (1990). Analysis of T2 limitations and off-resonance effects on spatial resolution and artifacts in echo-planar imaging. Magn. Reson. Med. 14, 123–139. doi: 10.1002/mrm.1910140112

Freiwald, W. A., and Tsao, D. Y. (2010). Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science 330, 845–851. doi: 10.1126/science.1194908

Gainotti, G. (2007). Different patterns of famous people recognition disorders in patients with right and left anterior temporal lesions: a systematic review. Neuropsychologia 45, 1591–1607. doi: 10.1016/j.neuropsychologia.2006.12.013

Gainotti, G. (2013). Laterality effects in normal subjects’ recognition of familiar faces, voices and names. Perceptual and representational components. Neuropsychologia 51, 1151–1160. doi: 10.1016/j.neuropsychologia.2013.03.009

Gainotti, G. (2015). Implications of recent findings for current cognitive models of familiar people recognition. Neuropsychologia 77, 279–287. doi: 10.1016/j.neuropsychologia.2015.09.002

Gainotti, G., and Marra, C. (2011). Differential contribution of right and left temporo-occipital and anterior temporal lesions to face recognition disorders. Front. Hum. Neurosci. 5:55. doi: 10.3389/fnhum.2011.00055

Gauthier, I., Tarr, M. J., Anderson, A. W., Skudlarski, P., and Gore, J. C. (1999). Activation of the middle fusiform “face area” increases with expertise in recognizing novel objects. Nat. Neurosci. 2, 568–573. doi: 10.1038/9224

Gauthier, I., Tarr, M. J., Moylan, J., Skudlarski, P., Gore, J. C., and Anderson, A. W. (2000). The fusiform “face area” is part of a network that processes faces at the individual level. J. Cogn. Neurosci. 12, 495–504. doi: 10.1162/089892900562165

Goesaert, E., and Op de Beeck, H. P. (2013). Representations of facial identity information in the ventral visual stream investigated with multivoxel pattern analyses. J. Neurosci. 33, 8549–8558. doi: 10.1523/jneurosci.1829-12.2013

Grill-Spector, K., Sayres, R., and Ress, D. (2006). High-resolution imaging reveals highly selective nonface clusters in the fusiform face area. Nat. Neurosci. 9, 1177–1185. doi: 10.1038/nn1745

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/s1364-6613(00)01482-0

Insausti, R., Amaral, D. G., and Cowan, W. M. (1987). The entorhinal cortex of the monkey: III. Subcortical afferents. J. Comp. Neurol. 264, 396–408. doi: 10.1002/cne.902640307

Kanwisher, N., McDermott, J., and Chun, M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311.

Kanwisher, N., and Yovel, G. (2006). The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B Biol. Sci. 361, 2109–2128. doi: 10.1098/rstb.2006.1934

Kriegeskorte, N., Formisano, E., Sorger, B., and Goebel, R. (2007). Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc. Natl. Acad. Sci. U S A 104, 20600–20605. doi: 10.1073/pnas.0705654104

Martin, C. B., Cowell, R. A., Gribble, P. L., Wright, J., and Köhler, S. (2015). Distributed category-specific recognition memory signals in human perirhinal cortex. Hippocampus 26, 423–436. doi: 10.1002/hipo.22531

Martin, C. B., McLean, D. A., O’Neil, E. B., and Köhler, S. (2013). Distinct familiarity-based response patterns for faces and buildings in perirhinal and parahippocampal cortex. J. Neurosci. 33, 10915–10923. doi: 10.1523/JNEUROSCI.0126-13.2013

McGugin, R. W., Gatenby, J. C., Gore, J. C., and Gauthier, I. (2012). High-resolution imaging of expertise reveals reliable object selectivity in the fusiform face area related to perceptual performance. Proc. Natl. Acad. Sci. U S A 109, 17063–17068. doi: 10.1073/pnas.1116333109

McLelland, V. C., Chan, D., Ferber, S., and Barense, M. D. (2014). Stimulus familiarity modulates functional connectivity of the perirhinal cortex and anterior hippocampus during visual discrimination of faces and objects. Front. Hum. Neurosci. 8:117. doi: 10.3389/fnhum.2014.00117

Mion, M., Patterson, K., Acosta-Cabronero, J., Pengas, G., Izquierdo-Garcia, D., Hong, Y. T., et al. (2010). What the left and right anterior fusiform gyri tell us about semantic memory. Brain 133, 3256–3268. doi: 10.1093/brain/awq272

Morán, M. A., Mufson, E. J., and Mesulam, M. M. (1987). Neural inputs into the temporopolar cortex of the rhesus monkey. J. Comp. Neurol. 256, 88–103. doi: 10.1002/cne.902560108

Mundy, M. E., Downing, P. E., and Graham, K. S. (2012). Extrastriate cortex and medial temporal lobe regions respond differentially to visual feature overlap within preferred stimulus category. Neuropsychologia 50, 3053–3061. doi: 10.1016/j.neuropsychologia.2012.07.006

Murphy, K., Bodurka, J., and Bandettini, P. A. (2007). How long to scan? The relationship between fMRI temporal signal to noise ratio and necessary scan duration. Neuroimage 34, 565–574. doi: 10.1016/j.neuroimage.2006.09.032

Nasr, S., and Tootell, R. B. H. (2012). Role of fusiform and anterior temporal cortical areas in facial recognition. Neuroimage 63, 1743–1753. doi: 10.1016/j.neuroimage.2012.08.031

Nestor, A., Plaut, D. C., and Behrmann, M. (2011). Unraveling the distributed neural code of facial identity through spatiotemporal pattern analysis. Proc. Natl. Acad. Sci. U S A 108, 9998–10003. doi: 10.1073/pnas.1102433108

Nieuwenhuis, I. L. C., Takashima, A., Oostenveld, R., McNaughton, B. L., Fernández, G., and Jensen, O. (2012). The neocortical network representing associative memory reorganizes with time in a process engaging the anterior temporal lobe. Cereb. Cortex 22, 2622–2633. doi: 10.1093/cercor/bhr338

O’Neil, E., Hutchison, R., McLean, D., and Köhler, S. (2014). Resting-state fMRI reveals functional connectivity between face-selective perirhinal cortex and the fusiform face area related to face inversion. Neuroimage 92, 349–355. doi: 10.1016/j.neuroimage.2014.02.005

Olman, C. A., Davachi, L., and Inati, S. (2009). Distortion and signal loss in medial temporal lobe. PLoS One 4:e8160. doi: 10.1371/journal.pone.0008160

Olson, I. R., Ezzyat, Y., Plotzker, A., and Chatterjee, A. (2015). The end point of the ventral visual stream: face and non-face perceptual deficits following unilateral anterior temporal lobe damage. Neurocase 21, 554–562. doi: 10.1080/13554794.2014.959025

Olson, I. R., Plotzker, A., and Ezzyat, Y. (2007). The enigmatic temporal pole: a review of findings on social and emotional processing. Brain 130, 1718–1731. doi: 10.1093/brain/awm052

Peelen, M. V., and Caramazza, A. (2012). Conceptual object representations in human anterior temporal cortex. J. Neurosci. 32, 15728–15736. doi: 10.1523/JNEUROSCI.1953-12.2012

Pinsk, M. A., Arcaro, M., Weiner, K. S., Kalkus, J. F., Inati, S. J., Gross, C. G., et al. (2009). Neural representations of faces and body parts in macaque and human cortex: a comparative fMRI study. J. Neurophysiol. 101, 2581–2600. doi: 10.1152/jn.91198.2008

Pitcher, D., Walsh, V., Yovel, G., and Duchaine, B. (2007). TMS evidence for the involvement of the right occipital face area in early face processing. Curr. Biol. 17, 1568–1573. doi: 10.1016/j.cub.2007.07.063

Rajimehr, R., Young, J. C., and Tootell, R. B. H. (2009). An anterior temporal face patch in human cortex, predicted by macaque maps. Proc. Natl. Acad. Sci. U S A 106, 1995–2000. doi: 10.1073/pnas.0807304106

Ross, L. A., and Olson, I. R. (2012). What’s unique about unique entities? An fMRI investigation of the semantics of famous faces and landmarks. Cereb. Cortex 22, 2005–2015. doi: 10.1093/cercor/bhr274

Rossion, B., Caldara, R., Seghier, M., Schuller, A. M., Lazeyras, F., and Mayer, E. (2003). A network of occipito-temporal face-sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain 126, 2381–2395. doi: 10.1093/brain/awg241

Sergent, J., Ohta, S., and MacDonald, B. (1992). Functional neuroanatomy of face and object processing. A positron emission tomography study. Brain 115, 15–36. doi: 10.1093/brain/115.1.15

Snowden, J. S., Thompson, J. C., and Neary, D. (2004). Knowledge of famous faces and names in semantic dementia. Brain 127, 860–872. doi: 10.1093/brain/awh099

Sugiura, M., Kawashima, R., Nakamura, K., Sato, N., Nakamura, A., and Kato, T. (2001). Activation reduction in anterior temporal cortices during repeated recognition of faces of personal acquaintances. Neuroimage 13, 877–890. doi: 10.1006/nimg.2001.0747

Sugiura, M., Mano, Y., Sasaki, A., and Sadato, N. (2011). Beyond the memory mechanism: person-selective and nonselective processes in recognition of personally familiar faces. J. Cogn. Neurosci. 23, 699–715. doi: 10.1162/jocn.2010.21469

Suzuki, W. A., and Amaral, D. G. (1994). Perirhinal and parahippocampal cortices of the macaque monkey: cortical afferents. J. Comp. Neurol. 350, 497–533. doi: 10.1002/cne.903500402

Tsao, D. Y., Moeller, S., and Freiwald, W. A. (2008). Comparing face patch systems in macaques and humans. Proc. Natl. Acad. Sci. U S A 105, 19514–19519. doi: 10.1073/pnas.0809662105

Tsukiura, T., Fujii, T., Fukatsu, R., Okuda, J., Otsuki, T., Umetsu, A., et al. (2002). Neural basis of people’s name retrieval: evidences from brain-damaged patients and fMRI. J. Cogn. Neurosci. 14, 922–937. doi: 10.1162/089892902760191144

Tsukiura, T., Mano, Y., Sekiguchi, A., Yomogida, Y., Hoshi, K., Kambara, T., et al. (2010). Dissociable roles of the anterior temporal regions in successful encoding of memory for person identity information. J. Cogn. Neurosci. 22, 2226–2237. doi: 10.1162/jocn.2009.21349

Tsukiura, T., Mochizuki-Kawai, H., and Fujii, T. (2006). Dissociable roles of the bilateral anterior temporal lobe in face-name associations: an event-related fMRI study. Neuroimage 30, 617–626. doi: 10.1016/j.neuroimage.2005.09.043

Tsukiura, T., Suzuki, C., Shigemune, Y., and Mochizuki-Kawai, H. (2008). Differential contributions of the anterior temporal and medial temporal lobe to the retrieval of memory for person identity information. Hum. Brain Mapp. 29, 1343–1354. doi: 10.1002/hbm.20469

van den Hurk, J., Gentile, F., and Jansma, B. M. (2011). What’s behind a face: person context coding in fusiform face area as revealed by multivoxel pattern analysis. Cereb. Cortex 21, 2893–2899. doi: 10.1093/cercor/bhr093

Visser, M., Jefferies, E., and Lambon Ralph, M. A. (2010). Semantic processing in the anterior temporal lobes: a meta-analysis of the functional neuroimaging literature. J. Cogn. Neurosci. 22, 1083–1094. doi: 10.1162/jocn.2009.21309

Von Der Heide, R. J., Skipper, L. M., and Olson, I. R. (2013). Anterior temporal face patches: a meta-analysis and empirical study. Front. Hum. Neurosci. 7:17. doi: 10.3389/fnhum.2013.00017

Keywords: face processing, anterior temporal lobes, semantic memory, perception, multivoxel pattern analysis

Citation: Collins JA, Koski JE and Olson IR (2016) More Than Meets the Eye: The Merging of Perceptual and Conceptual Knowledge in the Anterior Temporal Face Area. Front. Hum. Neurosci. 10:189. doi: 10.3389/fnhum.2016.00189

Received: 16 December 2015; Accepted: 14 April 2016;

Published: 02 May 2016.

Edited by:

Nathalie Tzourio-Mazoyer, Centre National de la Recherche Scientifique CEA Université Bordeaux, France

Reviewed by:

Rik Vandenberghe, Katholieke Universiteit Leuven, Belgium

Stefan Kohler, Western University, Canada

Copyright © 2016 Collins, Koski and Olson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution and reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jessica A. Collins, jcollins21@mgh.harvard.edu