Abstract

Recently, the use of artificial intelligence (AI) in drug discovery has received much attention because it significantly reduces the time and cost of developing new drugs. As deep learning (DL) technology advances and drug-related data grow, DL-based approaches are increasingly used in all stages of drug development. This paper therefore presents a systematic literature review (SLR) that integrates recent DL technologies and applications in drug discovery, including drug–target interactions (DTIs), drug–drug similarity interactions (DDIs), drug sensitivity and responsiveness, and drug side-effect predictions. We review more than 300 articles published between 2000 and 2022. The benchmark data sets, the databases, and the evaluation measures are also presented. In addition, this paper provides an overview of how explainable AI (XAI) supports drug discovery problems. Drug dosing optimization and success stories are discussed as well. Finally, digital twinning (DT) and open issues are suggested as future research challenges for drug discovery problems. Challenges to be addressed and future research directions are identified, and an extensive bibliography is included.

1 Introduction

Drug discovery is the study of how various drugs interact with the body and how a medication must act on the body to have a therapeutic effect. Drug discovery strategies comprise different approaches, such as physiology-based and target-based approaches, and rely on information about the ligand and the target. In this regard, we focus on certain topics, especially drug (ligand)–target interactions, drug sensitivity and response, drug–drug interaction, and drug–drug similarity. For certain diseases such as cancer, or in pandemic situations such as COVID-19, a combination of more than one drug is required to improve prognosis and interfere with pathogenesis. Despite all the recent advances in pharmaceuticals, medication development is still a labor-intensive and costly process. As a result, several computational algorithms have been proposed to speed up the drug discovery process (Betsabeh and Mansoor 2021).

As DL models progress and drug data sets grow, new DL-based approaches are appearing at every stage of the drug development process (Kim et al. 2021). In addition, with the development of DL approaches, large pharmaceutical corporations have migrated toward AI, abandoning outmoded, ineffective procedures to increase the benefit to patients while also increasing their own profits (Nag et al. 2022). Despite the impressive performance of DL, drug discovery remains a critical and challenging task, and researchers still have room to develop algorithms that improve drug discovery performance. Therefore, this paper presents an SLR that integrates the recent DL technologies and applications in drug discovery. This review is the first to incorporate the recent DL models and applications for the different categories of drug discovery problems, such as DTIs, DDIs similarity, drug sensitivity and response, and drug side-effect predictions, and to present new challenging topics such as XAI and DT and how they help advance drug discovery. In addition, the paper provides researchers with the most frequently used datasets in the field.

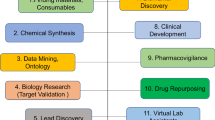

The paper is developed based on six building blocks as shown in Fig. 1. More than 300 articles are presented in this paper, and they are divided across these building blocks. The papers are selected using the following criteria:

- Papers published from 2000 to 2022.

- Papers published by IEEE, ACM, Elsevier, and Springer are given higher priority.

The following analytical questions are discussed and answered in the paper:

- AQ1: What DL algorithms have been used to predict the different categories of drug discovery problems?

- AQ2: Which deep learning methods are mostly used in drug dosing optimization?

- AQ3: Are there any success stories about drug discovery and DL?

- AQ4: What about the newest technologies such as XAI and DT in drug discovery?

- AQ5: What are the future and open works related to drug discovery and DL?

The remainder of this review paper is organized as follows: Sect. 2 presents a review of related studies; Sect. 3 gives an overview of the various DL techniques. Section 4 presents the organization of DL applications in drug discovery problems by explaining each drug discovery problem category and reviewing the DL techniques used. Section 5 discusses the benchmark data sets and databases that have been employed in the drug development process. Section 6 presents the evaluation metrics used for each drug discovery problem category. Drug dose optimization, success stories, and XAI are introduced in Sects. 7, 8, and 9, respectively. DT and open problems are suggested as future research challenges in Sects. 10 and 11. Section 12 presents a discussion of the analytical questions. Finally, Sect. 13 concludes the paper.

2 Review of related studies

Although drug discovery is a large field with several research categories, there are few review studies of the field, and each related study has focused on only one research category, such as DL applications for DTIs. This section reviews these related studies; a summary is presented in Table 1.

Kim et al. (2021) presented a survey of DL models for the prediction of drug–target interaction (DTI) and new medication development. They start by providing a thorough summary of the many representations of drugs and proteins, DL applications, and widely used benchmark data sets for training and testing models. A strength of this study is that it identifies several obstacles to the future of de novo drug design and DL-based DTI prediction. However, its major drawback is that it did not consider the latest technologies in DL applications for DTIs, such as XAI and DT.

Rifaioglu et al. (2019) presented recent ML applications in virtual screening (VS), together with the techniques, tools, databases, and resources used to create the models. They outline what VS is and how crucial it is to the process of finding new drugs. As strengths, the study highlighted DL technologies that are available as open-access programming libraries, tool kits, and frameworks that can be employed in future computational drug discovery (including DTI prediction), and it provided examples of VS investigations that led to the discovery of novel bioactive compounds and drugs. However, the authors did not consider drug dose optimization in their literature review.

Sachdev and Gupta (2019) presented the various feature-based chemogenomic methods for DTI prediction. They offer a thorough, current review of the different feature-based methodologies and describe relevant datasets, tools, methods for deriving drug or target features, and evaluation measures. Although the study is regarded as the first integrated review to concentrate solely on feature-based DTI techniques, it did not consider the latest technologies in DL applications for DTIs, such as XAI and DT.

3 Deep learning (DL) techniques

Machine learning (ML) has lately gained favor in research across many applications, such as spam detection, video recommendation, image classification, and multimedia concept retrieval. Deep learning (DL) is one of the most extensively used ML methods in these applications. The ongoing appearance of new DL studies is due to the rapid and unpredictable growth in the ability to acquire data and the remarkable progress made in hardware technologies. DL is based on conventional neural networks but outperforms them significantly. Furthermore, DL uses transformations and graph technology to build multi-layer learning models (Kim et al. 2021). ML and DL approaches have changed the way problems are tackled. DL models come in various shapes and sizes and can effectively resolve problems that are too complex for standard approaches. This section reviews the various DL models (Sarker 2021).

3.1 Classic neural networks

As shown in Fig. 2, multi-layer perceptrons (MLPs) are the networks most commonly identified with fully connected neural networks. The input data are converted into simple binary inputs (Mukhamediev et al. 2021). This paradigm allows both linear and nonlinear functions to be included. The linear function is a single line that multiplies its inputs by a constant. The sigmoid curve, hyperbolic tangent, and rectified linear unit are three common nonlinear functions. This model is best suited to classification and regression problems with real-valued data, and it offers a flexible model of any kind.
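To make the role of the linear and nonlinear functions concrete, the following is a minimal NumPy sketch of a fully connected forward pass. The layer widths, random weights, and the helper name `mlp_forward` are illustrative assumptions, not taken from any cited work.

```python
import numpy as np

def sigmoid(z):
    """Sigmoid curve: squashes values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Rectified linear unit: max(0, z) element-wise."""
    return np.maximum(0.0, z)

def mlp_forward(x, weights, biases, activation=np.tanh):
    """Hidden layers apply a linear map followed by `activation`;
    the output layer is left linear (e.g. for regression)."""
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        h = activation(h @ W + b)
    return h @ weights[-1] + biases[-1]

rng = np.random.default_rng(0)
sizes = [4, 8, 1]  # hypothetical layer widths: 4 inputs, 8 hidden units, 1 output
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]

x = rng.normal(size=(3, 4))  # a batch of 3 real-valued input vectors
y = mlp_forward(x, weights, biases, activation=relu)
```

Swapping `relu` for `sigmoid` or `np.tanh` changes only the nonlinearity; the linear part of each layer is unchanged.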

3.2 Convolutional neural networks (CNN)

As shown in Fig. 3, the classic convolutional neural network (CNN) model is an advanced, high-potential variant of the artificial neural network (ANN) that was developed to manage escalating levels of complexity, as well as data preprocessing and compilation. It is based on how the neurons in an animal's visual cortex are arranged (Amashita et al. 2018). CNNs are among the most flexible algorithms for processing both image and non-image data. A CNN is organized in four parts:

- An input layer, usually a 2D array of neurons, for analyzing basic visual data such as picture pixels.

- A one-dimensional output layer of neurons, which in some CNNs processes the images on the inputs through the distributed convolutional layers connected to it.

- A third layer, called the sampling layer, which restricts the number of neurons that take part in the corresponding network layers.

- One or more fully connected layers that join the sampling layer to the output layer.

This network design can help extract relevant visual data in pieces or smaller units. In a CNN, each neuron is responsible for a group of neurons from the preceding layer.

After the input data have been fed into the convolutional model, the CNN is constructed in four steps:

- Convolution: produces feature maps from the supplied data, to which a function is then applied.

- Max-pooling: helps the CNN detect an image under given modifications.

- Flattening: the data are flattened so that the CNN can analyze them.

- Full connection: often described as a "hidden layer", which compiles the loss function for the model.
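The four steps can be sketched in a few lines of NumPy. This is an illustrative toy under stated assumptions: the 6×6 input, the 2×2 kernel, and the constant dense weights are made up, and the "convolution" is the cross-correlation used by most DL libraries.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding (cross-correlation)."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(fmap, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    trimmed = fmap[:h * size, :w * size]  # drop rows/cols that do not fill a window
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

image = np.arange(36, dtype=float).reshape(6, 6)   # hypothetical 6x6 input
kernel = np.array([[-1.0, 0.0], [0.0, 1.0]])       # hypothetical diagonal-difference filter

feature_map = np.maximum(0.0, conv2d_valid(image, kernel))  # 1. convolution + ReLU
pooled = max_pool(feature_map)                              # 2. max-pooling
flat = pooled.ravel()                                       # 3. flattening
W = np.full((flat.size, 2), 0.1)                            # 4. full connection
b = np.zeros(2)
logits = flat @ W + b
```

In a trained network the kernel and dense weights would be learned from a loss on `logits`; here they are fixed so that each stage can be inspected by hand.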

CNNs are capable of tasks such as image recognition, image analysis, image segmentation, video analysis, and natural language processing (NLP) (Chauhan et al. 2018; Tajbakhsh et al. 2016; Mohamed et al. 2020; Zhang et al. 2018).

3.3 Recurrent neural networks (RNNs)

RNNs were first created to help with sequence prediction. These networks rely on data streams of varying lengths as inputs. The RNN uses the knowledge of its previous state as an input value for the current prediction. As a result, it can provide the network with a form of short-term memory (Tehseen et al. 2019). The long short-term memory (LSTM) method shown in Fig. 4, for example, is renowned for its adaptability.

LSTMs, which are useful for predicting data in time sequences using memory and which have three gates (input, output, and forget), and gated RNNs, which are likewise helpful for temporal sequence prediction using memory-based data, are two forms of RNN design that aid in the study of problems. Both types of algorithms can be used to address a range of issues, including image classification (Chandra and Sharma 2017), sentiment analysis (Failed 2018), video classification (Abramovich et al. 2018), language translation (Hermanto et al. 2015), and more.
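As a rough illustration of how the input, output, and forget gates carry state from one time step to the next, the sketch below implements a single standard LSTM step in NumPy. The dimensions, random weights, and the stacked-weight layout are assumptions chosen for brevity.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.
    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the order [input gate, forget gate, output gate, candidate]."""
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old memory to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much memory to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate cell content
    c = f * c_prev + i * g       # updated cell state (the memory)
    h = o * np.tanh(c)           # updated hidden state
    return h, c

rng = np.random.default_rng(0)
D, H = 3, 5  # hypothetical input and hidden sizes
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x in rng.normal(size=(7, D)):  # a sequence of 7 time steps
    h, c = lstm_step(x, h, c, W, U, b)
```

The previous state `(h, c)` is fed back at every step, which is what lets the network use earlier inputs when processing the current one.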

3.4 Generative adversarial networks (GANs)

As shown in Fig. 5, a GAN combines two DL neural networks, a Generator and a Discriminator. The Generator network creates fake data, while the Discriminator helps to distinguish between real and fake data (Alankrita et al. 2021).

The two networks compete with one another: the Discriminator keeps distinguishing between real and fake data, and the Generator keeps making fake data look more like real data. For example, if a picture library is needed, the Generator network produces simulated data that resemble authentic photos; a deconvolutional neural network is created for this purpose. An image-detector network is then used to discriminate between fake and real images. This competition eventually improves the network's performance. GANs can be employed to create images and text, enhance images, and discover new drugs.

3.5 Self-organizing maps (SOM)

As shown in Fig. 6, self-organizing maps (SOMs) use unsupervised data to reduce the number of random variables in a model (Kohonen 1990). Given that every synapse is linked to both its input and output nodes, the output dimension in this DL approach is set as a two-dimensional model. In a SOM, each data point competes for representation in the model, and the weights of the closest nodes, the best matching units (BMUs), are adjusted. The amount by which the weights change depends on how close a node is to the BMU. Because weights are a node attribute in themselves, the value represents the node's position in the network. SOMs are well suited to evaluating datasets, or to exploratory projects, that lack a Y-axis (target) value.
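The BMU search and the distance-dependent weight update can be sketched as a single training step. This is a minimal sketch assuming a Gaussian neighbourhood function and fixed learning rate; the grid size, `lr`, and `sigma` values are illustrative only.

```python
import numpy as np

def som_train_step(weights, x, lr=0.5, sigma=1.0):
    """Update a 2D SOM in place for one sample.
    weights: (rows, cols, dim) grid of node weight vectors; x: (dim,) sample."""
    rows, cols, _ = weights.shape
    # Competition: the node whose weights are closest to x is the BMU.
    dists = np.linalg.norm(weights - x, axis=2)
    bmu = np.unravel_index(np.argmin(dists), dists.shape)
    # Cooperation: nodes near the BMU on the grid are updated more strongly.
    rr, cc = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    grid_dist2 = (rr - bmu[0]) ** 2 + (cc - bmu[1]) ** 2
    influence = np.exp(-grid_dist2 / (2.0 * sigma ** 2))
    # Adaptation: move each node toward x in proportion to its influence.
    weights += lr * influence[..., None] * (x - weights)
    return bmu

rng = np.random.default_rng(0)
weights = rng.random((4, 4, 3))  # hypothetical 4x4 map over 3-dimensional data
x = np.array([0.2, 0.5, 0.9])
bmu = som_train_step(weights, x)
```

In practice `lr` and `sigma` are usually decayed over many passes through the data; that schedule is omitted here.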

3.6 Boltzmann machines

As shown in Fig. 7, the nodes are connected in a circular pattern because this network model has no set direction. Because of this distinctive property, this DL technique is used to generate model parameters. Unlike all the preceding deterministic network models, the Boltzmann machine model is stochastic. It can monitor systems, create a binary recommendation platform, and analyze specific datasets (Hinton 2011).

The architecture of the Boltzmann machine is a two-layer neural network. The first is the visible, or input, layer, and the second is the hidden layer. Each consists of several neuron-like nodes that carry out computations. Nodes are connected across layers but not to other nodes in the same layer. As a result, there is no intra-layer communication, which is one of the restrictions of this architecture. When data are supplied to these nodes, they are transformed into a graph; the nodes process them and learn all the parameters, patterns, and relations among the data before deciding whether to transmit them. For this reason, the Boltzmann machine is often regarded as an unsupervised DL model.

3.7 Autoencoders

As shown in Fig. 8, the autoencoder, one of the most popular DL algorithms, automatically encodes its inputs, applies an activation function, and decodes the result at the end. Because of the bottleneck, fewer categories of data are produced, and the inherent data structures are used to their fullest extent (Zhai et al. 2018).

There are various types of autoencoders:

- Sparse: used when the hidden layer is larger than the input layer; a regularization technique is applied to reduce overfitting. It constrains the loss function and prevents the autoencoder from using all its nodes simultaneously.

- Denoising: a randomly chosen subset of the inputs is set to 0.

- Contractive: when the hidden layer is larger than the input layer, a penalty term is added to the loss function to avoid overfitting and data duplication.

- Stacked: when another hidden layer is added to an autoencoder, the result is two stages of encoding followed by one stage of decoding.

Feature identification, building a strong recommendation model, and adding features to enormous datasets are some of the problems autoencoders can solve.
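The encode, activate, decode pipeline and the denoising variant can be sketched as follows. This is a forward pass only, with untrained random weights; the layer sizes and the 30% masking probability are assumptions, and a real model would minimize `loss` by gradient descent.

```python
import numpy as np

def corrupt(x, drop_prob, rng):
    """Masking noise for a denoising autoencoder: randomly set inputs to 0."""
    mask = rng.random(x.shape) >= drop_prob
    return x * mask

def autoencode(x, W_enc, b_enc, W_dec, b_dec):
    """Encode to a narrow code (the bottleneck), then decode back."""
    code = np.tanh(x @ W_enc + b_enc)  # encoder followed by an activation function
    recon = code @ W_dec + b_dec       # linear decoder
    return code, recon

rng = np.random.default_rng(0)
n_in, n_hidden = 8, 3  # hypothetical sizes: the code is narrower than the input
W_enc = rng.normal(scale=0.1, size=(n_in, n_hidden))
b_enc = np.zeros(n_hidden)
W_dec = rng.normal(scale=0.1, size=(n_hidden, n_in))
b_dec = np.zeros(n_in)

x = rng.random((5, n_in))            # 5 clean samples
x_noisy = corrupt(x, 0.3, rng)       # corrupted copies fed to the encoder
code, recon = autoencode(x_noisy, W_enc, b_enc, W_dec, b_dec)
loss = np.mean((recon - x) ** 2)     # reconstruction is compared with the CLEAN input
```

Scoring the reconstruction against the clean `x` rather than `x_noisy` is what pushes a denoising autoencoder to learn features that are robust to missing inputs.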

4 Organization of DL applications in drug discovery problems

The development of safe and effective treatments for humans is the primary goal of drug discovery (Kim et al. 2021). Drug discovery is the problem of finding suitable drugs to treat a disease (i.e., to act on a target protein), which relies on several interactions. This paper divides drug discovery problems into four main categories, as presented in Fig. 9: drug–target interactions, drug–drug similarity, drug combination side effects, and drug sensitivity and response predictions. The following subsections review the DL literature on these problems, and some of the investigated articles related to each category are summarized in Table 2.

4.1 Drug–target interactions prediction using DL

Drug repurposing attempts to uncover new uses for drugs that are already approved and on the market. It has attracted much attention because it takes less time, costs less money, and has a greater success rate than traditional de novo drug development (Thafar et al. 2022). The discovery of drug–target interactions is the initial step in creating new medications, as well as one of the most crucial aspects of drug screening and drug-guided synthesis (Wang et al. 2020a). Exploring the link between candidate drugs and targets can help researchers better understand the pathophysiology of targets at the drug level, which supports early detection of the disease, treatment prognosis, and drug design. This task is known as drug–target interaction (DTI) prediction (Lian et al. 2021). The success of drug repositioning relies largely on DTI prediction because it reduces the number of potential drug candidates for specific targets. Traditional computational methods follow two basic strategies: approaches based on molecular docking and approaches based on drugs. When the 3D structures of target proteins are not available, the effectiveness of molecular docking is limited. When there are only a few known binding molecules for a target, drug-based techniques typically produce poor predictions. DL technologies overcome the restrictions imposed by the high-dimensional structure of drugs and target proteins by using structure-free approaches that need neither 3D structural data nor docking for DTI prediction. Therefore, this section provides a recent comprehensive review of DL-based DTI prediction models (Chen et al. 2012).

As shown in Fig. 10, there are known interactions (solid lines) and unknown interactions (dashed lines) between diseases (proteins) and drugs. DTI prediction forecasts the unknown interactions, that is, which diseases (or target proteins) a new drug might treat. According to their input features, we divide the latest DL models used to predict DTIs into three categories: drug-based models, structure (graph)-based models, and drug–protein(disease)-based models.

4.1.1 Drug-based models

Figure 10a shows drug-based models, which assume that a potential drug will be similar to known drugs for the target proteins. They compute the DTI using the drug information known for the target. These models use similarity search strategies, which postulate that structurally similar substances have similar biological functions (Thafar et al. 2019; Matsuzaka and Uesawa 2019). Such methods have been used for decades to select compounds from vast compound libraries, either through massive computational jobs or through manual calculation. DL technology can shorten these time-consuming procedures and manual operations, and deep neural network models are gradually narrowing the gap between in silico prediction and empirical study.
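The similarity search idea behind drug-based models can be illustrated with the Tanimoto (Jaccard) coefficient, a widely used measure for comparing binary molecular fingerprints. In this sketch the fingerprints are made-up sets of "on" bit positions and the drug names are hypothetical; a real pipeline would derive fingerprints from chemical structures with a cheminformatics toolkit.

```python
def tanimoto(a, b):
    """Tanimoto similarity between two sets of 'on' fingerprint bits."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def rank_by_similarity(query, library):
    """Rank library compounds by decreasing similarity to the query."""
    scores = {name: tanimoto(query, fp) for name, fp in library.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical fingerprints of drugs already known to bind the target.
known_actives = {
    "drug_A": frozenset({1, 4, 7, 9}),
    "drug_B": frozenset({2, 4, 8}),
    "drug_C": frozenset({1, 4, 7}),
}
candidate = frozenset({1, 4, 7, 10})  # hypothetical new compound

ranking = rank_by_similarity(candidate, known_actives)
# The candidate shares 3 of 4 distinct bits with drug_C (0.75),
# 3 of 5 with drug_A (0.6), and 1 of 6 with drug_B.
```

Under the similarity assumption, a high score against known actives is taken as evidence that the candidate may interact with the same target.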

Thanks to benchmark packages such as MoleculeNet (Wu et al. 2018) and DeepChem, researchers can now use deep neural networks to analyze drugs and predict drug-related features, such as bioactivities and physicochemical properties. As a result, basic neural networks such as MLP and CNN have been used in numerous drug-based DL approaches (Zeng et al. 2020; Yang et al. 2019; Liu et al. 2017). ADMET investigations often focused on the representation power of molecular descriptors rather than on the model itself (Zhai et al. 2018; Liu et al. 2017; Kim et al. 2016; Tang et al. 2014). Hirohara et al. trained a CNN model on SMILES strings and then used the learned features to discover motifs with significant structures, such as protein-binding sites or unidentified functional groups (Hirohara et al. 2018). Wenzel et al. (2019) employed atom pairs and pharmacophoric donor–acceptor pairs as descriptors in multi-task deep neural networks to predict microsomal metabolic liability. Gao et al. (2019) compared six kinds of 2D fingerprints for predicting the affinity between proteins and drugs using ML methods such as RF, single-task DNN, and multi-task DNN models. Matsuzaka and Uesawa (2019) used 2D pictures of 3D chemical compounds to train a CNN model to predict constitutive androstane receptor agonists. They optimized performance using snapshots of a 3D ball-and-stick model taken at various angles or coordinates, and the method outperformed seven common prediction methods based on 3D chemical structure.

Since the development of the graph convolutional network (GCN), drug-related GCN models have produced graph representations of molecules that incorporate information on chemical structure by aggregating the features of adjacent atoms (Gilmer et al. 2017).
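The idea of adding up the features of adjacent atoms can be sketched as a single aggregation step over a molecular graph. This is a simplified, unnormalized sum-aggregation layer rather than any specific published GCN variant; the three-atom toy molecule, one-hot atom features, and identity weight matrix are assumptions.

```python
import numpy as np

def message_passing_step(adjacency, features, weight):
    """Each atom sums its own and its neighbours' features,
    then applies a shared linear map and a ReLU."""
    a_hat = adjacency + np.eye(adjacency.shape[0])  # add self-loops
    aggregated = a_hat @ features                   # sum over adjacent atoms
    return np.maximum(0.0, aggregated @ weight)

# Toy chain of three heavy atoms (C-C-O); features are one-hot [is_C, is_O].
adjacency = np.array([[0, 1, 0],
                      [1, 0, 1],
                      [0, 1, 0]], dtype=float)
features = np.array([[1, 0],
                     [1, 0],
                     [0, 1]], dtype=float)
weight = np.eye(2)  # hypothetical; learned during training in a real model

hidden = message_passing_step(adjacency, features, weight)
graph_embedding = hidden.sum(axis=0)  # size-independent readout for the whole molecule
```

Because the readout sums over atoms, molecules with different numbers of atoms map to vectors of the same length, which is what allows a downstream predictor to consume them.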

GCNs have been employed as 3D descriptors instead of SMILES strings in many studies, and these learned descriptors have been found to outperform standard descriptors in prediction tests and to be easier to interpret (Shin et al. 2019; Ozturk et al. 2018; Yu et al. 2019). Chemi-net employed GCN models to represent molecules and compared the performance of single-task and multi-task DNNs on the authors' own QSAR datasets (Liu et al. 2019a). Yang et al. (2019) introduced a more advanced model, the directed message passing neural network (D-MPNN), which uses a directed message-passing paradigm. They tested their approach on 19 publicly available and 16 privately held datasets and found that, in most cases, the D-MPNN models outperformed previous models. On two datasets, the models underperformed and were not as robust as typical 3D descriptors when the sample was small or unbalanced. The D-MPNN model was then employed by another research group to correctly predict an antibiotic named halicin, which demonstrated bactericidal effects in mouse models (Stokes et al. 2020). This was the first case in which an antibiotic was found by using DL methods to explore a large-scale chemical space that current experimental methodologies cannot afford to cover. The application of attention-based graph neural networks is another interesting contemporary method (Sun et al. 2020a). Because edge properties can alter a molecule's graph representation, edge weights and node features can be learned jointly. Accordingly, Shang et al. proposed a multi-relational GCN with edge attention (Shang et al. 2018). They built a dictionary of attention weights for each edge. Because the dictionary is shared across the molecule, the approach can handle a wide range of input sizes.

On the Tox21 and HIV benchmark datasets, they found that this model performed better than the random forest model. The model can therefore effectively learn pre-aligned features from the inherent properties of the molecular graph. Withnall et al. (2020) extended the MPNN model to the attention MPNN (AMPNN), in which the message-passing step uses an attention-weighted summation. Moreover, they extended the D-MPNN model with the same attention mechanism as the AMPNN and named the result the edge memory neural network (EMNN). Although it is computationally more intensive than other models, this model fared better than others on the maximum unbiased validation (MUV) benchmark with uniformly missing information.

4.1.2 Structure (graph)-based models

Unlike the drug-based models, the structure-based models in Fig. 10b require both protein target and drug information. Typical molecular docking simulation methods aim to predict the geometrically feasible binding of drugs and proteins with known tertiary structure. Both the drug and the target can be expressed as sequences, of atoms and of amino acid residues respectively. Sequence-based descriptors were chosen because DL approaches can be applied to them right away with minimal preprocessing of the input data.
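As an illustration of how little preprocessing sequence-based descriptors need, the sketch below turns SMILES strings and amino acid sequences into fixed-length integer arrays. It is a character-level simplification: multi-character SMILES tokens such as "Cl" or "Br" would be split, unseen characters share index 0 with padding, and the example strings, vocabularies, and maximum lengths are all assumptions.

```python
import numpy as np

def build_vocab(sequences):
    """Map each distinct character to a positive integer; 0 is reserved for padding."""
    chars = sorted({ch for seq in sequences for ch in seq})
    return {ch: idx + 1 for idx, ch in enumerate(chars)}

def encode(seq, vocab, max_len):
    """Label-encode a sequence, truncating or zero-padding to max_len."""
    ids = [vocab.get(ch, 0) for ch in seq[:max_len]]
    return np.array(ids + [0] * (max_len - len(ids)), dtype=np.int64)

smiles = ["CCO", "c1ccccc1"]   # ethanol and benzene
proteins = ["MKT", "MKVLA"]    # hypothetical short residue sequences

smiles_vocab = build_vocab(smiles)
protein_vocab = build_vocab(proteins)

drug_input = np.stack([encode(s, smiles_vocab, max_len=10) for s in smiles])
target_input = np.stack([encode(p, protein_vocab, max_len=6) for p in proteins])
```

Arrays like `drug_input` and `target_input` are the kind of input that an embedding layer followed by a CNN or RNN can consume directly.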

DeepDTA, proposed by Ozturk et al. (2018), outperformed established ML approaches such as KronRLS (Nascimento et al. 2016) and SimBoost (Tong et al. 2017) by applying a CNN model to sequence information alone, namely the SMILES string and the amino acid sequence. The Davis kinase binding affinity dataset (Davis et al. 2011) and the KIBA dataset (Sun et al. 2020a) were used in that study. Wen et al. used ECFPs and protein sequence composition descriptors as examples of common and basic features and trained a model on them using semi-supervised learning via a deep belief network (Wen et al. 2017). Another study, DeepConv-DTI, built a deep CNN model using only an RDKit Morgan fingerprint and protein sequences (Lee et al. 2019). The authors also used the pooled convolution results to capture local residue patterns of target protein sequences, which yielded high values for critical protein regions such as actual binding sites.

The scoring function, which ranks protein–drug interactions using 3D structures, is parameterized on training data to predict binding affinity values or binding pocket sites of the target proteins, and it serves as the key component of structure-based regression models. AtomNet (Wallach et al. 2015) incorporated the 3D structural characteristics of protein–drug complexes into CNNs. It placed 3D grids of fixed size (i.e., voxels) over the protein–drug complexes, with every cell in the grid representing structural properties at that position. Since then, several researchers have investigated deep CNN models that use voxels to predict binding pocket location or binding affinity (Wang et al. 2020b; Ashburner et al. 2000; Zhao et al. 2019). Compared with common docking approaches such as AutoDock Vina (Trott and Olson 2010) or Smina (Koes et al. 2013), these models have shown improved performance. This is because CNN models are relatively robust even with large input sizes; they can be trained effectively and are resilient to noise in the input data.
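The voxel idea can be sketched as mapping atom coordinates into a fixed-size 3D grid with one channel per atom type. This is a generic occupancy-count illustration, not the featurization of any specific published model; the box size, resolution, coordinates, and channel assignment are assumptions.

```python
import numpy as np

def voxelize(coords, channels, n_channels, box_size=8.0, resolution=1.0):
    """Count atoms per grid cell.
    coords: (N, 3) positions in angstroms, centred on the binding pocket.
    channels: (N,) integer atom-type index for each atom."""
    n = int(round(box_size / resolution))
    grid = np.zeros((n_channels, n, n, n), dtype=np.float32)
    # Shift so the box spans [0, box_size) and convert to integer cell indices.
    idx = np.floor((coords + box_size / 2.0) / resolution).astype(int)
    inside = np.all((idx >= 0) & (idx < n), axis=1)  # drop atoms outside the box
    for (i, j, k), ch in zip(idx[inside], channels[inside]):
        grid[ch, i, j, k] += 1.0
    return grid

coords = np.array([[0.2, 0.1, -0.3],    # hypothetical ligand atom
                   [1.5, 0.0, 0.0],     # hypothetical protein atom
                   [10.0, 0.0, 0.0]])   # lies outside the 8 angstrom box
channels = np.array([0, 1, 0])          # 0 = ligand channel, 1 = protein channel (assumed)

grid = voxelize(coords, channels, n_channels=2)
```

The resulting `(channels, x, y, z)` tensor has a fixed shape regardless of how many atoms the complex contains, so it can be passed to a 3D CNN.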

Many DTI investigations using GCNs in structure-based approaches have been reported (Feng et al. 2018; Liu et al. 2016). Feng et al. (2018) used both ECFPs and GCNs as drug features. On the Davis (Davis et al. 2011), Metz (Metz et al. 2011), and KIBA (Tang et al. 2014) benchmark datasets, their methods outperformed prior models such as KronRLS (Nascimento et al. 2016) and SimBoost (Tong et al. 2017). However, they acknowledged that their GCN model could not outperform their ECFP model because of time and resource constraints in implementing the GCN. In a different DTI study, Torng et al. used unsupervised graph learning to learn fixed-size representations of protein binding sites (Torng and Altman 2019). The protein pocket GCN was then initialized from the pre-trained model, whereas the drug GCN was trained on automatically generated features. They concluded that, without relying on target–drug complexes, their model effectively captured protein–drug binding interactions.

Because the models that implement the attention mechanism have key qualities that enable the model to be interpreted, attention-based DTI prediction approaches have evolved (Hirohara et al. 2018; Liu et al. 2016; Perozzi et al. 2014).

For protein sequences, Gao et al. (2017) employed compressed vectors with LSTM RNNs, and for drug structures they used a GCN. They concentrated on demonstrating their method's capacity to deliver biological insights into DTI predictions. To do so, two-way attention mechanisms were employed to calculate the binding of drug–target pairs (DTPs), allowing flexible interpretation of higher-level information about target proteins, such as GO terms. Shin et al. (2019) introduced the Molecule Transformer DTI (MT-DTI) approach, which uses the self-attention mechanism for drug representations. Using parameters pre-trained on 97 million PubChem compounds, the MT-DTI model was fine-tuned and evaluated on two publicly available benchmark datasets, Davis (Davis et al. 2011) and KIBA (Tang et al. 2014). However, the attention mechanism was not used to represent the protein targets, because computing it over the target sequence would take too long, and pre-training was not possible because of a lack of target information.
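The self-attention mechanism referred to above can be sketched as scaled dot-product attention over a sequence of token embeddings. This is the generic single-head formulation, not the MT-DTI architecture itself; the sequence length, embedding size, and random projection matrices are assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention.
    X: (tokens, d_model) embeddings, e.g. one row per SMILES token."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # pairwise token-to-token scores
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 6, 4  # hypothetical sizes
X = rng.normal(size=(n_tokens, d_model))
Wq, Wk, Wv = (rng.normal(size=(d_model, d_model)) for _ in range(3))

out, attn = self_attention(X, Wq, Wk, Wv)
```

The `attn` matrix grows quadratically with sequence length, which gives a sense of why applying attention to long protein sequences is costly, and its rows are what attention-based DTI models inspect when interpreting a prediction.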

On the other hand, AttentionDTA, presented by Zhao et al., incorporates an attention mechanism into a CNN model to establish weighted connections between drug and protein sequences (Zhao et al. 2019). They showed that these attention-based drug and protein representations perform well on the affinity prediction task with an MLP model. DeepDTIs used external, experimental DTPs to infer the probability of interaction for any given DTP. Four of the top ten predicted DTIs had previously been identified, and one was found to have a poor glucocorticoid receptor binding affinity (Huang et al. 2018). DeepCPI was used to predict drug–target interactions, and small-molecule interactions with the glucagon-like peptide-1 receptor, the glucagon receptor, and the vasoactive intestinal peptide receptor were tested experimentally (Wan et al. 2019).

4.1.3 Drug–protein(disease)-based models

According to polypharmacology, most drugs act on multiple targets, both primary and secondary. These effects are influenced by the biological networks involved as well as by the drug's dose. As a result, the drug–protein(disease)-based models shown in Fig. 10c are particularly useful when evaluating protein promiscuity or drug selectivity (Cortes-Ciriano et al. 2015). Furthermore, multi-task neural networks are well suited to learning the properties of many types of data simultaneously (Camacho et al. 2018). Several DL applications, such as those using drug-induced gene-expression patterns and DTI-related heterogeneous networks, leverage relational information from distinct views. A network-based strategy employs heterogeneous networks that include various kinds of nodes and edges (Luo et al. 2017; David et al. 2019). The nodes in these networks exhibit local similarity, which is a significant aspect of these models. When a similarity network with drugs as nodes and drug–drug similarity values as edge weights is examined, DTIs can be predicted from its connections and topological features. Machine learning techniques that use heterogeneous networks as prediction frameworks include support vector machines (Bleakley and Yamanishi 2009; Keum and Nam 2017), the regularized least squares (RLS) model (Liu et al. 2016; Xia et al. 2010; Hao et al. 2016), and the random walk with restart model (Lian et al. 2021; Nascimento et al. 2016). Owing to the increased interest in DL technologies, network-based DTI prediction studies have employed DL to improve association prediction by evaluating topological similarities in bipartite (drug and target) and tripartite (drug, target, and disease) networks (Hassan-Harrirou et al. 2020; Lamb et al. 2006; Korkmaz 2020; Townshend et al. 2012; Vazquez et al. 2020). Zong et al. (2017) used the DeepWalk approach to collect local latent information, compute topology-based similarity in tripartite networks, and demonstrate the approach's promise for drug repurposing.
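
As an illustration of the random walk with restart idea on a drug similarity network, the following minimal sketch propagates probability from one seed drug over weighted edges. The similarity values, the restart probability of 0.3, and the function name are assumptions chosen for illustration and are not taken from any of the cited studies.

```python
def random_walk_with_restart(weights, seed, restart=0.3, tol=1e-10, max_iter=1000):
    """Return steady-state visiting probabilities for a walker that restarts at `seed`."""
    n = len(weights)
    # Column-normalise so that column j holds the transition probabilities out of node j.
    col_sums = [sum(weights[i][j] for i in range(n)) for j in range(n)]
    trans = [
        [weights[i][j] / col_sums[j] if col_sums[j] > 0 else 0.0 for j in range(n)]
        for i in range(n)
    ]
    start = [1.0 if i == seed else 0.0 for i in range(n)]
    prob = start[:]
    for _ in range(max_iter):
        nxt = [
            (1.0 - restart) * sum(trans[i][j] * prob[j] for j in range(n))
            + restart * start[i]
            for i in range(n)
        ]
        converged = sum(abs(a - b) for a, b in zip(nxt, prob)) < tol
        prob = nxt
        if converged:
            break
    return prob


# Hypothetical symmetric drug-drug similarity matrix (edge weights) for four drugs.
similarity = [
    [0.0, 0.9, 0.1, 0.0],
    [0.9, 0.0, 0.5, 0.0],
    [0.1, 0.5, 0.0, 0.8],
    [0.0, 0.0, 0.8, 0.0],
]
scores = random_walk_with_restart(similarity, seed=0)
# Drugs ranked by proximity to the seed drug in the similarity network.
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
```

Drugs that are strongly connected to the seed receive higher steady-state probability, which is the intuition behind using network topology to propose candidate associations.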

Some network-based DTI prediction studies used relationship-based features extracted by training an AE. Zhao et al. (2020) developed a DTI-CNN prediction model that used low-dimensional but rich deep features learned from a heterogeneous network with a stacked AE. To construct the topological similarity matrices of drugs and targets, Wang et al. (2020b) used a deep AE together with positive pointwise mutual information. In another study, Peng et al. (2020) employed a denoising autoencoder to select network-based features and reduce the dimensionality of the representation.

A denoising autoencoder learns to reconstruct clean data from noisy, incomplete, high-dimensional input, which allows the encoder to learn more robust representations. These approaches, however, have the drawback that it is difficult to make predictions for new drugs or targets, an issue known in recommendation systems as the "cold start" problem (Bedi et al. 2015). The size and shape of the network have a large impact on these models, so if the network is not large enough, they cannot cover drugs or targets that are absent from the network (Lamb et al. 2006).

Various studies have also used gene expression patterns as chemogenomic features to predict DTIs. These studies assume that drugs with similar expression patterns have similar effects on the same targets (Hizukuri et al. 2015; Sawada et al. 2018).

The LINCS-L1000 database, the revised version of CMAP, has been integrated into DL-based DTI models in recent works (Subramanian et al. 2017; Thafar et al. 2020; Karpov et al. 2020; Arus-Pous et al. 2020). Using a deep neural network, Xie et al. (2018) developed a binary classification model based on the LINCS pharmacological perturbation and gene knockout data.

On the other hand, Lee and Kim (2019) used expression signature genes as the source of drug and target features. They used node2vec to learn rich representations by examining three aspects of protein function, including pathway-level memberships and PPI. In DTIGCCN, Shao and Zhang employed a GCN model to extract drug and target features from LINCS data and a CNN model to extract latent features and predict DTIs (Shao et al. 2020). The Gaussian kernel function was found to help produce high-quality graphs, and as a result, this hybrid model performed better on classification tests.

DeepDTnet employs a heterogeneous drug–gene–disease network that embeds fifteen types of chemical, genomic, phenotypic, and cellular network profiles to identify drug targets. DeepDTnet predicted, and experiments confirmed, that topotecan is a new direct inhibitor of the human retinoic acid receptor-related orphan receptor (Zeng et al. 2020).

4.2 Drug sensitivity and response prediction using DL

Drug response is the clinical outcome in a patient treated with the drug of interest (https://www.sciencedirect.com/topics/drug-response). The main idea of drug response prediction is shown in Fig. 11. The DL method takes the heterogeneous network of drug and protein interactions as input and predicts the response scores. Despite the widespread use of deep neural network (DNN) approaches in various domains and sectors, including related fields such as computational chemistry (Gómez-Bombarelli et al. 2018), DNNs have only lately made their way into drug response prediction. This is due to the typically low ratio of samples to measurements per sample, which makes traditional feedforward neural networks unsuitable. Overparameterization, overfitting, and poor generalization are common outcomes when models are trained on such datasets. However, more public data has become available recently, and newly built DNN models have shown promise. As a result, this section summarizes current computational challenges and breakthroughs of DL in drug response prediction.

Neural networks have been used to predict drug response since the 1990s. El-Deredy et al. (1997) showed that data from tumor nuclear magnetic resonance (NMR) spectra can be used to train a neural network to predict drug response in gliomas and to provide information on the metabolic pathways involved in drug response.

In 2018, Chang et al. (2018) created the CDRscan model, which uses a CNN architecture trained on 1000 drug response assays per molecule. Their model performed much better than traditional ML algorithms such as RF and SVM. CDRscan's ability to incorporate genomic data and molecular fingerprints is one of the reasons it outperformed these baseline models. Furthermore, its convolutional design has proven useful in various machine learning areas. An autoencoder is a neural network that compresses its input and then attempts to reconstruct the original data from the compressed form. As shown by Way and Greene (2018), this is very useful for feature extraction: they condensed a gene expression profile with 5000 dimensions to at most 100 dimensions, some of which captured significant characteristics such as the patient's sex or melanoma status. Using variational autoencoders, Dincer et al. (2018) created DeepProfile, a technique that learns an eight-dimensional representation of gene expression in AML patients, which is then fitted to a Lasso linear model for treatment response prediction, with better results than without feature extraction.

Ding et al. (2018) proposed a deep autoencoder model for representation learning of cancer cells from input data consisting of gene expression, CNV, and somatic mutations.

In 2019, MOLI (Multi-omics Late Integration) (Sharifi-Noghabi et al. 2019) was introduced as a deep learning model that incorporates multi-omics data and somatic mutations to characterize a cell line. Three separate subnetworks of MOLI learn representations for each type of omics data. A final network classifies a cell as a responder or non-responder based on the concatenated representations. These methods share two characteristics: they integrate multiple types of input data (multi-omics) and perform binary classification of the drug response. Although combining several forms of omics data can improve the learning of cell line status, it may limit the method's applicability to other cell lines or patients because the model requires additional data beyond gene expression.

Furthermore, a threshold on the IC50 values must be set before binary classification of the drug response, and this threshold may vary with the experimental conditions, such as drug or tumor type. Twin CNN for drugs in SMILES format (TCNNS) (Liu et al. 2019b) takes a one-hot encoded representation of drugs and feature vectors of cell lines as the inputs for two encoding subnetworks of a one-dimensional (1D) CNN. The one-hot encodings of drugs in TCNNS are built from Simplified Molecular Input Line Entry System (SMILES) strings, which describe a drug compound's chemical structure. Binary feature vectors of cell lines represent 735 mutation states or CNVs of a cell. KekuleScope (Cortés-Ciriano and Bender 2019) adopts transfer learning, using a CNN pre-trained on ImageNet data. The pre-trained CNN is then trained on images of drug compounds represented as Kekulé structures to predict the drug response.
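
To make the one-hot representation of SMILES strings concrete, the sketch below encodes each string as a symbol-by-position binary matrix suitable as input to a 1D CNN. It is a character-level simplification (multi-character atoms such as Cl would be split), and the alphabet construction, padding length, and function names are assumptions for illustration rather than the exact TCNNS preprocessing.

```python
def build_alphabet(smiles_list):
    """Collect the distinct characters that occur in a set of SMILES strings."""
    return sorted(set("".join(smiles_list)))


def one_hot_smiles(smiles, alphabet, max_len):
    """Encode a SMILES string as a len(alphabet) x max_len binary matrix.

    Row = symbol, column = position. Positions beyond the end of the string
    stay all-zero (padding); strings longer than max_len are truncated.
    """
    row_of = {symbol: row for row, symbol in enumerate(alphabet)}
    matrix = [[0] * max_len for _ in alphabet]
    for position, symbol in enumerate(smiles[:max_len]):
        matrix[row_of[symbol]][position] = 1
    return matrix


# Two well-known molecules used purely as examples.
drugs = {"ethanol": "CCO", "aspirin": "CC(=O)OC1=CC=CC=C1C(=O)O"}
alphabet = build_alphabet(drugs.values())
max_len = max(len(s) for s in drugs.values())
encoded = {name: one_hot_smiles(s, alphabet, max_len) for name, s in drugs.items()}
```

Every column that corresponds to a real character contains exactly one 1, so the matrix preserves the order of symbols along the string, which is what a 1D convolution exploits.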

Yuan et al. (2019) proposed GNNDR, a GNN-based technique with a high learning capacity that predicts drug response by combining protein–protein interaction (PPI) information with genomic characteristics. The value of including protein information was demonstrated empirically. The proposed method offers a viable avenue for the discovery of anti-cancer drugs. Rampášek et al. (2019) examined semi-supervised variational autoencoders for the prediction of monotherapy response. In contrast to many conventional ML approaches, they developed a model for predicting drug response that took advantage of gene expression measured before and after treatment in cell lines, and it showed improved performance on a variety of FDA-approved drugs. Chiu et al. (2019) trained a deep drug response predictor after pre-training autoencoders on mutation data and expression features from the TCGA dataset. The use of pretraining distinguishes their strategy from others. Rather than relying only on labeled gene expression profiles obtained from drug response assays, pretraining allows the use of unlabeled data from outside sources such as TCGA, which significantly increases the number of available samples and improves performance.

Chiu et al. (2019) and Li et al. (2019) used a combination of autoencoders and deep neural networks to predict drug responses in genomically characterized cell lines and tumors. To predict cell line responses to drug combinations, the work at https://string-db.org/cgi/download.pl?sessionId=uKr0odAK9hPs used deep neural encoders to link genetic characteristics with drug profiles.

In 2020, Wei et al. (2020) predicted drug risk levels based on adverse drug reactions (ADRs), using SMOTE and machine learning techniques. The proposed framework was used to investigate the mechanism of ADRs, to estimate drug risk levels, and to support decision-making on the switch from prescription (Rx) to over-the-counter (OTC) drugs. They demonstrated that the best combination built with this framework, PRR-SMOTE-RF, achieved strong classification performance as measured by the macro-ROC curve. They suggested that drug regulatory organizations, including the FDA and CFDA, could use this framework as a simple but dependable method for ADR signal detection and drug classification, as well as an auxiliary basis for experts deciding whether to change the status of Rx drugs to OTC drugs. They proposed that more ML or DL classification algorithms be tested in the future and that computational complexity be factored into the comparison. Kuenzi et al. (2020) built DrugCell, an interpretable DL model of human cancer cells trained on the responses of 1235 tumor cell lines to 684 drugs. Tumor genotypes induce states in cellular subsystems, which are combined with drug structure to predict therapeutic response while also learning the biological mechanisms underlying the response. DrugCell predictions in cell lines are accurate and help stratify clinical outcomes. Analysis of DrugCell mechanisms led to the design of synergistic drug combinations, which the authors validated using combinatorial CRISPR, in vitro drug–drug screening, and patient-derived xenografts. DrugCell provides a blueprint for building interpretable models for predictive medicine.
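
Assuming that PRR in PRR-SMOTE-RF denotes the proportional reporting ratio, a standard disproportionality measure for ADR signal detection, the signal for one drug and one reaction can be sketched from a 2x2 table of spontaneous reports. The counts below are invented for illustration and do not come from Wei et al. (2020).

```python
def proportional_reporting_ratio(a, b, c, d):
    """Proportional reporting ratio from a 2x2 table of spontaneous reports.

    a: reports with the drug of interest AND the reaction of interest
    b: reports with the drug of interest and any other reaction
    c: reports with all other drugs AND the reaction of interest
    d: reports with all other drugs and any other reaction
    """
    rate_with_drug = a / (a + b)
    rate_with_other_drugs = c / (c + d)
    return rate_with_drug / rate_with_other_drugs


# Hypothetical counts: the reaction appears in 20% of reports for the drug,
# but in only 1% of reports for all other drugs.
prr = proportional_reporting_ratio(a=20, b=80, c=100, d=9900)
```

A ratio well above 1 indicates that the reaction is reported disproportionately often with the drug; such values could then serve as features for a downstream classifier such as RF.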

Graph Neural Networks (GNNs) are Artificial Neural Networks (ANNs) that operate on graphs as inputs. Deep GNNs were recently employed to learn low-dimensional representations of biomolecular networks (Hamilton 2020; Wu et al. 2020). Ahmed et al. (2020) developed GNN models using two separate GNN methods with gene expression (GE) data and a gene co-expression network, that is, a network that depicts the relationships between the expression of gene pairs.

The CNN is one of the neural network models adopted for drug response prediction. The CNN has been widely used for image, video, text, and sound data because of its strong ability to preserve the local structure of data and learn hierarchies of features. In 2021, several methods were developed for drug response prediction, each of which uses different input data (Baptista et al. 2021).

Nguyen et al. (2021) proposed a drug response prediction method called GraphDRP, which integrates two subnetworks for drug and cell line features, similar to the CNN in Liu et al. (2019b). Qiu et al. (2021) used gene expression data from cancer cell lines together with drug response data to find predictor genes for drugs of interest and to provide a reliable and accurate drug response prediction model. After pre-selecting genes using the Pearson correlation coefficient, they employed the ElasticNet regression model to predict drug response and refine gene selection. To obtain a more trustworthy set of predictor genes, they ran the regression for each drug twice, once using the IC50 and once using the area under the curve (AUC, or activity area). The Pearson correlation coefficient was greater than 0.6 for each of the 12 drugs they examined. With IC50, the highest Pearson correlation coefficient, 0.811, was obtained for 17-AAG.

In contrast, with AUC, the highest Pearson correlation coefficient was 0.81. Even though the model developed in this study has excellent predictive performance on GDSC, it still has certain limitations. First, the properties of cancer cell lines may differ significantly from those of in vivo tumors, and it remains to be determined whether the model will be useful in a clinical setting. Second, the model primarily uses gene expression data to predict drug response, although drug response is influenced not only by gene expression levels but also by structural changes such as gene mutations. More research is needed to integrate such data into the model and improve its prediction capacity.
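
The correlation-based gene pre-selection step described above can be sketched as follows. The gene names, expression values, response values, and the 0.6 cut-off are hypothetical, and the subsequent ElasticNet fit is omitted; this only shows how genes could be filtered by the absolute Pearson correlation between their expression and the drug response.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0  # a constant profile carries no correlation information
    return cov / (sd_x * sd_y)


def preselect_genes(expression, response, threshold):
    """Keep genes whose |r| with the response is at least `threshold`."""
    selected = {}
    for gene, profile in expression.items():
        r = pearson(profile, response)
        if abs(r) >= threshold:
            selected[gene] = r
    return selected


# Hypothetical expression profiles across five cell lines and their drug response.
response = [1.0, 2.0, 3.0, 4.0, 5.0]
expression = {
    "GENE_A": [2.0, 4.0, 6.0, 8.0, 10.0],  # perfectly correlated
    "GENE_B": [5.0, 4.0, 3.0, 2.0, 1.0],   # perfectly anti-correlated
    "GENE_C": [1.0, 3.0, 2.0, 3.0, 1.0],   # uncorrelated
}
candidates = preselect_genes(expression, response, threshold=0.6)
```

Both positively and negatively correlated genes are kept because either direction can be informative for a regression model fitted afterwards.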

In 2022, Ren et al. (2022) proposed a deep learning-based graph regularized matrix factorization (DeepGRMF), which integrates neural networks, graph models, and matrix-factorization approaches and uses a variety of information, including drug chemical structure, drug effects on cellular signaling mechanisms, and cancer cell states, to predict cell response to drugs. DeepGRMF learns drug embeddings so that drugs with similar structures and mechanisms of action (MOAs) are close together in the embedding space. DeepGRMF similarly learns representation embeddings for cells so that cells with similar biological states and drug responses are close together. On the Cancer Cell Line Encyclopedia (CCLE) and Genomics of Drug Sensitivity in Cancer (GDSC) datasets, DeepGRMF outperforms competing models in prediction performance. On The Cancer Genome Atlas (TCGA) dataset, the proposed model could predict the effectiveness of a treatment regimen on lung cancer patients' outcomes. The limited expressiveness of the VAE-based chemical structure representation may explain why prediction for new cell lines is more accurate than prediction for new drugs. A family of graph neural networks has recently been shown to represent chemical structures better, which can be investigated in the future. Pouryahya et al. (2022) proposed a new network-based clustering approach for predicting drug response based on optimal mass transport (OMT) theory. Gene-expression profiles and cheminformatic drug features were represented as data networks and used to cluster cell lines and drugs. Then, an RF model was trained for each pair of cell-line and drug clusters. By comparison, cluster-based prediction models trained on homogeneous data are expected to improve the accuracy of drug sensitivity prediction and biological interpretability.

4.3 Drug–drug interactions (DDIs) side effect prediction using DL

Drugs are chemical compounds that people consume and that interact with protein targets to produce a change. Drugs may affect the human body positively or negatively. Drug side effects are the undesirable changes that drugs cause in the human body. These adverse effects can range from moderate headaches to life-threatening reactions such as cardiac arrest, malignancy, and death. They differ depending on the person's age, gender, stage of illness, and other factors (Kuijper et al. 2019). In the laboratory, several tests are conducted on drugs to determine whether they have any adverse side effects. However, these tests are both expensive and time-consuming. Recently, many computational algorithms for detecting adverse drug effects have been created, and computational methodologies are replacing laboratory experiments.

On the other hand, these methods do not provide adequate data to predict drug–drug interactions (DDIs). The phenomenon of DDIs is illustrated in Fig. 12. A drug's overall effect on the human body consists of the desired effects resulting from its interaction with the intended target and the undesirable effects arising from its interactions with off-targets. Even though a drug has a strong binding affinity for one target, it also binds to several other proteins with varying affinities, which might cause adverse effects (Liu et al. 2021). Predicting DDIs can help reduce the likelihood of adverse reactions and optimize the drug development and post-market monitoring processes (Arshed et al. 2022). Side effects of DDIs are often regarded as the leading cause of drug failure in pharmacological development. When drugs have major side effects, they are quickly withdrawn from the market. As a result, predicting side effects is a fundamental requirement in the drug discovery process to keep drug development costs and timelines in check and to launch drugs that benefit patient health.

Furthermore, the average cost of drug research and development is $2.6 billion (Liu et al. 2019). As a result, determining the likelihood of adverse effects is important for lowering the cost and risk of drug development. Researchers use various computational tools to speed up the process. In pharmacology and clinical application, DDI prediction is a difficult problem, and correctly detecting possible DDIs in clinical studies is crucial for patients and the public. Researchers have recently achieved a series of successes using deep learning to predict DDIs from drug structural properties and graph theory (Han et al. 2022). AI has successfully detected potential drug interactions, allowing doctors to make informed decisions before prescribing drug combinations to patients with complex or multiple conditions (Fokoue et al. 2016).

Therefore, this section comprehensively reviews the DL algorithms most widely used by researchers to predict DDIs.

In 2016, Fokoue et al. (2017) proposed Tiresias, a framework for discovering DDIs. The Tiresias framework takes a large amount of drug-related data as input and generates DDI predictions. DDI detection begins with the semantic integration of the input data, resulting in a knowledge graph that represents drug properties and interactions together with additional entities such as enzymes, chemical structures, and pathways. Numerous similarity measures between all drugs were computed from the knowledge graph in a scalable and distributed setting. A large-scale logistic regression model then uses the computed similarity measures to predict DDIs. According to the findings, the Tiresias framework helped identify new interactions both among existing drugs and between newly developed and existing drugs. A drawback of the Tiresias model is its need for large-scale drug data, which makes the model costly.

In 2017, Reza et al. (2017) developed a computational technique for predicting DDIs based on functional similarities among drugs. The proposed model was built on several major biological elements: carriers, enzymes, transporters, and targets (CETT). The approach was applied to 2189 approved drugs, for which the associated CETTs were obtained and binary vectors were created to find DDIs. A total of 2,394,767 potential drug–drug interactions were assessed, and over 250,000 previously unidentified potential DDIs were discovered. Among the several similarity measures used, inner product-based similarity measures (IPSMs) gave good predictive values for detecting DDIs. A key limitation of this strategy was the lack of pharmacological data, which resulted in the erroneous detection of all potential pairs of DDIs.
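
A minimal sketch of the binary CETT representation and an inner product similarity is given below. The drug names and their CETT assignments are hypothetical placeholders, and the plain inner product is only one possible inner product-based measure; the exact IPSM variants used in the cited study are not reproduced here.

```python
from itertools import combinations


def cett_vector(features, vocabulary):
    """Binary vector marking which carriers, enzymes, transporters, and targets a drug has."""
    return [1 if item in features else 0 for item in vocabulary]


def inner_product(u, v):
    """Number of CETT elements shared by two binary vectors."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical drugs with hypothetical CETT annotations.
drug_cetts = {
    "drug_A": {"CYP3A4", "ABCB1", "ALB"},
    "drug_B": {"CYP3A4", "ABCB1"},
    "drug_C": {"CYP2D6"},
}
vocabulary = sorted(set().union(*drug_cetts.values()))
vectors = {name: cett_vector(items, vocabulary) for name, items in drug_cetts.items()}

# Score every unordered drug pair; a higher score suggests greater functional overlap.
pair_scores = {
    (a, b): inner_product(vectors[a], vectors[b])
    for a, b in combinations(sorted(vectors), 2)
}
```

Pairs that share many carriers, enzymes, transporters, or targets receive higher scores and would be prioritized as candidate interactions.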

In 2018, Ryu et al. (2018) proposed a model that predicts more DDI types using drugs' chemical structures as inputs and applied multi-task learning to DDI type prediction. In the same vein, Decagon (Zitnik et al. 2018) models polypharmacy side effects using a relational GNN. To learn representations of intricate nonlinear drug interactions, Chu et al. (2018) used an autoencoder for factorization. To predict DDIs, Liu et al. (2019c) presented DDI-MDAE, a multimodal deep autoencoder based on shared latent representations. Recently, interest in employing graph neural networks (GNNs) to predict DDIs has increased. GNNs aggregate the feature vectors of a node's neighbors, and distinct aggregation algorithms lead to different GNN variants. Asada et al. (2018) used a graph convolutional network (GCN) to encode molecular structures in order to extract DDIs from text. Furthermore, Ma et al. (2018) incorporated attentive multi-view graph autoencoders into a unified model.

Chen (2018) devised a model for predicting adverse drug reactions (ADRs). SVM, LR, RF, and GBT were all used in the predictive model. The model employed the DEMO dataset, which contains attributes such as the patient's age, weight, and sex, and the DRUG dataset, which includes features such as the drug's name, role, and dosage. Males make up 46% of the sample, while females make up 54%. The developed model had fair prediction accuracy for a representative sample set. Furthermore, the results revealed that the proposed model is accurate only when a large amount of data is available.

To predict possible DDIs, Kastrin et al. (2018) employed statistical learning approaches. The DDIs were represented as a complex network, with nodes representing drugs and links representing their potential interactions. Link prediction on DDI networks was formulated as a binary classification task. A large DDI database was randomly selected for prediction. Several unsupervised and supervised ML approaches, such as SVM, classification tree, boosting, and RF, were applied for edge prediction in various DDI networks. Compared to unsupervised techniques, the supervised link prediction strategy produced encouraging results. To detect links between drugs, the proposed method requires Unified Medical Language System (UMLS) filtering, which posed a difficulty for the researchers. Furthermore, the proposed system considers only fixed network snapshots, which is problematic because DDI networks change over time.
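
To illustrate link prediction on a DDI network, the sketch below scores every pair of drugs that is not yet connected using the Jaccard coefficient of their neighbor sets, a common unsupervised topological measure. The toy network and the choice of Jaccard are assumptions for illustration and are not claimed to be the measures used in the cited study.

```python
from itertools import combinations


def jaccard_link_scores(neighbors):
    """Score each non-adjacent node pair by the overlap of their neighbor sets."""
    scores = {}
    for u, v in combinations(sorted(neighbors), 2):
        if v in neighbors[u]:
            continue  # already a known interaction, nothing to predict
        union = neighbors[u] | neighbors[v]
        shared = neighbors[u] & neighbors[v]
        scores[(u, v)] = len(shared) / len(union) if union else 0.0
    return scores


# Hypothetical DDI network: each drug maps to the set of drugs it is known to interact with.
ddi_network = {
    "A": {"B", "C"},
    "B": {"A", "C", "D"},
    "C": {"A", "B", "D"},
    "D": {"B", "C", "E"},
    "E": {"D"},
}
link_scores = jaccard_link_scores(ddi_network)
best_candidate = max(link_scores, key=link_scores.get)
```

In a supervised setting, scores like these could instead be used as input features for a classifier that labels each candidate pair as interacting or not.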

In 2019, Lee et al. (2019) proposed a deep learning system for accurately predicting the effects of DDIs. To learn more about the pharmacological effects of a variety of DDIs, the proposed method employed an assortment of autoencoders and a deep feed-forward neural network, which were trained using a combination of well-known techniques. The results revealed that adding GSP and TSP improves prediction accuracy over using SSP alone, and that the autoencoder is more powerful than PCA at reducing profile features. In addition, the model outperformed existing approaches and identified numerous novel DDIs relevant to the study. Yue et al. (2020) combined numerous graph embedding methods for the DDI task, while Karim et al. (2019) modeled DDI prediction as link prediction with the help of a knowledge graph. There is also a co-attention-based approach (Andreea and Huang 2019), which presented a deep learning model based solely on side-effect data and molecular drug structure. CASTER (Huang et al. 2020), also based on drug chemical structures, develops a dictionary learning framework to predict DDIs. Chu et al. (2019) proposed using semi-supervised learning to extract meaningful information for DDI prediction from both labeled and unlabeled drug data. Shtar et al. (2019) used a mix of computational techniques to predict drug interactions, including artificial neural networks and graph node factor propagation methods such as adjacency matrix factorization (AMF) and adjacency matrix factorization with propagation (AMFP). The DrugBank database, containing 1142 drugs and 45,297 drug–drug interactions, was used to train the model. The trained model was tested on the most recent version of DrugBank, with 1442 drugs and 248,146 drug–drug interactions. AMF and AMFP were also used to develop an ensemble-based classifier, and the results were assessed using the receiver operating characteristic (ROC) curve. The findings revealed that the proposed ensemble-based classifier provides important information for drug development and drug prescription, even from noisy data. In addition, the drug embeddings created while training models on the interaction network have been made available. To predict adverse drug events caused by DDIs, Hou et al. (2019) proposed a deep neural network model. The proposed model is based on a database of 5000 drug codes obtained from DrugBank. Using the computed features, it identifies 80 different types of DDIs. The model, which takes 4432 drug features as input, was built with TensorFlow-GPU.

The trained model can predict responses to drugs for inflammatory bowel disease (IBD) with an accuracy of 88 percent. The findings also revealed that the model performs best when many datasets are used. Wang et al. (2019) proposed a DNN model for detecting adverse drug effects. The model predicts ADRs using chemical, biological, and biomedical knowledge of drugs. Drug data from the SIDER database was also incorporated into the model. The system's performance was improved by using a word-embedding approach to obtain distributed representations of the target drugs in a vector space, which capture the associations between drugs. The proposed system's fundamental limitation was that it worked well only with the standard SIDER database.

In 2020, numerous AI-based methods were developed for DDI event prediction, including methods that evaluate chemical structural similarity using graph neural networks (Huang et al. 2020). Attempts have also been made to predict DDIs from different data sources, such as leveraging similarity features to build drug features for the DDI event prediction task (Deng et al. 2020).

Bai et al. (2020) proposed a deep learning technique that performs the DDI extraction task with the help of word embeddings, part-of-speech tags, and distance embeddings, and that supports the drug development cycle and drug repurposing. According to experimental data, the technique can better avoid instance misclassifications with minimal pre-processing. Moreover, the model employs an attention mechanism to emphasize the significance of each hidden state in the Bi-LSTM layers.
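
The idea of weighting each hidden state by its significance can be sketched as attention pooling: each hidden state receives a score, the scores are normalized with a softmax, and the states are averaged with those weights. Dot-product scoring against a query vector, the toy hidden states, and all names below are assumptions for illustration; the scoring function in the cited model may differ.

```python
import math


def attention_pool(hidden_states, query):
    """Return softmax attention weights and the weighted sum of hidden states."""
    # Relevance score of each hidden state (dot product with the query vector).
    scores = [sum(h_k * q_k for h_k, q_k in zip(h, query)) for h in hidden_states]
    # Numerically stable softmax over the sequence positions.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: hidden states averaged according to their attention weights.
    dim = len(hidden_states[0])
    context = [sum(w * h[k] for w, h in zip(weights, hidden_states)) for k in range(dim)]
    return weights, context


# Hypothetical Bi-LSTM outputs for a three-token sentence (two dimensions each).
hidden_states = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
query = [1.0, 0.0]  # in a trained model this vector would be learned
weights, context = attention_pool(hidden_states, query)
```

Tokens whose hidden states align with the query dominate the context vector, which is then passed to the classifier that decides the DDI type.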

Feng et al. (2020) proposed DPDDI, an effective and robust approach that combines a feature extractor based on a graph convolutional network (GCN) with a predictor based on a DNN. DPDDI predicts potential DDIs using data from the DDI network without considering drug characteristics (i.e., drug chemical and biological properties). The proposed DPDDI is a useful tool for predicting DDIs. It should also be beneficial in other DDI-related scenarios, such as detecting unanticipated side effects and guiding drug combinations. The disadvantage of this approach is that it ignores drug characteristics.
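
A single graph convolution step over a DDI network can be sketched as below. The sketch assumes the widely used symmetric normalization with self-loops followed by a ReLU, uses one-hot node identities as input features because no drug attributes are considered, and fixes a small hand-written weight matrix; none of these details are taken from the DPDDI implementation.

```python
import math


def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gcn_layer(adjacency, features, weight):
    """One GCN propagation step: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    n = len(adjacency)
    # Add self-loops so each drug keeps its own features during aggregation.
    a_hat = [[adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    degree = [sum(row) for row in a_hat]
    normalized = [
        [a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
        for i in range(n)
    ]
    aggregated = matmul(normalized, features)   # mix neighbor features
    projected = matmul(aggregated, weight)      # linear projection to embedding size
    return [[max(0.0, value) for value in row] for row in projected]


# Hypothetical DDI network of three drugs connected as a path: 0 - 1 - 2.
adjacency = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # one-hot node identities
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # illustrative, would be learned in practice
embeddings = gcn_layer(adjacency, features, weight)
```

The resulting low-dimensional drug embeddings could then be concatenated pairwise and passed to a DNN that predicts whether the two drugs interact.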

Zaikis and Vlahavas (2020) proposed a multi-level GNN framework for predicting links between biological entities by building a bi-level network. The higher level represents the network of interactions between biological entities, whereas the lower level represents individual biological entities such as drugs and proteins. However, the proposed model's accuracy needs to be improved.

In 2021, to address DDI prediction, Lin et al. (2021) proposed an end-to-end system called Knowledge Graph Neural Network (KGNN). KGNN extends spatial GNN algorithms to the knowledge graph by selectively aggregating neighborhood information, allowing it to learn the knowledge graph's topological structure, semantic relations, and the neighborhoods of drugs and drug-related entities. When multiple drugs are used correctly, medical risks are reduced and the benefits of drug synergy are maximized. For multi-typed DDI pharmacological effect prediction, Yue et al. (2021) used knowledge graph summarization. Lyu et al. (2021) also introduced a Multimodal Deep Neural Network (MDNN) framework for DDI event prediction. By applying a graph neural network to the drug knowledge graph, MDNN effectively exploits topological information and semantic relations. MDNN additionally learns a joint representation of structural information and heterogeneous features, which effectively explores the cross-modal complementarity of the multimodal data. Karim et al. (2019) built a knowledge graph and used CNN and LSTM models to extract local and global drug features across the network. Liu et al. (2021) proposed DANN-DDI, a deep attention neural network framework that carefully incorporates multiple drug features to predict unknown DDIs. Chun and Yi-Ping Phoebe (2021) developed a hybrid deep learning (DL) model to provide a descriptive prediction of adverse drug reactions. It was one of the first interpretable hybrid DL models with Inception modules. The model combines a graph CNN (GCNN) with Inception modules, which improves the efficiency of learning drug molecular features, and bidirectional long short-term memory (BiLSTM) recurrent neural networks, which link drug structure to adverse effects. After concatenating the outputs of the two networks (GCNN and BiLSTM), a fully connected network is used to predict adverse drug reactions. Regardless of the classification threshold, the model obtains an AUC of 0.846. It has a precision score of 0.925. Even though a small drug dataset was used for adverse drug reaction (ADR) prediction, the Bilingual Evaluation Understudy (BLEU) scores were 0.973, 0.938, 0.927, and 0.318, indicating considerable achievements. Furthermore, the model can correctly form words to describe adverse drug reactions and link them to the drug's name and molecular structure. The predicted relationship between drug structure and ADRs can guide safety pharmacology research at the preclinical stage and make ADR detection easier early in the drug development process. It can also aid in the detection of unknown ADRs in existing drugs. Mohsen and Hossein () proposed a deep neural network model for DDI extraction from medical literature. This model employs a novel attention mechanism to better separate essential words from other terms based on word similarity and position relative to the candidate drugs. Before recognizing the type of DDI, this method computes attention weights over the outputs of a bi-directional long short-term memory (Bi-LSTM) model in the deep network architecture. The proposed approach was tested on the standard DDI Extraction 2013 dataset. According to the experimental findings, it achieved an F1-score of 78.30, which is comparable to the best reported results of existing approaches.
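
The evaluation measures quoted above differ in whether they depend on a classification threshold. The sketch below computes precision, recall, and F1 at a fixed threshold, and a rank-based AUC that needs no threshold. The labels and scores are invented for illustration and are unrelated to the reported results.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Hypothetical ground truth and model scores for six drug pairs.
y_true = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold-dependent decisions

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
auc = roc_auc(y_true, scores)  # threshold-free ranking quality
```

Changing the 0.5 cut-off alters precision, recall, and F1 but leaves the AUC unchanged, which is why AUC is described as independent of the classification threshold.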

In 2022, Pietro et al. (2022) introduced DruGNN, a GNN-based technique for predicting DDI side effects. The prediction is a multi-class, multi-label node classification problem in which each DDI corresponds to a class. To predict the side effects of novel drugs, they use a mixed inductive-transductive learning scheme that takes advantage of drug and gene features (inductive path) and knowledge of known drug side effects (transductive path). The entire procedure is adaptable because the machine learning framework can still be used if the graph dataset is enlarged to include more node features and relationships. Zhang et al. (2022) proposed CNN-DDI, a new semi-supervised algorithm for predicting DDIs that uses a CNN architecture. They first extracted interaction features from drug categories, targets, pathways, and enzymes as feature vectors. They then proposed a novel convolutional neural network as a predictor of DDI-related events based on this feature representation. The predictor consists of five convolutional layers, two fully connected layers, and a softmax layer. The results reveal that CNN-DDI is superior to other state-of-the-art techniques, but it takes longer to run. Jing et al. (2022) presented DTSyn, a novel dual-transformer-based approach that can identify probable cancer drug combinations. It uses a multi-head attention mechanism to extract chemical substructure-gene, chemical-chemical, and chemical-cell-line associations. DTSyn is the first model that incorporates two transformer blocks to extract associations among genes, drugs, and cell lines, allowing a better understanding of drug mechanisms of action. Despite DTSyn's excellent performance, its balanced accuracy on independent datasets is still limited. Collecting more training data is expected to mitigate the problem. Another issue is that the fine-granularity transformer was trained on only 978 signature genes, which could result in some chemical-target interactions being lost.

Furthermore, DTSyn used expression data as the only cell line features. To represent the cell line more fully, additional omics data, such as methylation and genetic data, may be added in the future. He et al. (2022) proposed MFFGNN, a new end-to-end learning framework for DDI prediction that effectively combines information from drug molecular graphs, SMILES sequences, and DDI graphs. The MFFGNN model uses a molecular graph feature extraction module to extract global and local features from molecular graphs.

They ran thorough tests on a variety of real-world datasets. According to the findings, the MFFGNN model consistently outperforms other state-of-the-art models. Furthermore, the multi-type feature fusion module uses a gating mechanism to limit the amount of neighborhood information passed to each node.

4.4 Drug–drug similarity prediction using DL

Drug similarity studies presume that drugs with comparable pharmacological properties have similar mechanisms of action and side effects and are used to treat similar conditions (Brown 2017; Zeng et al. 2019).

Pharmacological drug similarity is critical for various purposes, including identifying drug targets, predicting side effects, predicting drug–drug interactions, and repositioning drugs. Chemical structure features (Lu et al. 2017; O’Boyle 2016), protein targets (Vilar 2016; Wang et al. 2014), side-effect profiles (Campillos et al. 2008; Tatonetti et al. 2012), and gene expression profiles (Iorio et al. 2010) provide a multi-perspective view for predicting similar drugs; they can compensate for data gaps in individual data sources and offer fresh perspectives on drug repositioning and other uses. The main idea of drug–drug similarity is presented in Fig. 13. The vectors represent the drug features, and the links reflect the similarity between two drugs.

4.4.1 Drug similarity measures

The similarity estimates are calculated from chemical structure, target protein sequence, target protein function, and drug-induced pathway similarities.

4.4.1.1 The similarity in chemical structure

DrugBank (2019) provides the chemical structures of small-molecule drugs in SDF molecular format. Invalid SDFs, such as those with an NA value or fewer than three columns in the atom or bond blocks, can be recognized and eliminated. For valid compounds, atom pair descriptors can be computed. The pairwise similarity of compounds, δc (di, dj), was evaluated on atom pairs using the Tanimoto coefficient, defined as the number of atom pairs shared by the two compounds divided by the number of atom pairs in their union (Eq. 1):

\(\delta_{c} \left( {d_{i} ,d_{j} } \right) = \frac{{\left| {AP_{i} \cap AP_{j} } \right|}}{{\left| {AP_{i} \cup AP_{j} } \right|}}\)

where APi and APj are the sets of atom pairs of drugs di and dj, respectively; the numerator is the number of atom pairs common to both compounds, while the denominator is the total number of distinct atom pairs found in either compound.
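The Tanimoto calculation described above can be sketched in a few lines. This is only a minimal illustration: it treats each drug's atom pairs as a plain set of labels, and the descriptor strings, the `tanimoto` function name, and the choice to return 0.0 for two empty sets are assumptions made here, not details taken from the reviewed work.

```python
def tanimoto(ap_i, ap_j):
    """Tanimoto coefficient between two collections of atom-pair descriptors."""
    set_i, set_j = set(ap_i), set(ap_j)
    union = set_i | set_j
    if not union:
        # Assumption: two drugs with no descriptors at all score 0.0.
        return 0.0
    return len(set_i & set_j) / len(union)


# Hypothetical atom-pair descriptors, encoded as opaque strings.
drug_a = {"C-N:2", "C-O:3", "C-C:1", "N-O:4"}
drug_b = {"C-N:2", "C-C:1", "C-S:2"}

# Two shared atom pairs out of five distinct ones.
similarity = tanimoto(drug_a, drug_b)
```

In practice the atom pairs would come from a cheminformatics toolkit rather than hand-written labels; the set arithmetic stays the same.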

4.4.1.2 Target protein sequence-based similarity

DrugBank provides the target sequences of all small-molecule drugs in FASTA format. The basic dynamic programming approach of Needleman and Wunsch (1970) for global alignment can be used to compare protein sequences pairwise. The percentage of pairwise sequence identity (Raghava 2006) can then be taken as the corresponding sequence similarity. Equation 2 was used to calculate drug–drug similarity based on target sequence similarities:

where δt (di, dj) denotes the target-based similarity between drugs di and dj, Ti is the set of proteins targeted by drug di, Tj is the set of proteins targeted by drug dj, and S(x,y) is a symmetric sequence-based similarity measure between two target proteins x \(\in \) Ti and y \(\in \) Tj. Overall, Eq. 2 calculates the average of the best matches, wherein each target of the first drug is matched only to the most similar target of the second drug, and vice versa.
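As a rough illustration of the target-based similarity, the sketch below pairs a small Needleman-Wunsch global alignment with a best-match average. Several details are assumptions made for the example rather than facts from the reviewed work: the scoring scheme (match 1, mismatch -1, gap -1), defining identity as matching columns divided by alignment length, normalizing the best-match sums by |Ti| + |Tj|, and all function names and peptide strings.

```python
def needleman_wunsch(a, b, match=1, mismatch=-1, gap=-1):
    """Global alignment of strings a and b; returns the two gapped strings."""
    n, m = len(a), len(b)
    # score[i][j] is the best score for aligning a[:i] with b[:j].
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            score[i][j] = max(score[i - 1][j - 1] + sub,
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)
    # Trace back from the bottom-right cell to recover one optimal alignment.
    out_a, out_b = [], []
    i, j = n, m
    while i > 0 or j > 0:
        sub = match if i > 0 and j > 0 and a[i - 1] == b[j - 1] else mismatch
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub:
            out_a.append(a[i - 1])
            out_b.append(b[j - 1])
            i -= 1
            j -= 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            out_a.append(a[i - 1])
            out_b.append("-")
            i -= 1
        else:
            out_a.append("-")
            out_b.append(b[j - 1])
            j -= 1
    return "".join(reversed(out_a)), "".join(reversed(out_b))


def sequence_identity(a, b):
    """Fraction of alignment columns holding identical residues (assumed definition)."""
    aligned_a, aligned_b = needleman_wunsch(a, b)
    if not aligned_a:
        return 0.0
    matches = sum(x == y for x, y in zip(aligned_a, aligned_b))
    return matches / len(aligned_a)


def best_match_average(targets_i, targets_j, sim=sequence_identity):
    """Average of each target's best match in the other drug's target set."""
    if not targets_i or not targets_j:
        return 0.0
    best_i = sum(max(sim(x, y) for y in targets_j) for x in targets_i)
    best_j = sum(max(sim(x, y) for x in targets_i) for y in targets_j)
    return (best_i + best_j) / (len(targets_i) + len(targets_j))


# Hypothetical short peptides standing in for full target sequences.
targets_a = ["MKTAYIAK", "GAVLIPFW"]
targets_b = ["MKTAYIAK"]
target_similarity = best_match_average(targets_a, targets_b)
```

Passing `sim` as a parameter keeps the aggregation step independent of how S(x,y) is obtained, so a precomputed similarity table could be used instead of aligning sequences on the fly.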

4.4.1.3 Target protein functional similarity

Protein targets that are enriched for similar biological functions and have similar sequences imply shared pharmacological mechanisms and downstream effects (Passi et al. 2018). Each protein has an associated set of Gene Ontology (GO) terms from all three categories, namely cellular components (CC), molecular functions (MF), and biological processes (BP). GO terms that were either very specific (with 15 linked genes) or very general (with 100 genes) were filtered out. DrugBank (2019) provided the Human Protein–Protein Interaction (PPI) network. Wang et al. (2007) proposed leveraging the topology of the GO graph structure to determine the semantic similarity of GO terms, which was used to determine how functionally similar two drugs are, i.e., δf (di, dj). Using a best-match average technique, the pairwise semantic similarities between the GO terms associated with di and dj were aggregated into a single semantic similarity measure and entered into a final similarity matrix.

4.4.1.4 Drug-induced pathway similarity

A drug pair that triggers similar or overlapping pathways indicates that the drugs' mechanisms of action are similar, which is useful information for drug similarity and repositioning research (Zeng et al. 2015). KEGG (Kanehisa and Goto 2000) was used to find the pathways activated by each small-molecule drug. The pairwise similarity of any two pathways was calculated with the Dice similarity coefficient, based on the overlap of their constituent genes. After that, a pathway-based similarity score for each drug pair di and dj, i.e., δp (di, dj), was calculated using Eq. 3:

where Pi and Pj are the sets of pathways induced by drugs di and dj, respectively; x and y are two pathways, each represented by the set of its constituent genes; and \(DSC\left( {x,y} \right) = \frac{{2\left| {x \cap y} \right|}}{{\left| x \right| + \left| y \right|}}\) is the Dice similarity coefficient, which measures how much the two pathways overlap. When no gene is shared by any two pathways induced by the drug pair being compared, the similarity is set to 0.0. Overall, Eq. 3 implies that if two drugs induce one or more identical pathways, the maximum pathway-based similarity is achieved.
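A short sketch may help make the pathway-based score concrete. It assumes, following the closing remark about Eq. 3, that the drug-level score is the maximum Dice coefficient over all pathway pairs; that reading, the function names, and the example gene sets are assumptions introduced here.

```python
def dice(x, y):
    """Dice similarity coefficient between two gene sets."""
    x, y = set(x), set(y)
    if not x and not y:
        return 0.0
    return 2 * len(x & y) / (len(x) + len(y))


def pathway_similarity(pathways_i, pathways_j):
    """Assumed reading of Eq. 3: best Dice score over all pathway pairs."""
    return max(
        (dice(x, y) for x in pathways_i for y in pathways_j),
        default=0.0,  # no pathways on one side: similarity 0.0
    )


# Hypothetical pathways, each given as the set of its constituent genes.
drug_a_pathways = [{"TP53", "MDM2", "CDKN1A"}, {"EGFR", "KRAS"}]
drug_b_pathways = [{"EGFR", "KRAS", "BRAF"}, {"PIK3CA", "AKT1"}]

# Best pair is {"EGFR", "KRAS"} vs {"EGFR", "KRAS", "BRAF"}: 2*2 / (2+3).
pathway_score = pathway_similarity(drug_a_pathways, drug_b_pathways)
```

Under this reading, two drugs that share even one identical pathway reach a score of 1.0, and drugs whose pathways share no genes score 0.0.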

4.4.2 DL for drug similarity prediction

This section reviews the DL algorithms most widely used by researchers to predict drug similarity. Wang et al. (2019) introduced a gated recurrent unit (GRU) model that uses similarity to predict drug–disease interactions. In this approach, the Chemistry Development Kit (CDK) converted the SMILES into 2D chemical fingerprints, and the Jaccard score of the 2D chemical fingerprints was used to compare two drugs.

Hirohara et al. (2018) employed a CNN to learn molecular representations. The molecule's SMILES notation is given to the network as input and fed into the convolutional layers. The Tox21 dataset was used.

To conduct similarity analysis, Cheng et al. (2019) used the Anatomical Therapeutic Chemical (ATC) classification system and the ATC code-based similarities of drug pairs. The authors created interaction networks, performed drug pair similarity analyses, and developed a network-based methodology for identifying clinically effective treatment combinations for a specific condition.

Xin et al. (2016) presented a Ranking-based k-Nearest Neighbour (Re-KNN) technique for drug repositioning. The method's key feature is that it combines the Ranking SVM (Support Vector Machine) algorithm with the traditional KNN algorithm. They used four types of similarity computation: chemical structural similarity, target-based similarity, side-effect similarity, and topological similarity. The Tanimoto score was then used to determine the similarity between two profiles.

Seo et al. (2020) proposed an approach that combines drug–drug interactions from DrugBank, network-based drug–drug interactions, single-nucleotide polymorphisms, and the anatomical hierarchy of side effects, as well as indications, targets, and chemical structures.

Zeng et al. (2019) developed a measure of clinical drug–drug similarity derived from clinical data, using electronic health records (EHRs) to analyse and establish drug–diagnosis associations. Using the Bonferroni-adjusted hypergeometric P value, they identified associations between drugs and diagnoses in an EMR dataset. The distances between drugs were assessed using the Jaccard similarity coefficient, and a k-means algorithm was used to form drug clusters.
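The statistical test mentioned here can be sketched as follows. This is a generic illustration of a one-sided hypergeometric enrichment test with a Bonferroni correction, not the authors' pipeline; the parameter names, the patient counts, and the number of tests are hypothetical.

```python
from math import comb


def hypergeom_upper_tail(k, population, successes, draws):
    """P(X >= k) when `draws` items are sampled without replacement from
    `population` items, of which `successes` carry the property of interest."""
    upper = min(successes, draws)
    total = comb(population, draws)
    favourable = sum(
        comb(successes, i) * comb(population - successes, draws - i)
        for i in range(k, upper + 1)
    )
    return favourable / total


def bonferroni(p_value, n_tests):
    """Bonferroni adjustment: multiply by the number of tests, cap at 1."""
    return min(1.0, p_value * n_tests)


# Hypothetical counts: 1000 patients, 50 with the diagnosis,
# 40 on the drug, 12 with both.
p_raw = hypergeom_upper_tail(12, population=1000, successes=50, draws=40)
# Hypothetical number of drug-diagnosis pairs tested.
p_adjusted = bonferroni(p_raw, n_tests=5000)
```

A drug–diagnosis pair would be kept as an association when the adjusted value falls below the chosen significance level.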

Dai et al. (2020) reviewed and summarized representative methods and discussed applications of patient similarity. The authors discussed the value and applications of patient similarity networks. They also discussed ways to measure the similarity or distance between each pair of patients and classified them as unsupervised, supervised, or semi-supervised.

Yan et al. (2019) created BiRWDDA, a new computational method for drug repositioning that combines bi-random walk with multiple similarity measures to uncover potential associations between diseases and drugs. First, drug–drug and disease–disease similarities are assessed to identify optimal drug and disease similarities. The information entropy of the drug and disease similarities is evaluated to select the appropriate similarities. Four drug–drug similarity measures and three disease–disease similarity measures were calculated from drug- and disease-related characteristics to create a heterogeneous network. The four drug–drug similarities are based, respectively, on the drug's protein sequence information, drug interactions extracted from DrugBank (scored with the Jaccard coefficient), the chemical structure (derived from canonical SMILES in DrugBank), and side effects.

Yi et al. (2021) constructed a deep gated recurrent unit model to predict potential drug–disease interactions using a wide range of similarity measures and a Gaussian interaction profile kernel. The similarity measure, based on chemical fingerprints, is used to derive distinguishing features of drugs. Meanwhile, the Gaussian interaction profile kernel is used to derive efficient disease features based on established disease–disease relationships. A deep gated recurrent unit model is then built to predict potential drug–disease interactions. The experimental results showed that the proposed algorithm could be used to predict novel drug indications or disease treatments and to speed up drug repositioning and related drug research and discovery.
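For readers unfamiliar with the Gaussian interaction profile kernel, a minimal sketch is given below. It follows the commonly used form exp(-γ‖IP_i − IP_j‖²), with γ set to a base bandwidth divided by the mean squared norm of the profiles. Whether the reviewed model uses exactly this normalization is an assumption, as are the function name, the `gamma_prime` default, and the toy binary profiles.

```python
from math import exp


def gip_kernel(profiles, gamma_prime=1.0):
    """Gaussian interaction profile kernel matrix for a list of profiles.

    Each profile is one row of a binary association matrix,
    for example one disease's known links to every other entity.
    """
    n = len(profiles)
    if n == 0:
        return []
    mean_sq_norm = sum(sum(v * v for v in p) for p in profiles) / n
    if mean_sq_norm == 0:
        raise ValueError("all interaction profiles are zero")
    # Bandwidth normalized by the average squared profile norm (assumed form).
    gamma = gamma_prime / mean_sq_norm
    kernel = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            sq_dist = sum((a - b) ** 2 for a, b in zip(profiles[i], profiles[j]))
            kernel[i][j] = exp(-gamma * sq_dist)
    return kernel


# Hypothetical interaction profiles for three diseases.
disease_profiles = [
    [1, 0, 1],
    [1, 0, 1],
    [0, 1, 0],
]
disease_kernel = gip_kernel(disease_profiles)
```

Identical profiles give a kernel value of 1, and the value decays towards 0 as profiles share fewer known associations.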

To predict DDIs, Yan et al. (2022) proposed a semi-supervised learning technique (DDI-IS-SL). DDI-IS-SL uses the cosine similarity method to calculate drug feature similarity by combining chemical, biological, and phenotypic data. The integrated drug information includes drug chemical structures, drug–target interactions, drug enzymes, drug transporters, drug pathways, drug indications, drug side effects, harmful effects of drug discontinuation, and known DDIs.
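Cosine similarity between two drug feature vectors can be written compactly. The sketch below is illustrative only; the feature vectors are hypothetical binary indicators, and returning 0.0 for an all-zero vector is a convention chosen here.

```python
from math import sqrt


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length feature vectors."""
    if len(u) != len(v):
        raise ValueError("feature vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        # Assumption: a drug with no features is similar to nothing.
        return 0.0
    return dot / (norm_u * norm_v)


# Hypothetical binary features (e.g. presence of a target, enzyme, side effect).
drug_a_features = [1, 0, 1, 1]
drug_b_features = [1, 1, 0, 1]
feature_similarity = cosine_similarity(drug_a_features, drug_b_features)
```

Here the two drugs share two of their three features each, giving 2 / (√3 · √3) = 2/3.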

Heba et al. (2021) used DrugBank to develop a similarity-based machine learning framework called "SMDIP" (Similarity-based ML for Drug Interaction Prediction). They calculated drug–drug similarity using the Russell–Rao metric on the biological and structural data currently available in DrugBank to represent the limited feature space. DDI classification is carried out using logistic regression, with an emphasis on finding the main similarity predictors. The key DDI features are then passed to six machine learning models (NB: naive Bayes; LR: logistic regression; KNN: k-nearest neighbours; ANN: artificial neural network; RFC: random forest classifier; SVM: support vector machine).
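The Russell–Rao measure is simple enough to show directly: it is the number of positions where both binary vectors are 1, divided by the total number of positions. The sketch below assumes binary feature vectors; the function name and example vectors are hypothetical.

```python
def russell_rao(u, v):
    """Russell-Rao similarity between two equal-length binary vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    if not u:
        return 0.0
    # Count positions where both drugs have the feature.
    both_present = sum(1 for a, b in zip(u, v) if a and b)
    return both_present / len(u)


# Hypothetical binary feature vectors for two drugs.
drug_a_bits = [1, 1, 0, 0, 1]
drug_b_bits = [1, 0, 0, 1, 1]
rr_similarity = russell_rao(drug_a_bits, drug_b_bits)
```

Unlike the Tanimoto coefficient, the denominator counts every feature position, including those absent from both drugs, so sparse vectors receive low scores even when they overlap fully.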

For large-scale DDI prediction, Vilar et al. (2014) provided a procedure combining five drug similarity measures (two-dimensional structural fingerprints, interaction profile fingerprints, target profile fingerprints, ADE profile fingerprints, and three-dimensional pharmacophoric techniques).

Song et al. (2022) used similarity theory and a convolutional neural network to create global structural similarity features. They employed a transformer to extract local chemical substructure semantic features for drugs and proteins. The Tanimoto coefficient, Levenshtein distance, and a CNN are all used to create the global structural similarity features of drugs and proteins.
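Of the measures named in this paragraph, the Levenshtein distance is the one not yet illustrated in this section. A minimal dynamic-programming sketch follows. Turning the distance into a similarity by dividing by the longer string's length is an assumption made for the example, as are the function names and the toy SMILES strings.

```python
def levenshtein(s, t):
    """Minimum number of single-character edits turning s into t."""
    # prev[j] holds the distance between the processed prefix of s and t[:j].
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, 1):
        curr = [i]
        for j, ct in enumerate(t, 1):
            cost = 0 if cs == ct else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def levenshtein_similarity(s, t):
    """Assumed normalization: 1 - distance / length of the longer string."""
    longest = max(len(s), len(t))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(s, t) / longest


# Toy SMILES strings: ethanol vs ethylamine differ by one character.
smiles_similarity = levenshtein_similarity("CCO", "CCN")
```

The same function applies unchanged to protein sequences, which is one reason edit distance is convenient as a shared global similarity for both drugs and proteins.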

5 Benchmark datasets and databases