Key Points

- Describes how quality assessment of randomised clinical trial (RCT) reports can be used for locating sources of bias.
- Shows that most RCTs in dental and medical research were inadequately reported.
- Demonstrates a large variation in the quality of RCT reports in dental and medical research.
- Shows that the journal impact factor was not correlated with the quality of RCT reports.

Abstract

Objective To assess 1) the quality of reporting randomised clinical trials in dental (RCT-Ds) and medical research (RCT-Ms), 2) the quality of RCT reports in relation to the journal impact factor, 3) the source of funding, and 4) the quality of RCT-Ds in different areas of dental research.

Design Random samples of 100 RCT-Ds and 100 RCT-Ms published in 1999 were evaluated for quality of reporting under blinded conditions with the Jadad quality assessment scale. In addition, the relation of the quality scores to the journal impact factor, the source of funding and the area of dental research was analysed.

Results The quality of RCT-Ds and RCT-Ms published in 1999 was generally inadequate. The quality was largely equivalent in RCT-Ds and RCT-Ms. There was no correlation between the quality scores and the journal impact factor or the source of funding. Some differences were found in the quality scores between different areas of dental research.

Conclusions The results from these RCT-Ds and RCT-Ms show that most of them were imperfect in the reporting of methodology and trial conduct. There is a clear need to improve the quality of trial reporting in dental and medical research.

Main

Randomised clinical trials (RCTs) are regarded as the most reliable method of evaluating the effects of interventions in healthcare.1,2,3 RCTs are also considered the 'gold standard' for providing research evidence for interventions in evidence-based healthcare.3 The validity and reliability of trial results are, however, largely dependent on the study design and the methodology in its conduct. Reporting of RCTs has been a subject of concern that has led to an international agreement on how trials should ideally be reported in medical journals, the CONSORT statement.4,5 This includes a checklist of 21 items related to different aspects of a trial report that are considered important in the publication of an RCT.4,5

Jadad et al. defined the quality of a trial, with emphasis on the methodological quality, as 'the confidence that the trial design, conduct, and analysis have minimised or avoided biases in its treatment comparisons'.6 In addition, the quality of a trial report was given a somewhat different and more suitable definition: 'providing information about the design, conduct, and analysis of the trial'.6 Both definitions relate to attempts to eliminate bias.

There are several scales and checklists available for quality assessment of RCTs.1 One widely used, reliable, and to our knowledge, only validated quality scale has been developed by Jadad et al.1,6 It focuses on the methods for random allocation, double-blinding and withdrawals and drop-outs. The total scores range from 0 to 5 points, where trials with 0–2 points are considered to be of poor quality, and those with 3–5 points represent high quality RCTs.6 The Jadad scale has been widely used in different areas of medicine,7,8,9,10,11,12 but has not yet found its way to dental research. Quality assessments of RCTs in dental research (RCT-Ds) are scarce.13,14 Thus, we conducted this study with the primary aim of evaluating and comparing the quality of trial reports in a random sample of RCT-Ds and RCTs in medical research (RCT-Ms). Secondary aims were to assess the reporting of allocation concealment, the quality of RCTs in relation to the journal impact factor and the source of funding, but also to evaluate the quality of RCT-Ds in different areas of dental research.

Material and methods

Medline searches

The Medline database (Entrez PubMed, www.ncbi.nlm.nih.gov) was searched for RCT-Ds and RCT-Ms (September 2000), using the MeSH terms: 'dentistry' and 'medicine', respectively, limited to publication year '1999', publication type 'randomized controlled trial', and language 'English'.

Inclusion and exclusion criteria

The RCTs were scrutinised for relevance to dental and medical research, respectively. RCTs covering other research areas were excluded from the study. Moreover, articles that were found during the quality assessments to be non-randomised trials or in vitro experimental studies were excluded from further analysis. The proportion of false inclusions in the Medline searches was calculated.

Outcome measures

The primary outcome measures were the Jadad RCT quality scores.1,6 Secondary outcome measures were the reporting of allocation concealment,15 the relation of quality scores to journal impact factor or source of funding, and the quality of RCT-Ds in different areas of dental research (the main topic covered by the trial).

Sample size

Power calculations with 95% power (p=0.05) in order to detect differences of one point (or equivalence) in the quality scores between RCT-Ds and RCT-Ms gave a minimum sample size of 67 RCTs per group. We chose to randomise 100 RCT-Ds and 100 RCT-Ms, using a computer-generated random number table.
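The paper does not report the formula or the standard deviation behind this power calculation. As a rough illustration only, the sketch below uses the common normal-approximation formula for comparing two means. The function name `n_per_group` and the standard deviation of 1.6 points are hypothetical and not taken from the paper; that value merely happens to yield 67 under this particular formula.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.95):
    """Approximate sample size per group for a two-sided, two-sample
    comparison of means (normal approximation).

    delta: smallest difference to detect (here, one Jadad point)
    sd:    assumed common standard deviation (NOT reported in the paper)
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired power
    return math.ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


# Hypothetical SD of 1.6 points, chosen for illustration only.
print(n_per_group(delta=1.0, sd=1.6))
```

Because the analyses in the study were non-parametric, the authors may well have used a different method; the sketch only shows how the detectable difference, significance level and power combine into a minimum group size.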

Randomisation and blinding

The RCTs were randomised and coded by an independent laboratory technician. The laboratory technician also obtained, photocopied in duplicate and masked one copy of each RCT with an opaque black marker pen, deleting the authors' names, titles and affiliations, the journal title, country of origin, source of funding and acknowledgements.6 The technician coded the masked RCTs (computer-generated random number table) and kept the code and the unmasked copies concealed from the authors during the quality assessments.
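The sampling and coding steps described above could be sketched as follows. This is a minimal illustration, not the authors' procedure: the function name `draw_and_code`, the seed and the use of Python's `random` module in place of a random number table are all assumptions.

```python
import random


def draw_and_code(hit_ids, sample_size, seed):
    """Randomly select reports from the search hits and give each a masked code.

    Returns a dict mapping masked code -> original report id. In the study,
    the corresponding key was held by the technician, not by the raters.
    """
    rng = random.Random(seed)                  # fixed seed makes the draw reproducible
    sampled = rng.sample(hit_ids, sample_size)  # sampling without replacement
    codes = list(range(1, sample_size + 1))
    rng.shuffle(codes)                         # codes carry no information about order
    return dict(zip(codes, sampled))


# 251 dental search hits, identified here by hypothetical running numbers.
dental_hits = list(range(1, 252))
key = draw_and_code(dental_hits, sample_size=100, seed=1999)
print(len(key))
```

Raters would see only the masked codes; the key is consulted after all ratings are complete.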

Quality assessments

A validated quality scale (Jadad scale) was used for quality assessments of the RCTs.1,6 The scale includes three items directly related to the validity of RCTs, giving a total score of 0 to 5 points (0–2 points=poor quality, 3–5 points=high quality), described in detail elsewhere.6 Briefly, when it was stated that random allocation was used, one point was given. One additional point was given if the method for random allocation was appropriate. If an inappropriate method was used, one point was withdrawn. In addition, one point was given if it was stated that the study was double-blinded. If the method for double-blinding was appropriate, an additional point was given; if it was inappropriate, one point was deducted. Reporting of withdrawals and drop-outs was given one point if the study stated, for each group, the number of subjects who were included in the study but discontinued, and the reasons for discontinuation.6
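The scoring rules summarised above can be written out as a small function. This is a sketch based only on the description in this section; the class and field names are illustrative, and the full scale (reference 6) contains guidance on what counts as an appropriate method that is not encoded here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrialReport:
    """Items extracted from one trial report (names are illustrative)."""
    described_as_randomised: bool
    # True = appropriate method, False = inappropriate, None = not described
    randomisation_method_ok: Optional[bool]
    described_as_double_blind: bool
    blinding_method_ok: Optional[bool]
    withdrawals_described: bool


def jadad_score(report: TrialReport) -> int:
    """Return the 0-5 score following the rules described in the text."""
    score = 0
    if report.described_as_randomised:
        score += 1
        if report.randomisation_method_ok is True:
            score += 1          # appropriate method reported
        elif report.randomisation_method_ok is False:
            score -= 1          # inappropriate method reported
    if report.described_as_double_blind:
        score += 1
        if report.blinding_method_ok is True:
            score += 1
        elif report.blinding_method_ok is False:
            score -= 1
    if report.withdrawals_described:
        score += 1
    return score


def quality_label(score: int) -> str:
    """0-2 points = poor quality, 3-5 points = high quality."""
    return "high" if score >= 3 else "poor"


# A report stating randomisation (method not described), no blinding,
# and complete reporting of withdrawals and drop-outs.
example = TrialReport(True, None, False, None, True)
print(jadad_score(example), quality_label(jadad_score(example)))
```

A report that only states randomisation and describes withdrawals therefore reaches 2 points and is classed as poor quality, whatever the underlying conduct of the trial.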

The rater (PS) was trained by assessing RCTs that were previously rated with the Jadad scale.7,11 In order to ensure the consistency of the assessments throughout the study, all RCTs were rated at least twice.15 Furthermore, twenty randomly selected RCTs (computer-generated random number table) were assessed by both authors to verify the reliability of the scorings, and doubtful cases were resolved by consensus. In addition, reporting of allocation concealment was assessed. When all ratings were completed, the random code for masking was broken. No quality scores were changed after this point.

Data analyses and statistics

After the quality assessments, the scores were paired with the unmasked RCTs for analysis of the secondary outcome measures. Journal impact factors for 1999 were obtained from the Journal Citation Reports® database (Institute for Scientific Information). The source of funding was, when stated, registered as 'research or community funded' or 'industry funded', or as a combination of both. Statistical analyses were performed with SPSS 9.0 for Windows, using non-parametric methods: the Kruskal-Wallis test, the Mann-Whitney U test, and Spearman's rank correlation coefficient (rs). P levels below 5% were considered significant.
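To make the correlation measure concrete, the sketch below computes Spearman's rs as the Pearson correlation of average ranks, which handles the many tied values that a 0 to 5 scale produces. It is a plain-Python illustration, not the SPSS routine used in the study, and the example data are invented.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rs(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks.

    Undefined (division by zero) if either variable is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(vx * vy)


# Invented example: Jadad scores and journal impact factors for six reports.
scores = [1, 2, 2, 3, 1, 5]
impact = [0.8, 1.5, 0.6, 2.1, 3.0, 1.1]
print(round(spearman_rs(scores, impact), 3))
```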

Results

Medline searches

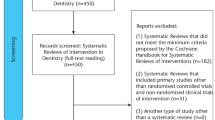

The Medline searches gave a total of 251 search hits for RCT-Ds and 181 for RCT-Ms. Of these, 100 RCT-Ds and 100 RCT-Ms were randomly allocated to this study. Reports covering other research areas than dentistry (n=5) or medicine (n=1) were excluded, as were reports that were non-RCT-Ds (n=3) or non-RCT-Ms (n=10), or were in vitro studies in dental (n=9) or medical (n=0) research.

A total of 83 RCT-Ds and 89 RCT-Ms remained throughout the study for analysis. Thus, the proportion of false inclusions in the Medline searches was 17% for RCT-Ds and 11% for RCT-Ms. The RCTs were published in 101 different journals and originated from 26 countries worldwide.
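The counts above can be checked with a few lines of arithmetic. The sketch uses only figures reported in this section; the function name is illustrative.

```python
def remaining_after_exclusions(sampled, other_area, non_rct, in_vitro):
    """Return (reports left for analysis, proportion of false inclusions)."""
    excluded = other_area + non_rct + in_vitro
    return sampled - excluded, excluded / sampled


dental = remaining_after_exclusions(100, other_area=5, non_rct=3, in_vitro=9)
medical = remaining_after_exclusions(100, other_area=1, non_rct=10, in_vitro=0)

# Share of all Medline search hits (251 dental + 181 medical) that was sampled.
sampled_share = (100 + 100) / (251 + 181)

print(dental, medical, round(100 * sampled_share))
```

The exclusions leave 83 and 89 reports with false-inclusion proportions of 17% and 11%, and the 200 sampled reports correspond to about 46% of the 432 search hits.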

Randomisation

All RCTs were reported as randomised, but an adequate method of random allocation was reported in less than one third of the RCT-Ds and RCT-Ms. An inadequate method was reported in 6% of the RCT-Ds and RCT-Ms. The remaining two thirds of the RCT-Ds and RCT-Ms did not report the method of random allocation (Table 1).

Double-blinding

Double-blinding was reported in 28% of the RCT-Ds and in 4% of the RCT-Ms. An appropriate method of double blinding was found in 18% of the RCT-Ds and in 2% of the RCT-Ms. Inadequate double blinding was reported in 1% of the RCT-Ds and in 0% of the RCT-Ms (Table 1).

Withdrawals and drop-outs

A complete reporting of withdrawals and drop-outs was given in 35% of the RCT-Ds and in 44% of the RCT-Ms (Table 1).

Allocation concealment

Allocation concealment was reported in 13% of the RCT-Ds and in 9% of the RCT-Ms (Table 1).

Quality scores

High quality scores (3–5 points on the Jadad scale) were assigned to 27% of the RCT-Ds and to 18% of the RCT-Ms. The difference between RCT-Ds and RCT-Ms was not significant (Table 2).

The median Jadad quality score was 2.0 for RCT-Ds and for RCT-Ms. There were no significant differences between the RCT-D and RCT-M scores, indicating equal quality (Table 3).

Journal impact factor

There was no correlation between the Jadad quality scores and the journal impact factor for RCT-Ds (rs=0.140) or for RCT-Ms (rs=0.271).

Source of funding

There were no significant differences in the Jadad quality scores between RCTs that were 'research or community funded' (median Jadad score 2.0, n=63) or 'industry funded' (median score 2.0, n=28). The remaining RCTs were funded by a combination of 'research or community funds' and 'industry' (median score 2.0, n=17), or the source of funding was not stated (median score 2.0, n=64).

Area of dental research

The quality scores for RCT-Ds differed between the areas of dental research. RCT-Ds about oral surgery had significantly higher median quality scores, compared with all other areas grouped together. In addition, oral surgery had significantly higher quality scores than periodontics and restorative treatment. No other significant differences were found. The number of RCT-Ds in many areas was inadequate for subgroup analyses (Table 4).

Discussion

The median quality scores of RCT-Ds and RCT-Ms published in 1999 indicated an overall inadequate quality. The quality was equal for RCT-Ds and RCT-Ms.

The random sample of RCTs in this study constituted 46% of the total number of RCT-Ds and RCT-Ms published in 1999 that were available on Medline. The power calculations indicated an adequate sample size for analyses of equivalence in the quality of RCT-D and RCT-M reports.

The quality of an RCT is dependent on all aspects of the study design and trial conduct. A few key features have been shown to have a discriminating effect in assessments of the scientific quality of a trial report.6,15 The internal validity of an RCT is strongly related to reporting of adequate methodology for random allocation, double-blinding, patient follow-up and allocation concealment.1,15 It has been shown that trials with poor or inadequately reported methodology tend to exaggerate the treatment effects.9,16 Empirical evidence supports the view that inadequate random allocation leads to systematic errors in estimates of intervention effects due to selection bias.15,16 So far, proper randomisation is the only known way to eliminate selection bias from the trial.15 In line with Dickinson et al17 we found that less than a third of the RCTs reported an adequate method of randomisation.

Double-blinding is related to ascertainment bias and when lacking, is associated with overestimation of treatment effects.15,16 Our results indicated that 28% of the RCT-Ds and 4% of the RCT-Ms were double-blinded. This record may prove difficult to improve, since in many trials double-blinding is difficult to achieve. Moreover, the smaller proportion of double-blinded RCT-Ms in comparison to RCT-Ds may be a reflection of differences between these disciplines.

We found complete reporting of withdrawals and drop-outs in about four out of ten RCTs. Reporting of withdrawals and drop-outs is mainly related to the number of subjects discontinuing the trial and their reasons for doing so,6 and concerns the possible relation of drop-outs to the intervention itself. Ideally, all subjects who were included in the trial should be analysed according to the intention-to-treat principle.2

Trials without allocation concealment tend to overestimate the treatment effects.15 We found appropriately reported allocation concealment in about one out of ten RCTs. This is a low proportion compared with earlier findings, where about one third of the RCT-Ms that had been included in various meta-analyses were adequately concealed.15 This difference is largely explained by the fact that RCTs included in meta-analyses have already passed a rigorous quality control, selecting high quality RCTs.

The journal impact factor is often viewed as a measure of scientific quality,18,19 which is why we chose to analyse its relation to the quality scores of RCTs. Our findings indicated no correlation between the journal impact factor and the quality of RCTs. The journal impact factor is the ratio of the number of citations to a journal to the number of citable items it published during the previous two years.18,19 Thus, journals with many review articles or methodology papers have relatively high impact factors and journals in smaller or less active research areas have relatively low impact factors. It should nevertheless be recognised that many journals with high impact factors have a rigorous review process before accepting an article. Although it has been shown that industrial funding may influence the outcomes of a trial,20 we found no differences in the quality of reporting between industry funded and research or community funded RCTs.

Differences in the quality of RCT-Ds were found between different areas of dental research. These results should, however, be regarded with caution as the variations were wide and the sample size was calculated with respect to the primary outcome measures. Thus, the number of RCT-Ds in each area was too small for meaningful subgroup analyses.

Conclusion

Considering our findings, the quality of RCTs was generally inadequate. The journal impact factor may not be regarded as a measure of the quality of RCTs. Furthermore, it seems that the quality of RCTs is not influenced by the source of funding. Differences in the quality of RCT-Ds between different areas of dental research seem to exist, but larger samples are needed in order to draw meaningful conclusions. It is possible that many of the RCTs with poor quality scores are, in fact, high quality trials. However, it was not possible to evaluate this, due to a lack of information in the trial reports. The importance of adequate reporting of the methodology in the RCT conduct cannot be sufficiently emphasised and the quality of RCTs needs to be improved if we are to practise evidence-based healthcare.

References

1. Moher D, Jadad AR, Nichol G, Penman M, Tugwell P, Walsh S. Assessing the quality of randomised controlled trials: An annotated bibliography of scales and checklists. Control Clin Trials 1995; 16: 62–73.

2. Jadad AR. Randomised controlled trials. pp 28–36. London: BMJ Books, 1998.

3. Sackett DL, Rosenberg WMC, Gray JAM, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn't. Br Med J 1996; 312: 71–72.

4. Begg C, Cho M, Eastwood S et al. Improving the quality of reporting of randomised controlled trials: the CONSORT statement. J Am Med Assoc 1996; 276: 637–639.

5. Altman DG. Better reporting of controlled trials: the CONSORT statement. Br Med J 1996; 313: 570–571.

6. Jadad AR, Moore A, Carroll D et al. Assessing the quality of reports of randomised clinical trials: Is blinding necessary? Control Clin Trials 1996; 17: 1–12.

7. Linde K, Clausius N, Ramirez G et al. Are the clinical effects of homeopathy placebo effects? A meta-analysis of placebo-controlled trials. Lancet 1997; 350: 834–843.

8. Moher D, Pham B, Jones A et al. Does quality of reports of randomised trials affect estimates of intervention efficacy reported in meta-analyses? Lancet 1998; 352: 609–613.

9. Khan KS, Daya S, Jadad AR. The importance of quality of primary studies in producing unbiased systematic reviews. Arch Intern Med 1996; 156: 661–666.

10. Moher D, Fortin P, Jadad AR et al. Completeness of reporting trials published in languages other than English: implications for conduct and reporting of systematic reviews. Lancet 1996; 347: 363–366.

11. McQuay H, Carroll D, Jadad AR, Wiffen P, Moore A. Anticonvulsant drugs for management of pain: a systematic review. Br Med J 1995; 311: 1047–1052.

12. Egger M, Zellweger-Zähner T, Schneider M, Junker C, Lengeler C, Antes G. Language bias in randomised controlled trials published in English and German. Lancet 1997; 350: 326–329.

13. Antczak AA, Tang J, Chalmers TC. Quality assessment of randomised control trials in dental research I. Methods. J Periodontal Res 1986; 21: 305–314.

14. Antczak AA, Tang J, Chalmers TC. Quality assessment of randomised control trials in dental research II. Results. J Periodontal Res 1986; 21: 315–321.

15. Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias. J Am Med Assoc 1995; 273: 408–412.

16. Chalmers TC, Celano P, Sacks HS, Smith H. Bias in treatment assignment in controlled clinical trials. New Engl J Med 1983; 309: 1358–1361.

17. Dickinson K, Bunn F, Wentz R, Edwards P, Roberts I. Size and quality of randomised controlled trials in head injury: review of published studies. Br Med J 2000; 320: 1308–1311.

18. Garfield E. Citation analysis as a tool in journal evaluation. Science 1972; 178: 471–479.

19. Garfield E. Significant journals of science. Nature 1976; 264: 609–615.

20. Cho MK, Bero LA. The quality of drug studies published in symposium proceedings. Ann Intern Med 1996; 124: 485–489.

Acknowledgements

The authors thank the laboratory technician Kristina Hallberg for valuable assistance with the randomisation and masking of the RCTs, and Johan Byrsjö at the Centre for Public Health Sciences, Linköping, Sweden, for his advice with the statistical analyses. This study was funded with grants from the Research Council of Public Dental Services, County of Östergötland, Sweden.

Additional information

Refereed paper

About this article

Cite this article

Sjögren, P., Halling, A. Quality of reporting randomised clinical trials in dental and medical research. Br Dent J 192, 100–103 (2002). https://doi.org/10.1038/sj.bdj.4801304

DOI: https://doi.org/10.1038/sj.bdj.4801304
